In my last post I proposed Hive-Engine light nodes. If you are wondering what those are, you can find more information in that post.

In this post I will give an update on how light nodes are coming along, plus a few other small changes.

Light nodes

Since my last post, the light node PR has been approved by the engine team and merged into the main repository. By the way, the engine GitHub has moved to a new repository, which you can find here: https://github.com/hive-engine/hivesmartcontracts.

There was a bit of discussion about light nodes at the start, but they are coming along quite nicely now. We have fixed some minor issues and adapted a few things, including the default `blocksToKeep`, which was raised to 864000 blocks: roughly 30 days of engine block history that light nodes keep.
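The 30-day figure follows from Hive's 3-second block interval, assuming roughly one engine block per Hive block (which is what "864000 blocks ≈ 30 days" implies). Below is a minimal sketch of that arithmetic, plus a helper for the oldest block a light node would still hold. The function names are hypothetical and not taken from the hivesmartcontracts code, which may prune slightly differently.

```javascript
// Hypothetical helpers illustrating the blocksToKeep arithmetic.
// Assumption: roughly one engine block per 3-second Hive block.
const BLOCK_INTERVAL_SECONDS = 3;
const SECONDS_PER_DAY = 24 * 60 * 60;

// Approximate number of days of history covered by `blocksToKeep` blocks.
function retentionDays(blocksToKeep, intervalSeconds = BLOCK_INTERVAL_SECONDS) {
  return (blocksToKeep * intervalSeconds) / SECONDS_PER_DAY;
}

// Oldest block number still retained if the node keeps the last
// `blocksToKeep` blocks up to and including `headBlock`.
function oldestRetainedBlock(headBlock, blocksToKeep) {
  return Math.max(0, headBlock - blocksToKeep + 1);
}

console.log(retentionDays(864000)); // 30
console.log(oldestRetainedBlock(1000000, 864000)); // 136001
```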

In the meantime, 18 witnesses are already running light nodes, 6 of them even in the top 20. In the long term it would be best for all engine witnesses to transition to light nodes, as the cost of running a full node is too high compared to the rewards. In my opinion, 30 days of history should be sufficient for most use cases.

The capped block / transaction history is basically the only difference between light nodes and full nodes; otherwise they are equivalent. The largest benefit is that they drastically reduce storage size to ~12GB, compared to ~130GB for a full node. The snapshots are also only 4.5GB, compared to 57GB for full nodes.

Talking of snapshots...

Light node snapshots

In addition to the full node snapshots, I am now providing light node snapshots twice a day, which can be found over here https://snap.primersion.com/light.

They are much smaller in size, which results in a much faster restoration time of about 30 minutes, compared to a few hours (~6?) for full nodes.

Restoration / setup script

A few weeks ago I created another PR, which adds a script for easily repairing broken (forked) engine node databases or setting them up from scratch.

It can be found in the root directory of the repository (`restore_partial.js`), and the usage instructions are in the file itself:

```javascript
/**
 * Script for helping to restore / repair hive-engine node databases. The restore mode will simply drop the
 * existing database and do a full restore.
 * The repair mode will delete all invalid blocks / transactions and drop all other collections and restore the
 * last valid state using a light node snapshot.
 *
 * These are the different modes:
 * - FULL node:
 *   - Restore by executing `node restore_partial.js` (~30-60 minutes)
 *   - Drop by executing `node restore_partial.js -d -s https://snap.primersion.com/` (~6 hours)
 * - LIGHT node:
 *   - Drop by executing `node restore_partial.js -d` (~30-60 minutes)
 *   - Restore is not supported as dropping is faster
 * */
```
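To make the mode table concrete, here is a hypothetical sketch of how the documented flags map to actions. It is not the code from `restore_partial.js`. The `nodeType` parameter (in practice probably derived from the node's configuration) and the default light snapshot URL `https://snap.primersion.com/light` are assumptions based on this post. The no-flag mode is called "repair" here, matching the header of the usage notes, although the mode list calls it "Restore".

```javascript
// Hypothetical sketch of the restore_partial.js mode table; not the real script.
// Assumption: without -s, the light node snapshot is used as the source.
const DEFAULT_SNAPSHOT_URL = "https://snap.primersion.com/light";

// Parse the two documented flags: -d (drop) and -s <url> (snapshot source).
function parseArgs(argv) {
  const opts = { drop: false, snapshotUrl: DEFAULT_SNAPSHOT_URL };
  for (let i = 0; i < argv.length; i += 1) {
    if (argv[i] === "-d") {
      opts.drop = true;
    } else if (argv[i] === "-s") {
      if (i + 1 >= argv.length) throw new Error("-s requires a snapshot URL");
      opts.snapshotUrl = argv[i + 1];
      i += 1; // skip the URL we just consumed
    }
  }
  return opts;
}

// nodeType is "full" or "light" (hypothetical parameter).
function planRestore(nodeType, opts) {
  if (opts.drop) {
    // Drop the whole database and restore it from the chosen snapshot.
    return { action: "drop", snapshotUrl: opts.snapshotUrl };
  }
  if (nodeType === "light") {
    // Per the usage notes, repair is not supported for light nodes: dropping is faster.
    throw new Error("use -d for light nodes");
  }
  // Repair: remove invalid blocks / transactions, then restore the other
  // collections from the last valid state in the light node snapshot.
  return { action: "repair", snapshotUrl: opts.snapshotUrl };
}

// Example: full node, full drop from the full node snapshot.
console.log(planRestore("full", parseArgs(["-d", "-s", "https://snap.primersion.com/"])));
```

Keeping argument parsing separate from the decision logic makes the mode table easy to check in isolation, which is the only point of this sketch.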

HF26 beneficiary fix

HIVE's upcoming HF26 introduced a breaking change that altered the structure of beneficiaries. This resulted in engine nodes forking constantly, as nodes syncing blocks from HF26 HIVE nodes parsed the beneficiaries incorrectly. If you are interested, you can read more about the change in this PR: https://github.com/hive-engine/steemsmartcontracts/pull/153.

The new beneficiary format uses an object with `type` and `value` fields inside `extensions` instead of an array, e.g.:

```json
{
  "extensions": [
    {
      "type": "comment_payout_beneficiaries",
      "value": {
        "beneficiaries": [
          { "account": "alinares", "weight": 300 },
          { "account": "hiveonboard", "weight": 100 },
          { "account": "tipu", "weight": 100 }
        ]
      }
    }
  ]
}
```
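One way to avoid that kind of fork is to accept both shapes. The helper below is a hypothetical sketch, not the code from PR #153. It assumes the legacy (pre-HF26) shape is the array form `[0, { beneficiaries: [...] }]` and the new shape is the `{ type, value }` object shown above. Weights are in basis points, where 10000 = 100%, so 300 means 3%.

```javascript
// Hypothetical sketch (not the code from PR #153): read comment_options
// beneficiaries from either the legacy or the HF26 extensions format.
function extractBeneficiaries(extensions) {
  if (!Array.isArray(extensions)) return [];
  for (const ext of extensions) {
    // Legacy format (assumed): [0, { beneficiaries: [...] }]
    if (Array.isArray(ext) && ext[0] === 0 && ext[1] && Array.isArray(ext[1].beneficiaries)) {
      return ext[1].beneficiaries;
    }
    // HF26 format: { type: "comment_payout_beneficiaries", value: { beneficiaries: [...] } }
    if (
      ext !== null &&
      typeof ext === "object" &&
      ext.type === "comment_payout_beneficiaries" &&
      ext.value &&
      Array.isArray(ext.value.beneficiaries)
    ) {
      return ext.value.beneficiaries;
    }
  }
  return [];
}

// Weights are basis points: 10000 = 100%, so the sum / 100 is a percentage.
function totalBeneficiaryPercent(beneficiaries) {
  return beneficiaries.reduce((sum, b) => sum + b.weight, 0) / 100;
}

const hf26 = [{ type: "comment_payout_beneficiaries", value: { beneficiaries: [
  { account: "alinares", weight: 300 },
  { account: "hiveonboard", weight: 100 },
  { account: "tipu", weight: 100 },
] } }];
const legacy = [[0, { beneficiaries: hf26[0].value.beneficiaries }]];

console.log(totalBeneficiaryPercent(extractBeneficiaries(hf26)));   // 5
console.log(totalBeneficiaryPercent(extractBeneficiaries(legacy))); // 5
```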

Other changes

A few other changes were proposed and implemented by @rishi556 and @forykw, including:

- Show `getStatus` result directly when accessing a node
- Add support for NATed ports
- Improve `witness_action.js` script
- Add support for IPv6 nodes
- Add additional configuration options for the RPC interface

We are already at version 1.8.1, with hopefully more changes coming soon.

A lot has changed for the Hive / Engine monitoring site I am running as well, but more on that in my next post.

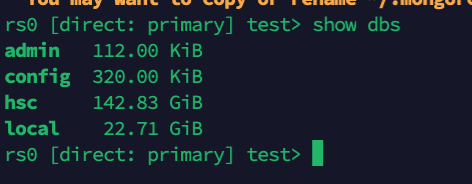

It's actually over 140 gigs for a full node. Running a full node definitely isn't cheap anymore.

The IPv6 changes are my personal favorite 😄. The skyrocketing cost of IPv4 addresses is really going to push more and more services to go IPv6-only to reduce expenses (with a large hosting provider you generally don't see IPv4 prices as high, since they have larger pools, but at smaller ones every last address becomes sacred, and they try not to use one wherever it can be spared).

One thing I've noticed when importing the snapshots is that RAM really seems to play a role. More physical RAM is always desirable. For a full node I think I got it done in just under 2 hours on a machine with 128 GB of RAM, vs about 6 hours on a machine with 32 GB. The partial restore helps a ton with this, since we don't have to restore every single element. Thank you for that.

I think continuing to drive down the cost of hosting nodes should definitely be a goal for the near future. The last few weeks have shown how much apps rely on just the one node hosted by the engine team, even though we have a decent number of full nodes to pick from. Getting app developers to host their own light nodes for certain applications would be ideal, but I guess not enough want to do it. I tried to make it even easier with a script that can do it all for them: https://git.dbuidl.com/rishi556/EngineSpeed.

The secret is IO =)

I've been doing a lot of experiments with ZFS lately, which have shown that even with much less RAM, performance improves quite quickly, and read access to the RAM cache is very beneficial for slower IO subsystems.

But of course, if one has NVMe drives for very big bursts, then it's like cloud nine, and ZFS will eat all that up... and it will be even faster. What I have been trying to understand is how best to manage ZFS memory usage (now understood), and the next, harder part is tuning some parameters of the kernel module. These things are usually part of my daily job, but because I don't use ZFS at work, it's like finding a fun new toy!

...and I understand the pain of using services that don't provide enough visibility into the underlying infrastructure. That's usually how they make money. Maybe that's why I never liked running things in the cloud... 🤣 Bones of my own crypt, I guess! But in that regard, I am happy to share some tricks for virtual providers.

In simple words, it's about using all the "VPS package" resources in the most intelligent way possible, with the right software.

Yeah, that's the downside of cloud hosting. You never really know for sure what you will get, and resources may vary widely over time. It has happened to me a few times already that services kept stopping randomly, probably because resources were being drawn away from my particular cloud instance.

Additionally, I've been having some weird networking / routing issues on my snapshot server, which caused very slow downloads for some clients. But all in all, I am quite happy with the result / performance.

Until now I've never really looked into ZFS, as the speed of my servers is sufficient for now (using NVMe drives).

My new HF26 HIVE node will migrate to ZFS (from LVM+XFS). My raw benchmarks indicated at least 4x the performance while synchronizing the chain (when the read cache is large enough for the workload), compared with LVM+XFS (ext4 is even worse). And this is on DDR4, so on DDR5 things are going to be even better.

Overall, I'm feeling very positive about it... and I'm not even using the latest ZFS version yet (I'm using what ships with Ubuntu, which is v0.8.3), but it is so easy to manage and has so many features that it becomes a no-brainer.

I had started a post series on the @atexoras account and then went so far exploring that none of what I had planned made sense anymore LOL... typical of how I explore. It will come later, when I have more time.

You might like talking to @deathwing, he's doing testing with ZFS as well.

👍

Yeah, I hope I can switch mine to IPv6 soon too; I plan to do so in the coming weeks. IPv4 addresses are not much of an issue for me right now, as you can still get them easily with Hetzner ;)

I also observed that behaviour. At the start I was running the node on a machine with 64GB, which really did speed up the process, as you said. But I later switched to smaller (cloud) servers to separate multiple applications. I also think that for most witnesses cost is the biggest factor in choosing a smaller server, as otherwise they won't get much out of it. Yours really is a killer machine :D

We should really try to "kill" that dependency, or at least distribute it better between multiple nodes. The engine team's node has really been unstable over the last few weeks.

I will add https://git.dbuidl.com/rishi556/EngineSpeed to the links section of the monitoring site if that's okay with you, as I had already forgotten that you created that script ;)

Docker stuff will help a LOT on bigger systems. But it's not really needed yet, unless people want to bundle everything on the same big machine (which has benefits); for cloud stuff, yes, small independent machines usually work best.

My main RPC node has 32 gigs, and my witness has 8 gigs. That 128 gig machine is used for testing stuff, so it's beefier than normal.

I'd definitely like to see other nodes get used more and more. It's sad to see so much of the ecosystem break when one node breaks because the devs rely solely on that one.

I don't mind either way. I mention it at the end of my setup guide, but it's really set up to work the way I do things, and I haven't tested it with other machine configurations at all. If I ever clean it up to work better cross-platform, I'll let you know.

App devs should at least start using the node balancing endpoint instead of relying on the engine node itself... But I guess many simply don't know it exists.
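Besides a balancing endpoint, an app can also spread its dependency with simple client-side failover across several engine nodes (a different technique from server-side balancing). A minimal sketch, assuming each node accepts JSON-RPC 2.0 POSTs on its `/blockchain` path and supports the `getStatus` method. The node URLs are placeholders, and `fetchFn` is injected so the logic can be tested offline; it defaults to the global `fetch` of Node 18+.

```javascript
// Sketch of client-side failover between Hive-Engine RPC nodes.
// Assumptions: nodes accept JSON-RPC 2.0 POSTs on /blockchain and support
// the getStatus method. The URLs below are placeholders, not real nodes.
const NODES = [
  "https://engine-node-a.example.com",
  "https://engine-node-b.example.com",
];

async function getStatusWithFailover(nodes, fetchFn = globalThis.fetch, timeoutMs = 5000) {
  const errors = [];
  for (const node of nodes) {
    // Abort a request that hangs longer than timeoutMs.
    const controller = new AbortController();
    const timer = setTimeout(() => controller.abort(), timeoutMs);
    try {
      const res = await fetchFn(`${node}/blockchain`, {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify({ jsonrpc: "2.0", id: 1, method: "getStatus", params: {} }),
        signal: controller.signal,
      });
      if (!res.ok) throw new Error(`HTTP ${res.status}`);
      const data = await res.json();
      if (data.error || data.result == null) throw new Error("no result");
      return { node, status: data.result }; // first healthy node wins
    } catch (err) {
      errors.push(`${node}: ${err.message}`); // remember why, then try the next node
    } finally {
      clearTimeout(timer);
    }
  }
  throw new Error(`all nodes failed:\n${errors.join("\n")}`);
}
```

Usage would be something like `getStatusWithFailover(NODES).then(({ node }) => console.log("using", node))`. Trying nodes in a fixed order keeps the sketch simple; a real app might shuffle the list or remember the last healthy node.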

Yea, letting more users know that it exists is key. I really don't think most users know of any node besides the one run by the engine team.

And getting witness/RPC nodes more diversified is key too. Below is the list of where people hosted their nodes the last time I ran the check. I know things have changed (we lost Choopa, and Privex gained one more node), but the over-reliance on Hetzner is insane. It basically comes down to cost: nowhere else can you find servers as cheap as Hetzner's (other than Contabo really, but they don't have the best servers) without sacrificing performance, and sadly that means everyone flocks to them. It's 21 not on Hetzner and 20 on Hetzner... That split should say a lot.

| Hosting provider | Nodes |
|---|---|
| Hetzner Online GmbH | 20 |
| Contabo GmbH | 4 |
| Vodafone NZ Ltd. | 2 |
| RYAMER | 2 |
| UUNET | 1 |
| Converge ICT Solutions Inc. | 1 |
| CENTURYLINK-US-LEGACY-QWEST | 1 |
| AMAZON-AES | 1 |
| DIGITALOCEAN-ASN | 1 |
| BTS Communications BD ltd | 1 |
| Deutsche Glasfaser Wholesale GmbH | 1 |
| CONTABO | 1 |
| NOCIX | 1 |
| Linode, LLC | 1 |
| AS-CHOOPA | 1 |
| UAB Interneto vizija | 1 |
| Privex Inc. | 1 |

Yeah, it really comes down to cost, I would say, and to how easy it is to set up a new server on one of the big cloud providers. Also, a lot of witnesses just don't have stable / fast connections at home or don't want to "expose" their home network.

But I agree that we should start thinking about alternatives, as some cloud providers have recently started an offensive against crypto. I don't really think that will affect us any time soon, if at all, but it's better to have an alternative ready, and it also makes Hive / Engine truly decentralized.

I have seen a lot of people try to run things from home, and it's really hard to see some of them drop out when requirements increase, either losing interest or not being able to sustain the effort for beefier resources. Security can be overwhelming too, but it's manageable if one knows how to isolate things.

I will keep showing proof that decentralization can be achieved from home =) It's not easy, but for some people, infrastructure can be learned!

To be fair, a good streaming PC can already do a lot in terms of running blockchain nodes, and so many people have one without realizing it.

All it takes is memory (for the ultimate high-performance blockchains or DBs)... and I would say not right away next year with DDR5, but later in 2023, when the new chips become available and the amazing AMD CPUs (Ryzen and the like) jump to more than 128GB of max RAM, it's going to be VERY, very interesting for anyone with a gaming PC, for example.

But apart from that, with things like Docker, this might become something anyone can use.

For sure, but for many people (including me) connectivity is the biggest obstacle; see my other comment.

Yeah...

Shame we can't trade RAM for Gbps... I could use that market.

Maybe I could set one up at my workplace, as I would have a great, stable connection there. But I'm not sure they would want that.

For me, a really unstable / slow home connection is why I haven't set up a witness server at home yet. Additionally, my power goes out every now and then, as the building I live in is over 100 years old, and I guess they should be renewing the infrastructure :D

I can't comment on this one too much without getting hammered by everyone, LOL =) Currently, I am very lucky... 😁 ask @rishi556

Although my fricking upgrade has been an overwhelming catastrophe... I guess I chose the wrong company... I am learning from all this, and I will probably have to file a complaint against the current "good service" provider and find a new one. But the idea is to go to 2Gbps (up & down). It was planned for later in the year... but the prices are irresistible! And all I need is more upload...

I'm flying to New Zealand and running a cable from your house to mine back home so I can get better internet 🤣.

Haha 🤣

😅

Wow, that sounds nice. Using coaxial cables for internet is the only option at my home unfortunately 🙁

Coaxial can actually reach 1Gbps (my current max download), but companies dislike it because of the price of copper. Fibre has become the new standard.

Oh, I didn't know that. But the speed is only theoretical, as the coaxial cable coming into the house is shared between all flats (it is split where it enters the building).