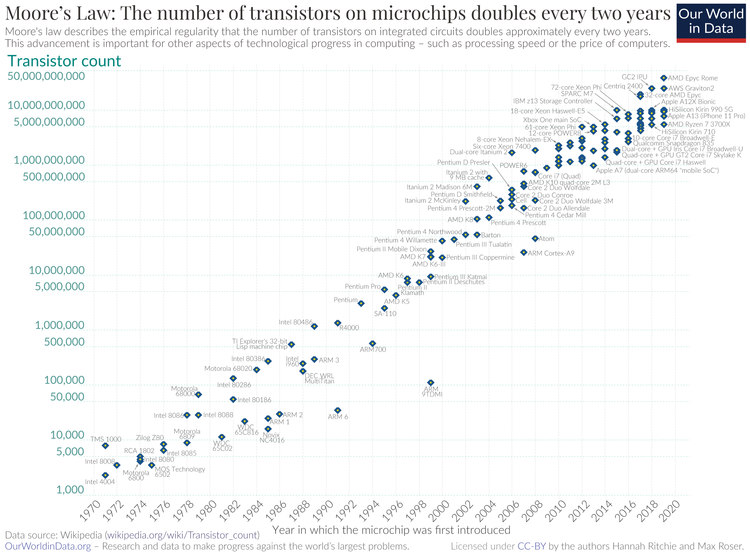

A semi-log plot of transistor counts for microprocessors. (image source)

In 1965, Gordon Moore, an engineer who later co-founded Intel, predicted that the number of components in an integrated circuit would double every year. Moore's prediction defined the future of technology. By 1975, about 65,000 components were packed into an integrated circuit, and Moore's prediction was verified. Moore then revised his statement: the number of transistors on a chip would double every two years. This is known as Moore's Law.
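The doubling rule can be written as count = initial × 2^(years / doubling period). Here is a minimal Python sketch of that arithmetic. The function name `projected_count` is made up for illustration, and the starting value of 64 components in 1965 is an assumption that does not come from this post.

```python
def projected_count(initial_count: float, years: float, doubling_period_years: float) -> float:
    """Return the component count after `years`, doubling once per period."""
    return initial_count * 2 ** (years / doubling_period_years)


# Assumed starting point (not stated in the post): about 64 components in 1965.
start_1965 = 64

# Doubling every year from 1965 to 1975 (Moore's original prediction).
print(projected_count(start_1965, 10, 1))

# Doubling every two years over the same decade (the revised rate).
print(projected_count(start_1965, 10, 2))
```

Under that assumed starting point, ten annual doublings land close to the 65,000 components mentioned above, while the two-year rate grows far more slowly over the same decade.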

Moore's prediction inspired chipmakers to keep up with the doubling rate of transistors in a single integrated circuit. They built impressive chips that outperformed each previous generation. Transistors became smaller and more compact as cutting-edge manufacturing processes evolved. For the last 40 years, chip manufacturers have managed to live up to Moore's Law. To date, there are over 10 billion transistors in a single chip, compared to 10 transistors in 1960.
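As a rough sanity check, the two figures above can be turned into an implied doubling period. This is only a sketch: the helper name `implied_doubling_period` is hypothetical, and the end year of 2020 is an assumption, since the post only says "to date".

```python
import math


def implied_doubling_period(start_count: float, end_count: float, years: float) -> float:
    """Return the average number of years per doubling between two counts."""
    doublings = math.log2(end_count / start_count)
    return years / doublings


# Figures from the text: 10 transistors in 1960, over 10 billion "to date".
# The end year 2020 is an assumption made for this example.
period = implied_doubling_period(10, 10e9, 2020 - 1960)
print(f"about {period:.1f} years per doubling")
```

With these inputs the result comes out at roughly two years per doubling, which is consistent with the revised form of Moore's Law.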

More powerful chips are created every year as we need more computing power. The doubling of transistors in a single chip slowed down in the mid-2000s, when new problems emerged as transistors became smaller and denser. By 2020, Moore's Law had slowed down further, with transistor counts forecast to double only every 20 years. We are approaching the end of Moore's Law.

The Shrinking Problem

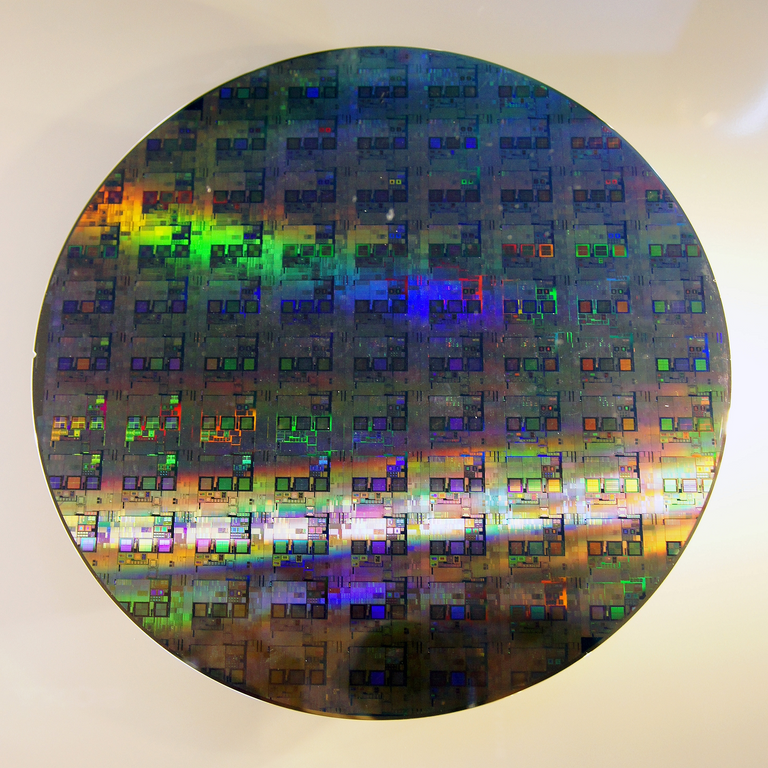

A 12 inch silicon wafer. (image source)

Chips are manufactured by printing the components using lithography, and packing in more transistors requires shrinking them. The first transistors were printed with lines 80 microns wide. Moore himself foresaw that cramming more transistors onto a single chip would come to an end, as a slow decline rather than a sudden death. In 1999, an Intel researcher predicted that producing chips smaller than 100 nm would become a difficult task by 2005. Shrinking transistors is so hard that Intel, the industry leader, hit several bumps in moving from 10 nm chips to 7 nm. In recent years, chipmakers have aimed to reduce transistor size from 7 nm to 5 nm, and from 5 nm to 3 nm.

There are key problems to address when reducing the size of transistors. At 10 nm, transistors become susceptible to leakage: the channel can no longer fully contain the electrical current. This causes chips to heat up and wear out over time. The integrity of a chip is compromised as billions of transistors heat up. Lowering the processor voltage helps prevent overheating, but it limits the processing performance of the chip. We are projected to reach the thermal ceiling by 2024 (according to the International Technology Roadmap for Semiconductors). There is a limit to how much heat can be removed per unit of surface area. As chips get smaller and denser, it eventually becomes impossible to cool them down.

Intel and other chipmakers can still add more components to our chips, but the era of ever-cheaper chips is ending. Producing smaller and denser chips costs far more than producing the previous generation. Taiwan Semiconductor Manufacturing Co. (TSMC) and Samsung took another step along Moore's Law when they successfully achieved a 5 nm chip manufacturing process. The chip is as much as 2,000 times smaller than the previous generation. Shrinking chips to this size requires advanced fabs (chip manufacturing plants). TSMC invests over $10 billion per year to operate its fabs. Samsung projects around the same level of investment.

Previously, cramming in more transistors came with a reasonable price increase. At 65 nm, chipmakers observed that the economic gains of shrinking transistors were slipping away. At 14 nm, shrinking the transistors required additional manufacturing steps, which made chips cost a lot more to produce. In 2015, the International Technology Roadmap for Semiconductors (ITRS) projected that transistors would stop shrinking by 2021. From 2021 onward, chip manufacturers would look for ways to improve chip performance without shrinking the transistor. The report suggests that it is no longer economical for companies to reduce the size of microprocessors.

Beyond Moore's Law

The end of Moore’s Law is not the end of progress. Recall that Moore's Law projects the doubling of transistor density in a single integrated circuit, not the speed or performance of the chip itself. Matching the pace predicted by Moore's Law has become so difficult and expensive that chipmakers have abandoned it. They are finding cost-effective ways to boost performance without relying on shrinking transistors. We have seen a decrease in research and development of cutting-edge chip manufacturing over the years. Chipmakers are now focused on specialized chips that are designed to accelerate computation. The age of Moore’s Law is nearly over.

We are in a new era of innovation, where computing may no longer advance at an exponential rate but at a steady pace. New technologies are emerging that give us new ways to process information. Here are some of the technologies that could revolutionize the world of computing when Moore’s Law takes its curtain call.

Memristor

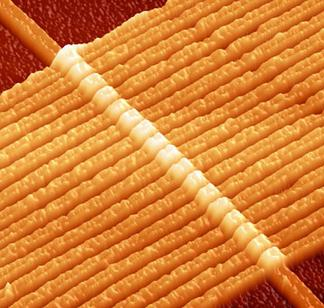

Oxygen-depleted titanium dioxide memristor. (image source)

The memristor is a circuit element predicted in 1971 by Leon Chua at UC Berkeley. It remained hypothetical until 2008, when scientists from Hewlett-Packard announced a working device. This component remembers the electrical current that has flowed through it, and its resistance changes according to that history.

The memristor is a viable candidate to replace the transistor. It can be packed at higher densities, which could enable faster processors or higher memory capacity. Many have touted the memristor as an ideal component for modelling neural networks and machine learning. In 2017, the first commercial memristor was released, but critics argue over whether it is the same device as the hypothesized one. To date, manufacturing memristors is difficult but viable.

Quantum Computing

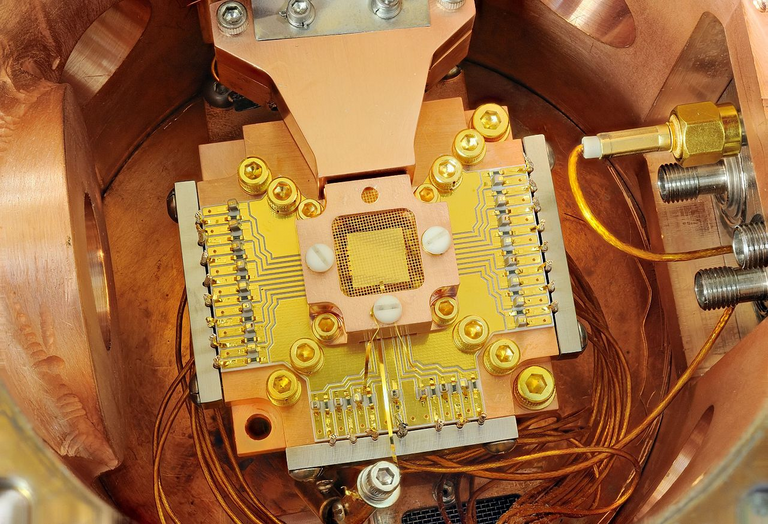

NIST quantum computer. (image source)

Quantum computing promises to surpass the conventional computing power of silicon. Regular chips rely on bits (the basic unit of information), while quantum computers rely on qubits. A bit can be either 0 or 1, but a qubit can exist in a superposition of both values (0 and 1) at the same time. As an analogy, picture yourself sitting at both ends of a couch at the same time. For certain problems, this allows information to be processed faster and with less energy.
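To make the bit versus qubit distinction a little more concrete, here is a small Python sketch of the standard textbook model, in which a single qubit is described by two amplitudes, alpha and beta, and a measurement reads 0 with probability |alpha|^2 and 1 with probability |beta|^2. The function names are made up for this example, and this is only a simplified model on an ordinary computer, not how real quantum hardware is programmed.

```python
import math


def measurement_probabilities(alpha: complex, beta: complex) -> tuple:
    """Return (P(read 0), P(read 1)) for the qubit state alpha|0> + beta|1>."""
    p0, p1 = abs(alpha) ** 2, abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    return p0, p1


def amplitudes_needed(num_qubits: int) -> int:
    """Number of amplitudes needed to describe `num_qubits` qubits."""
    return 2 ** num_qubits


# A classical bit set to 0 corresponds to alpha = 1, beta = 0.
print(measurement_probabilities(1, 0))

# An equal superposition: a measurement reads 0 or 1 with equal probability.
amp = 1 / math.sqrt(2)
print(measurement_probabilities(amp, amp))

# The description grows exponentially with the number of qubits.
print(amplitudes_needed(10))
```

The last line hints at why simulating many qubits on regular chips gets expensive quickly: each extra qubit doubles the number of amplitudes to keep track of.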

In October 2019, Google researchers claimed that their quantum computer solved, in 3 minutes and 20 seconds, a problem that would take a supercomputer 10,000 years to solve. Scientists likened Google’s feat to the Wright brothers' first flight. Some experts question the significance of Google’s quantum supremacy experiment, and all stress the importance of quantum error correction. The announcement may be debatable, but it proves that something is possible. Greg Kuperberg, a UC Davis mathematician who specializes in quantum computing, was not impressed by Google's announcement, but he stated, "I consider it as a relevant benchmark".

The early leaders in quantum computing are Google, Rigetti, and IBM. Other big names in the tech industry, such as Microsoft, are jockeying for position with investments to secure quantum supremacy. Each follows a different design for its quantum computers, and the race to quantum supremacy continues. The initial results certainly look promising, and we can foresee a new era of computing.

Final thoughts

The end of Moore's Law is inevitable, but progress continues. For the last 50 years, Moore's Law has dictated the trajectory of technology and progress itself. We are now at the edge of Moore's Law, where shrinking transistors is becoming difficult and expensive. We can still make transistors smaller than they are today. Eventually, we will hit a hard limit on how much we can shrink them. The end of Moore's Law opens up a new era of computing.

References

Moore’s Law Is Ending. Here’s What That Means for Investors and the Economy.

After Moore’s Law: How Will We Know How Much Faster Computers Can Go?

The Decline of Moore’s Law and the Rise of the Hardware Accelerator

Transistors will stop shrinking in 2021, but Moore’s law will live on

Beyond Moore's Law: Taking transistor arrays into the third dimension

Andy Gilmore, The biggest flipping challenge in quantum computing

Hello quantum world! Google publishes landmark quantum supremacy claim

Google Claims a Quantum Breakthrough That Could Change Computing

Great article! I have been following the slowdown and end of Moore's Law for the last decade. I maintain a shared Zotero library about it at https://www.zotero.org/groups/33380/fin_de_la_loi_de_moore/library. Your references will be nice additions to it, thanks for the update.

I also made a presentation in French about it 10 years ago: https://prezi.com/_2wcwscifh85/la-fin-dune-loi-de-linformatique-la-loi-de-moore/?present=1 at the time when I was considering shifting my research interests towards neuromorphic computing. I really should write a blog post about that!

The shared library is full of info on Moore's Law. I have also been following Moore's Law for years now. I am thrilled to see what its end will bring to technological advancement. The presentation you shared is compact and informative. Thank you for sharing it.

This is the first time I have heard of neuromorphic computing. It would be great if you wrote a blog post about it.