A large fraction of my work as a particle physicist concerns exploiting the results of the Large Hadron Collider (LHC) at CERN. In this post, I explain why this is important and how it can be done.

The Standard Model of particle physics explains most high-energy physics data. That is a fact. However, it suffers from various conceptual issues and practical limitations (see here for a short summary of those motivations). All of this leads physicists to think that the Standard Model is only the tip of a huge iceberg that explains how the universe works at the fundamental level.

The problem is that we have very little guidance on how to explore this iceberg and what it could be. In fact, there are thousands of possibilities, and the LHC at CERN aims to clarify the situation. However, for now, there is no sign of any new phenomenon in the data. We are thus in the dark!

This is where what I want to discuss today comes into play! It is related to two of my recent research articles, available here and there for those interested in going beyond this post.

[image credits: open photos @ CERN]

Searching for new phenomena at the LHC

As said above, we have today no compelling evidence for the existence of something unexpected. All LHC collaborations try hard to discover a sign of a phenomenon beyond the Standard Model, but without any success so far. As an appetiser, let me briefly discuss how this hunt for new phenomena works in practice.

To design a search, we start by considering how a specific new phenomenon should materialise in data. The choice of the phenomenon itself is generally motivated by concrete theories beyond the Standard Model. In this context, the exact details of what should be seen depend on the theory parameters, and those parameters can in principle take any value. This yields slight differences in the expected observations, but those differences are irrelevant for the design of the search itself.

From there, one implements an analysis that separates the considered new phenomenon from the Standard Model background. If something is found in data, we may have a first sign of the path to follow for a big discovery. If not, the theory we started from gets constrained: its parameters can no longer take arbitrary values.

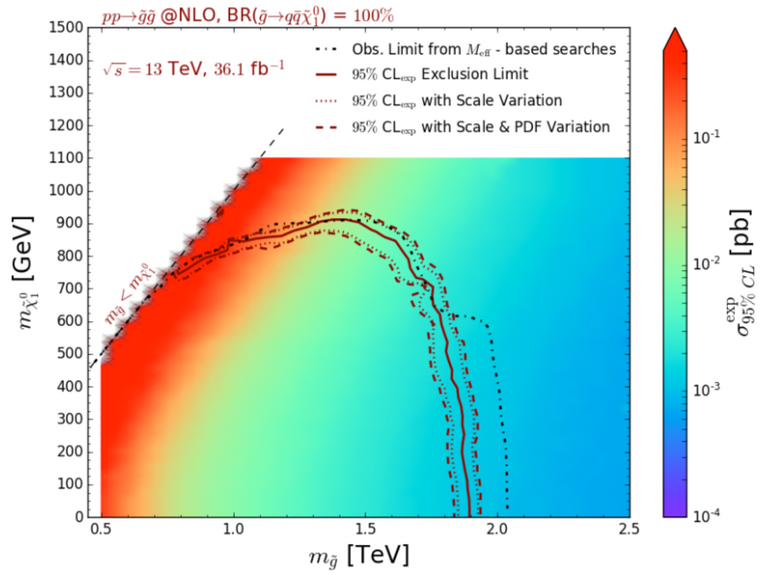

An example is shown below, taken from this research article of mine.

[Credits: arxiv]

The theoretical framework we consider contains two parameters that can take any positive value: the masses of two new particles. Those masses correspond to the x and y axes of the above figure. Moreover, each coloured point corresponds to a theoretically valid mass spectrum, or parameter configuration.

Any configuration lying in the lower left corner, inside the red contour, is excluded by data. In other words, if this were the path chosen by nature for our universe, we should already have seen some related new phenomena. As this is not the case, the configuration is excluded.
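To make the exclusion statement concrete, here is a minimal sketch of how a single parameter point can be tested in the simplest possible case: a one-bin counting experiment with a known expected background `b` and an observed event count `n_obs`. It uses the CLs prescription commonly adopted by the LHC collaborations. The function names, the toy mass points and their predicted signal yields are hypothetical and only serve as an illustration; real analyses combine many bins and include systematic uncertainties.

```python
import math


def poisson_cdf(n: int, mu: float) -> float:
    """P(N <= n) for a Poisson variable of mean mu (adequate for modest mu)."""
    term = math.exp(-mu)
    total = term
    for k in range(1, n + 1):
        term *= mu / k
        total += term
    return total


def cls(s: float, b: float, n_obs: int) -> float:
    """CLs = CL_{s+b} / CL_b for a single-bin counting experiment."""
    return poisson_cdf(n_obs, s + b) / poisson_cdf(n_obs, b)


def upper_limit(b: float, n_obs: int, alpha: float = 0.05) -> float:
    """Signal yield above which CLs < alpha, i.e. excluded at (1 - alpha) CL."""
    lo, hi = 0.0, 1.0
    while cls(hi, b, n_obs) >= alpha:  # grow the bracket until hi is excluded
        hi *= 2.0
    for _ in range(100):  # bisection: CLs decreases monotonically with s
        mid = 0.5 * (lo + hi)
        if cls(mid, b, n_obs) < alpha:
            hi = mid
        else:
            lo = mid
    return hi


# Hypothetical predicted signal yields for a few (m1, m2) mass points in GeV.
predicted = {(500, 100): 12.0, (800, 200): 4.5, (1200, 300): 0.8}
limit = upper_limit(b=3.0, n_obs=3)
excluded = {point: s > limit for point, s in predicted.items()}
print(f"95% CL limit: {limit:.2f} signal events", excluded)
```

Repeating this test for every point of the mass plane and drawing the boundary between excluded and allowed points is what produces a contour like the red one in the figure.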

Considering the entire experimental programme targeting new phenomena, we actually iterate this procedure over a huge set of signatures originating from many theories. The catalogue has been designed to be broad enough for any model to fit in: the new phenomena it predicts are, in principle (with rare exceptions), covered.

As the LHC has not found anything, theories beyond the Standard Model are getting severely constrained (without actually being excluded). However, experimental publications always focus on deriving constraints on specific models, which are often chosen among the most popular ones…

Re-interpreting the results

This is where my work comes into play: there are many theories that deserve to be tested against data, and our experimental colleagues do not have enough resources to test them all.

The idea we had half a decade ago was to develop an open-source platform where anyone could inject his/her own signal and verify whether it is compatible with the non-observation of anything new in the data. In this way, instead of asking our experimental colleagues to work more, we could come to them with only the most relevant models, after having tested them ourselves.

[Credits: CERN]

However, a framework developed by theorists necessarily differs from the full experimental software (which is not public). For instance, we need to re-implement the experimental analyses ourselves, and understanding the related documentation is sometimes (well… often) a nightmare. It sometimes takes ages to get the information right.

In addition, the detector simulation is handled differently in our framework. Instead of the huge (and not publicly available) machinery associated with an LHC detector, we use a lightweight open-source option. We therefore need less than a second to simulate a single collision, instead of minutes.

As a consequence, it is important to **carefully validate** the re-implementation of each analysis in the platform. This takes time (often months), but it is mandatory, as we must be sure that any prediction made with the platform is a good-enough approximation of what would have happened in real life.
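To give a flavour of what a lightweight detector simulation can look like, here is a minimal sketch that replaces the full detector machinery by two ingredients: a reconstruction efficiency and a Gaussian smearing of each object's energy following the usual calorimeter parametrisation σ/E = a/√E ⊕ c. The parameter values (a = 0.5, c = 0.03, a 95% efficiency) and the function names are assumptions chosen for illustration, not those of any real detector or of the platform discussed here.

```python
import math
import random
from typing import List, Optional


def relative_resolution(e_gev: float, a: float = 0.5, c: float = 0.03) -> float:
    """sigma/E = a/sqrt(E) (+) c, the two terms added in quadrature (E in GeV)."""
    return math.hypot(a / math.sqrt(e_gev), c)


def simulate_detector(true_energies: List[float], efficiency: float = 0.95,
                      rng: Optional[random.Random] = None) -> List[float]:
    """Return reconstructed energies: some objects are lost, the rest are smeared."""
    rng = rng or random.Random()
    reconstructed = []
    for e in true_energies:
        if rng.random() >= efficiency:  # object not reconstructed
            continue
        sigma = e * relative_resolution(e)
        reconstructed.append(max(0.0, rng.gauss(e, sigma)))  # no negative energies
    return reconstructed


events = simulate_detector([100.0] * 10_000, rng=random.Random(42))
print(len(events), sum(events) / len(events))
```

Parametrised shortcuts of this kind are what make a single simulated collision cost a fraction of a second, and also why each re-implemented analysis must be validated against the official results.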

Thanks to this platform, it becomes possible to use an analysis targeting a signature of a model ‘A’ to constrain a model ‘B’ featuring a similar signature without having to beg our experimental colleagues to do the work for us.
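The reinterpretation step can be sketched as follows, assuming the experiment publishes, for each signal region, the observed and expected 95% CL upper limits on the number of signal events, and that the re-implemented analysis provides the selection efficiency of model 'B' in that region. Selecting the region with the best *expected* sensitivity before comparing with the observed limit is a common recasting convention. The class, the function names and all numbers below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SignalRegion:
    name: str
    efficiency: float  # fraction of model-B events passing the re-implemented cuts
    s95_obs: float     # published observed 95% CL limit on signal events
    s95_exp: float     # published expected 95% CL limit on signal events


def recast(xsec_fb: float, lumi_ifb: float,
           regions: List[SignalRegion]) -> Tuple[str, bool]:
    """Return the most sensitive region and whether model B is excluded there."""
    def signal_events(r: SignalRegion) -> float:
        return xsec_fb * lumi_ifb * r.efficiency

    best = max(regions, key=lambda r: signal_events(r) / r.s95_exp)
    return best.name, signal_events(best) > best.s95_obs


regions = [SignalRegion("SR-A", 0.02, 8.0, 10.0),
           SignalRegion("SR-B", 0.005, 3.0, 4.0)]
print(recast(xsec_fb=10.0, lumi_ifb=139.0, regions=regions))
print(recast(xsec_fb=0.1, lumi_ifb=139.0, regions=regions))
```

Choosing the region by expected rather than observed sensitivity avoids systematically picking whichever region happens to show a downward fluctuation in the data.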

It also helps us assess whether every model is correctly covered by the ongoing analyses. In other words, we can draw conclusions about the (non-)existence of loopholes in the LHC search programme, and do whatever is needed to fill these loopholes if necessary.
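Checking for loopholes then amounts to asking, for each parameter point, whether *any* analysis excludes it. In this toy sketch, each analysis is represented by a hypothetical predicate over (m1, m2) mass points; the two rules below (a "monojet-like" search sensitive to compressed spectra and a "multijet-like" one sensitive to large mass splittings) are invented for illustration and are not taken from real searches.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Point = Tuple[float, float]  # (m1, m2) in GeV


def find_loopholes(points: Iterable[Point],
                   analyses: Dict[str, Callable[[Point], bool]]) -> List[Point]:
    """Return the points that no analysis excludes."""
    return [p for p in points
            if not any(excludes(p) for excludes in analyses.values())]


analyses = {
    # Hypothetical rule: light heavy particle with a compressed spectrum.
    "monojet-like": lambda p: p[0] < 1000 and p[0] - p[1] < 50,
    # Hypothetical rule: moderately light heavy particle with a large mass splitting.
    "multijet-like": lambda p: p[0] < 1500 and p[0] - p[1] > 200,
}
points = [(800.0, 780.0), (1200.0, 1100.0), (1000.0, 500.0)]
print(find_loopholes(points, analyses))
```

Here the intermediate mass splitting at (1200, 1100) GeV escapes both toy searches, which is the kind of gap one would then target with a dedicated analysis.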

Summary - searching for new phenomena at the LHC

The search for new phenomena beyond the Standard Model of particle physics plays a big role at the Large Hadron Collider at CERN. Our experimental colleagues are searching for signs of the unexpected through various signatures belonging to a vast catalogue. Unfortunately, all results are so far compatible with the Standard Model background, and there is no hint of anything new.

As a consequence, constraints are imposed on many theories of physics beyond the Standard Model. However, our experimental colleagues do not have the resources to constrain all potentially interesting theories. This is where my work comes in. We developed a platform that allows anyone to test, in an approximate but good-enough manner, whether a given signal could survive the absence of any sign of new physics in the data.

In this way, anyone, including theorists and even non-physicists, has a way to confront his/her favourite model with data, and come back to the experimental collaborations so that they can focus on the most interesting and intriguing findings!

I hope you have appreciated this small window on my work. Feel free to ask me anything!

PS: This article has been formatted for the STEMsocial front-end. Please see here for a better reading experience.

Hello @lemouth,

I understand from your article more clearly how what you do is 'theoretical' physics. All 'interesting' theories are possible, until the lack of data indicates that they really are not likely. You look for indications that something exists beyond the standard model, but failing to find anything where there should have been something, you move on to explore more likely theories.

If I call you a dreamer, that is not an insult. By dreamer I mean someone who looks beyond the known to the possible. Without such dreamers there is no advancement in human knowledge.

As I have said before, your work is very exciting and calls upon not only the greatest analytic skills but also upon great creativity.

Quite exciting. Thank you for explaining in a way that makes the purpose of your work, and even to some extent, the methodology understandable.

This is exactly the point. In order to exclude a theory, you need to exclude all possible choices for its parameters. And this is the complicated part: some combinations may be excluded, but excluding all of them is a much harder task. However, when one is left with only extreme parameter choices, the theory may lose some of its nice properties... and then we give up and focus on more interesting frameworks.

Here, the point is that we can only push a bit further the frontier beyond which something may lie. We can only say that there is nothing up to a certain point, with new phenomena potentially lying beyond that point.

Yes, you can call me a dreamer. A dreamer happy to share some pieces of the dream! ^^

Thank you :)

I find it fascinating that a simulation can show the (non-)existence of unknown behaviors that can be verified experimentally afterward. Are you still working on the same simulation software (the one you created and taught maximouth how to use 2 years ago if I remember correctly)?

We always need theory predictions to compare with data if we want to draw conclusions about the potential existence of new phenomena (in one way or the other). There are two ways to get there:

Both options are pursued, but I develop tools for the second one.

Yep, still the same tool, and we are still improving it. I am planning to submit a new article on Monday (the day after tomorrow) in which we augment the tool with a novel lightweight way to simulate detector effects. We gain 30%-50% in speed and need 100 times less disk space. I am quite happy with it (I will probably write a blog post on that... at some point :) ).

Speaking of maximouth: I am now teaching him particle physics (adapted for a 9-year-old child ;) ). I may share the material with STEMsocial... one day (after translating it from French to English ;) ).

So...how does a phenomenon eventually help explain how the universe works?

I wrote it in one sentence to keep it short, but it deserves paragraphs of explanation. Let me try a middle ground (we will always have time later for paragraphs).

We have a lot of theoretical frameworks proposing how to extend the Standard Model (and thus explaining how the universe works). All these frameworks predict new phenomena beyond the Standard Model. Therefore, observing some new phenomena, while not observing others, will help us clarify which options remain possible and which do not.

Does that clarify things?

Any hints of dark matter? There has been no new physics reported by the LHC since the Higgs boson. Shouldn't this point research towards alternative models to explain the gravitational effects attributed to that "dark matter" (not questioning the Standard Model, of course)? As far as we know, this is the only explanation for the cohesion of galaxies. Or could that cohesion be explained by a bad estimation of the weight of black holes? I never understood how we can be so accurate in that estimation.

I'm only curious, because I'm aware that I have a very basic (almost Neanderthal-ish) understanding of physics 😅.

Only indirect hints. We are still lacking direct evidence for it. While dark matter has many nice advantages on the cosmology side, alternative options are still allowed (e.g. modified gravity). For that reason, you have researchers working on dark matter (for instance myself) and others working on other paradigms. We need to be pragmatic and cover everything.

I am not sure I get what you mean. Could you please specify? Dark matter is needed to explain the formation of galaxies in the Standard Model of cosmology. In addition, dark matter could be made of black holes too.

I know I didn't answer the question, but I didn't get it exactly. Feel free to come back to me and I will clarify anything needed.

Sorry, maybe I didn't explain myself properly. As I said, my knowledge about the subject is very limited, as is my English, so it is a double effort for me 🙂.

From what I understood from my previous readings about dark matter, it was needed to add enough mass to galaxies to explain why they don't break apart because of the forces due to their own rotational motion. (Maybe I misunderstood this.)

What I didn't know is that it is also needed to explain their formation, which is very interesting too. From what I understand from your reply, there is no sure measurement of the weight of black holes (because dark matter could be part of them), and therefore I was wrong to assume that scientists have this problem "solved".

Many times, the scientific articles I read say, for example, that this black hole has 20 times the mass of the Sun and the like. They state it with so much conviction that I assumed they were sure about it, hence my confusion.

Thank you very much for your kind explanation.

No problem with the double effort. I will be patient, don't worry ^^

Dark matter was initially postulated to explain the motion of stars in galaxies, as well as the global motion of the galaxies themselves. Assuming our current theory of gravity, observations are only reproduced if we add something invisible (that exerts a gravitational pull): dark matter.
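The argument can be made quantitative with Newtonian gravity. For a star on a circular orbit of radius r with speed v, the mass enclosed inside its orbit is M(<r) = v² r / G. Observed rotation curves stay roughly flat (v about constant) far from galactic centres, so the enclosed mass must keep growing linearly with r even where little visible matter remains. A minimal sketch, assuming circular orbits and a spherically symmetric mass distribution; the 220 km/s speed and the radii are illustrative round numbers.

```python
G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30  # solar mass, kg
KPC = 3.086e19    # one kiloparsec, m


def enclosed_mass(v_kms: float, r_kpc: float) -> float:
    """Mass inside radius r (solar masses) for a circular speed v: M = v^2 r / G."""
    v = v_kms * 1e3
    r = r_kpc * KPC
    return v**2 * r / G / M_SUN


for r in (8.0, 16.0, 32.0):
    # A flat rotation curve (same speed at every radius) means M grows linearly with r.
    print(f"r = {r:4.0f} kpc -> M(<r) = {enclosed_mass(220.0, r):.2e} solar masses")
```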

Today, we know that dark matter is also needed to explain galaxy formation, the cosmic microwave background and gravitational lensing.

In short, many cosmological observations point towards its existence, and they all seem to agree with each other. The only missing link is the direct observation of dark matter on Earth.

The mass of a black hole (and not the weight) can be inferred from the motion of the objects lying around it. This is thus another story, different from dark matter. We indeed observe how objects are attracted by the black hole or orbit around it. From there, one can extract the black hole mass.
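For the simplest case of a body on a (nearly) Keplerian orbit, Kepler's third law gives the central mass directly from the orbit's semi-major axis a and period T: M = 4π² a³ / (G T²), neglecting the mass of the orbiting object. A minimal sketch; the function name and the illustrative inputs are assumptions.

```python
import math

G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30  # solar mass, kg
AU = 1.496e11     # astronomical unit, m
YEAR = 3.156e7    # one year, s


def central_mass(a_au: float, period_yr: float) -> float:
    """Central mass in solar masses from Kepler's third law (orbiter mass neglected)."""
    a = a_au * AU
    t = period_yr * YEAR
    return 4 * math.pi**2 * a**3 / (G * t**2) / M_SUN


print(central_mass(1.0, 1.0))      # Earth around the Sun: about 1 solar mass
print(central_mass(1000.0, 16.0))  # a star 1000 AU away with a 16-year period
```

In these units (a in AU, T in years) the formula reduces to M ≈ a³/T² solar masses, so the second, illustrative orbit points to a central object of a few million solar masses.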

What is more exciting: finding new phenomena you predicted, or finding existing phenomena you just didn't recognize or expect to find within the confines of that particular experiment?

This kind of physics is a little out of my league (I was good with high school stuff)... but an example would be the deep-sea thermal vents: we expected to find new life down there, but we didn't expect to find certain life we were already aware of. Both had fascinating implications.

Both are equally exciting for me. However, they lead to very different kinds of work.

In the first case (finding something that was predicted), the problem is to characterise the observations. In general, a plethora of configurations of a plethora of theoretical frameworks can explain almost everything. One then needs to design new observables so that we can start discriminating between options and get a clearer picture. Inverting the problem (going from the observations to the theory behind them) is a very complex and ill-defined problem. Which is great, and promises a lot of fun!

In the second case (finding the unexpected), the problem is to understand what we have. And then we need to think about novel theoretical options to explain that. The good news is that new ideas appear regularly, and physicists do not lack imagination. Again, a lot of fun! :)

This naturally gets to your conclusion: both have fascinating implications ^^

I knew I would find an example soon. They seem to have some theories as to what it is, but I am personally hoping it's aliens.

Naaaaaaah BBC quoted it wrong.... A dark matter experiment found something new, which is probably not dark matter (although myriads of dark matter explanations are probably on their way too).

I recommend reading the official press release of the Xenon1T experiment. The collaboration provides three explanations for the excess:

The excess seems to be most compatible with the latter. However, the significance of the other two is of the same order. In addition, many theory papers will appear soon; the first one was already announced this morning (axion-like dark matter).

I was not planning to write on that topic yet, but I actually could. I just have no time at the moment... I will see.

The official press release explains it much better.

I guess we will find out sooner or later which of the 3 explains it.

Although the tritium seems like the most boring, the large finite numbers and the ability to detect or calculate this always amaze me...talk about finding a needle in a haystack.

In fact, it is more about finding a needle in a needle stack! :)

I may eventually write about this news next week. I have read too many incorrect articles about it (even on Hive).

Hello @lemouth, this is an interesting article. Theoretical physics has always been far from reality, though this is not peculiar to physics alone. Models can easily be designed to explain what is expected to be seen. Yet there are times when we see a wide gap between a model and what is actually observed. At times, models may appear beautiful, but observing them in reality may be a constraint. I hope that your work of testing theory against data will help out.

Thanks for passing by!

I don't agree with this statement. Theoretical physics is everywhere, as physics by itself aims at explaining how our world (and our universe) works :)

Models must explain, but also predict and thus be testable. Otherwise, one just fits some signal (and this is not very exciting, IMO).

Unfortunately, for now, everything looks quite in agreement with the Standard Model. But we still have a few decades of data taking... This may (and hopefully will) change :)

I agree with you that theoretical physics is everywhere, like the mathematical models used to explain natural phenomena. Quantum field theory, classical field theory and many other frameworks have been used to construct models, but what I was trying to say is that theoretical physics is different from experimental physics, which employs experimental tools. I guess I did not put it the right way.

Theory and experiments are different. But they need to walk down the path together. One cannot exist without the other. That's my vision: what is the point of designing theories that can't be tested? And what is the point of taking measurements we have nothing to compare with? :)

Some tools differ between the two branches, but many are shared, especially in particle physics.

It must be fascinating to work on the cutting edge of known science pushing back the boundaries. Good luck with your quest.

Thanks for the nice words! I am indeed lucky to be able to say that my work is also my hobby.

Just for the record, this is not really my quest, but the quest of all of us, at least somehow :)

I like what CERN is doing; I followed the Higgs boson news closely when it broke.

I actually use BOINC and crunch for the LHC BOINC project, on all the different applications they currently host on it.

Nice! It is really cool to help the high-energy physics community as you can. Thanks for that.

I am also sure my experimental colleagues (they are those who really need a lot of computing power, much more than theorists) are all grateful!

Do you plan to use the BOINC platform (as other LHC projects do), or is there a reason (technical, ...) not to consider it?

As a theorist, I do not plan to use the BOINC platform. The reason is that neither I nor any collaboration of theorists needs as much computing power as our experimental colleagues do.

In general, we can do everything we want with small, very affordable clusters. If you take the simulation of a single LHC collision by the big experiments, it amounts to minutes of CPU time... for one single collision. And we need billions of these simulations. As you can see, that is where the need is :)
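A quick order-of-magnitude estimate shows why. Assuming, for illustration, 5 minutes of CPU time per fully simulated collision and one billion collisions, compared with about one second per collision for a lightweight simulation (both figures are assumptions standing in for the "minutes" and "less than a second" quoted in the post):

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def cpu_years(n_events: float, seconds_per_event: float) -> float:
    """Total single-core CPU time, in years, needed to simulate n_events collisions."""
    return n_events * seconds_per_event / SECONDS_PER_YEAR


full = cpu_years(1e9, 300.0)  # assumed 5 minutes per collision, full simulation
fast = cpu_years(1e9, 1.0)    # assumed 1 second per collision, lightweight option
print(f"full: {full:,.0f} CPU-years, lightweight: {fast:,.0f} CPU-years")
```

Roughly ten thousand CPU-years for the full simulation is the scale that calls for large computing grids and volunteer computing, whereas theorists' needs fit on small clusters.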

That's quite clear. Thank you for explaining.

You are very welcome!

Just wondering if you could shed some possible light of a subject I've been pondering. Could you share your ideas about quantum entanglement and its possible application in future communications and security? Many thanks.

Fundamental physics is a vast domain of research and what I work on is very far from quantum entanglement. I wrote this two years ago. You may find some information in it. However, I am afraid my knowledge on the topic is too limited to answer your queries. Apologies (but we can't know everything about everything ;) ).

I find it fascinating to read about your work, and while it took me a few reads to grasp what this is all about, it's nevertheless very, very cool to meet someone who actually works at CERN. I only hear of it occasionally in the news feed or in science fiction, but looking at what you do, man, does it give me a whole new understanding of things. With the recent events, has funding for CERN been constrained?

To be fully honest, I don't really work 'at' CERN. However, my research work definitely concerns what is going on at CERN (and I still go there once in a while to meet colleagues). I was physically on site on a daily basis (i.e. having my office at CERN) only in 2012-2014.

The budget situation for particle physics is always worrying, as fundamental science is not where money goes in the first place.

This being said, CERN is lucky to be funded by many countries (see for instance here for 2020), at a very small cost for European citizens themselves (I think it is about one cup of coffee per capita per year).

However, I have no idea how the current situation will impact the next round of funding, so I can't answer. If I find anything, I will let you know.

I'm eager to review the article. Thank you for sharing this wonderful

My pleasure! By the way, which article exactly do you want to review?

I haven't searched for CERN LHC for a while. Thanks for sharing.

You are welcome!

I am "regularly" blogging about this, sharing some fresh news with my own vision of them. However, please note the quotes, I don't have as much time as I would like to blog ;)

This was such an interesting read! Thank you!

You are welcome!

😊
