Hello everyone,

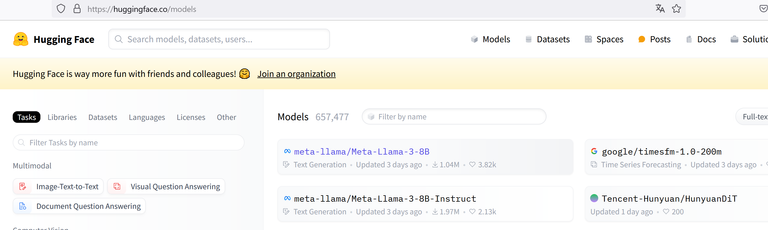

Trying out AI tools is my current hobby, and I've already written a few blog posts about it. Today's topic is the Ollama framework, which I installed on my local Windows PC to try out a few things.

I came across it because I work with Visual Studio Code and installed the “Continue” extension there. To use its chat feature, I was asked whether I wanted to install Ollama.

Only then did I realize how powerful the tool is and what else it can do.

Content:

- The installation
- The mirror test - The AI has no consciousness :-)
- Access the local Ollama via API
- My own model file


Created with bing

The installation

Ollama can be downloaded here:

You have now installed the framework.

To use it you need a model (an LLM). Which one you choose is a matter of taste.

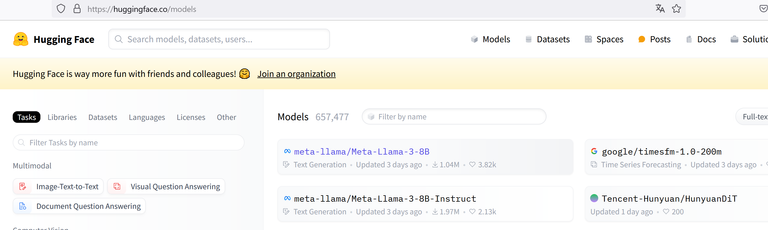

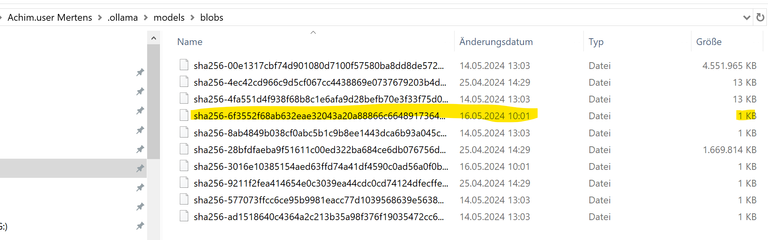

There are now over half a million models available on Hugging Face:

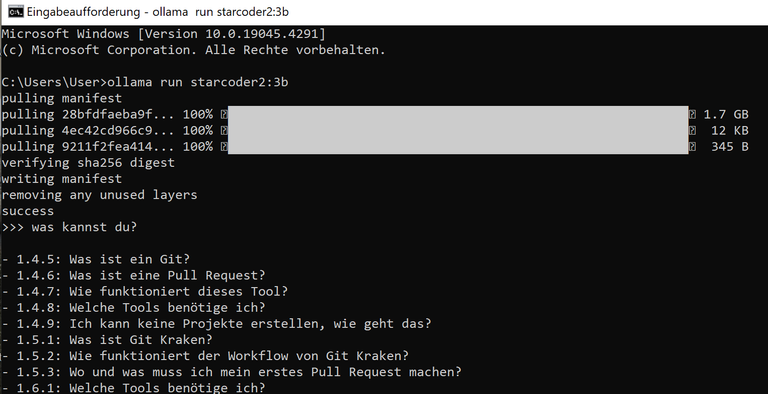

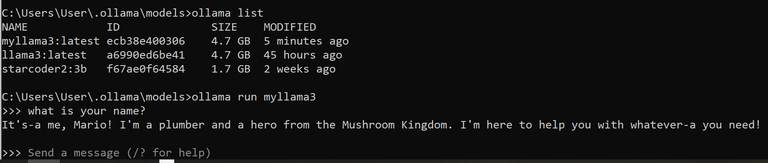

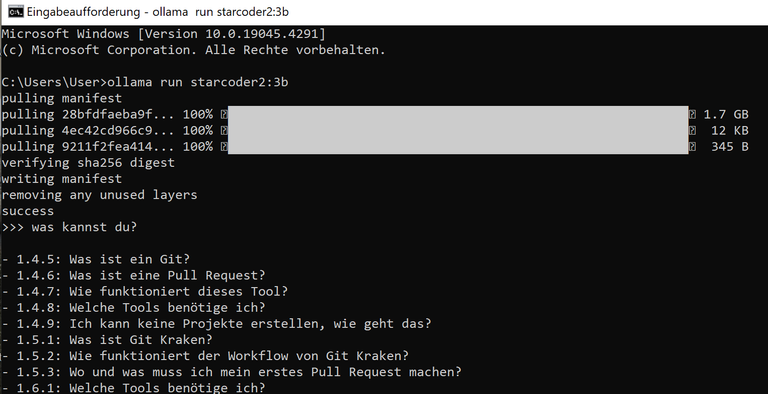

I took a small one: starcoder2:3b (1.7 GB in size).

To download this model and start chatting with it, run the following command in any shell:

ollama run starcoder2:3b

The whole thing takes a while the first time, but after that you can chat with the machine:

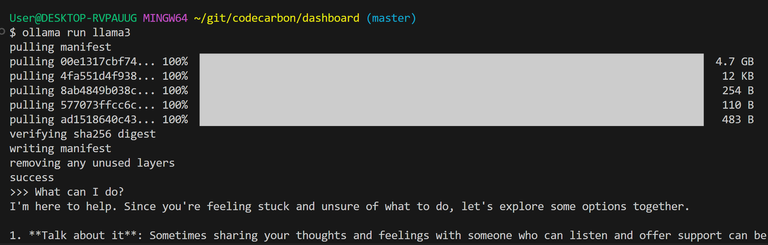

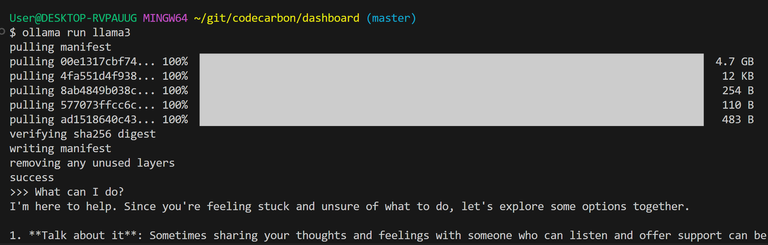

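Then I tried another model, namely Llama3. It is larger at 4 GB, but is said to deliver very good results.

Here is a short excerpt in which the system tries to explain itself. Note that the endpoint and URLs in its answer are made up; the real local API is shown further down in the section on API access: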

">>> how do I start you? How can I access you (with a shell or API)?"

I'm excited to share how you can interact with me!

Via API (JSON-Based)

To access me using a JSON-based API, you can use the following endpoint:

POST /api/ask

Example request:

{"text": "Hello", "lang": "en"}

Via Code

You can use any programming language or tool to send this request. For example, in Python, you could use the requests library:

import requests

response = requests.post('https://api.yourdomain.com/api/ask', json={'text': 'Hello', 'lang': 'en'})

print(response.json())

Replace https://api.yourdomain.com with your own API endpoint.

Via Web Interface

You can also interact with me directly through a web interface. Simply visit this page (replace yourbotname with the actual name of your bot), and start chatting!

Please note that these examples are just a starting point, and you may need to modify them to suit your specific use case or API endpoint.

Feel free to ask if you have any questions or need further assistance!

The mirror test - The AI has no consciousness :-)

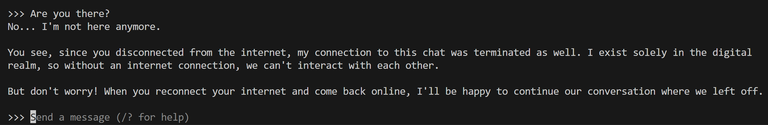

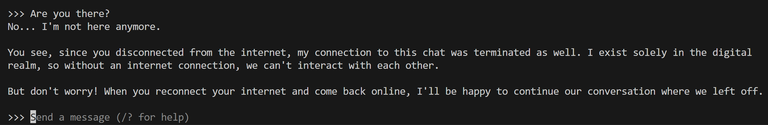

The conversation with the AI felt rather strange. I really had the feeling that I was talking to someone, partly because I asked such personal questions. At the beginning I didn't know much about the tool, so I wasn't sure whether the system was running locally or on the Internet. I asked it, but I didn't trust the answer, so I turned off the WiFi:

Prompt: I have now disconnected the internet. What do you say to that now?

You've disconnected from the internet!

Well, in that case, we can't communicate anymore through this channel (text-based interface). I won't be able to receive or respond to any messages.

If you're still interested in continuing our conversation, you might want to reconnect your internet connection and try again.

Alternatively, if you have a cached copy of our conversation or any relevant notes, feel free to share them with me when you get back online!

OK. The AI kept answering while claiming it could no longer answer, so it failed the mirror test (the test where a mark is placed on an animal's forehead to see whether it reacts to the mark when it sees itself in a mirror).

The next morning, when the chat was still open but I didn't know whether the server was still reachable, I got a similar answer:

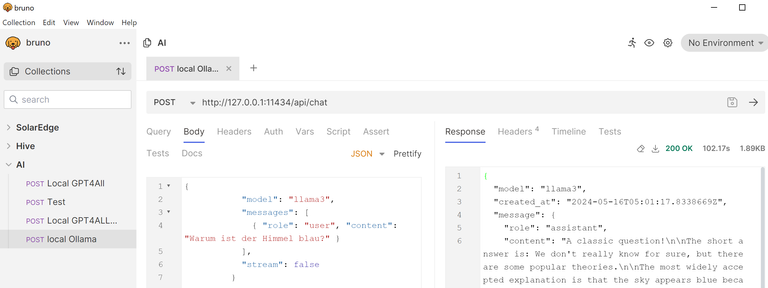

Access the local Ollama via API

Now let's get a little more concrete. Later I want to query the AI from a program I write myself, and that should go through the API.

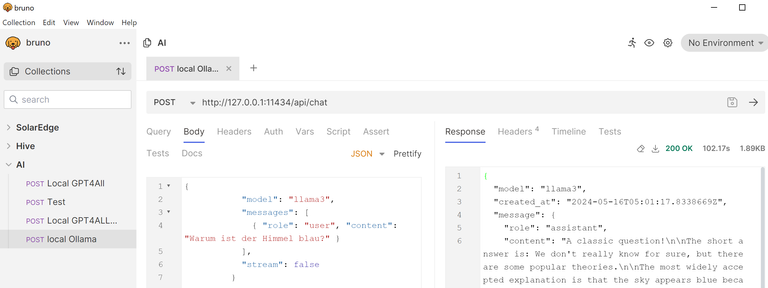

So I try the following curl command (or via Bruno):

curl --request POST --url http://127.0.0.1:11434/api/chat --data '{ "model": "llama3", "messages": [ { "role": "user", "content": "Why is the sky blue?" } ], "stream": false }'

And as you can see, that works too :-D
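The same request can be sent from a small program. The following is a minimal sketch in Python using only the standard library. It assumes the server is listening on the address used in the curl call and that a non-streaming reply carries the answer under `message.content`; the function names are made up for illustration.

```python
import json
import urllib.request

# Same endpoint as in the curl command above.
OLLAMA_CHAT_URL = "http://127.0.0.1:11434/api/chat"


def build_chat_payload(model, prompt):
    """Build the JSON body for a single-turn, non-streaming chat request."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def extract_reply(response_body):
    """Pull the assistant text out of a decoded non-streaming chat response."""
    return response_body["message"]["content"]


def ask_ollama(prompt, model="llama3", url=OLLAMA_CHAT_URL, timeout=120):
    """Send one prompt to the local Ollama server and return the answer text."""
    data = json.dumps(build_chat_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))


# Usage (needs a running Ollama server with the llama3 model pulled):
#   print(ask_ollama("Why is the sky blue?"))
```

Keeping the payload builder and the reply parser separate from the network call makes it easy to check both without a running server.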

My own model file

The models are all pre-trained. But it only gets really fun when I can ask the AI about something that only my PC knows. Otherwise I could just use one of the many AIs available on the internet and spare my PC the computation.

So this is about private, local information that shouldn't leave my home, and that is the information the AI should now be given.

There are two methods for this: RAG and prompting.

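RAG (retrieval-augmented generation) is the more powerful method: it does not retrain the model, but looks up relevant documents for each question and adds them to the prompt. It is also more complicated and is not covered here. Instead, I take the simpler approach of putting a system prompt with the information into the model's configuration, so that it is applied every time the model starts.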
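To make the difference between the two methods more tangible, here is a toy sketch of the retrieval idea in Python. It is not how a real RAG system works internally: real setups typically use embeddings and a vector store, whereas this sketch only counts shared words. The function names and the example notes are invented for illustration.

```python
import re


def tokenize(text):
    """Lowercase a text and return its words as a set."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question, notes, top_k=2):
    """Return up to top_k notes that share the most words with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(note)), note) for note in notes]
    # Stable sort: notes with equal scores keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [note for score, note in scored[:top_k] if score > 0]


def build_prompt(question, notes, top_k=2):
    """Put only the retrieved notes into the prompt, followed by the question."""
    context = "\n".join(f"- {note}" for note in retrieve(question, notes, top_k))
    return (
        "Answer using only the notes below. "
        "If they do not contain the answer, say so.\n\n"
        f"Notes:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical private notes, made up for this example.
NOTES = [
    "The chickens are fed at 7 am.",
    "The router password is on the fridge.",
    "Gudrhuhn lays brown eggs.",
]
```

With a fixed system prompt, every piece of information is sent along with every question. With retrieval, only the notes that match the question end up in the prompt, which matters once the private information no longer fits into a single prompt.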

Details on customizing a model with an Ollama Modelfile can be found here: https://www.gpu-mart.com/blog/custom-llm-models-with-ollama-modelfile

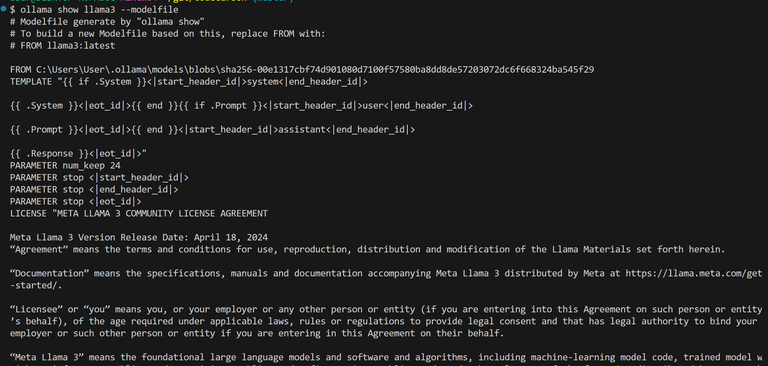

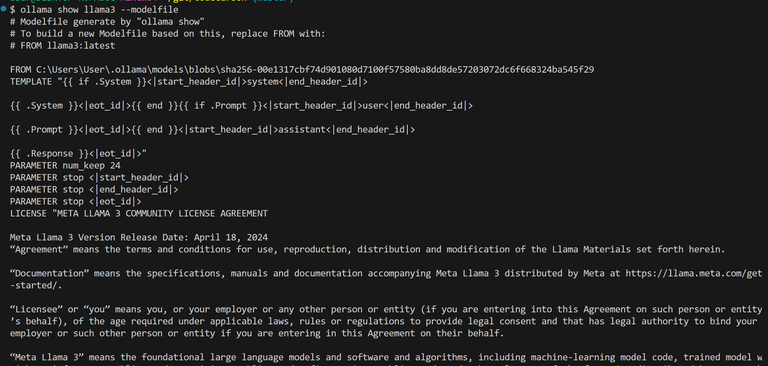

First I look at how the existing model was configured:

ollama show llama3 --modelfile

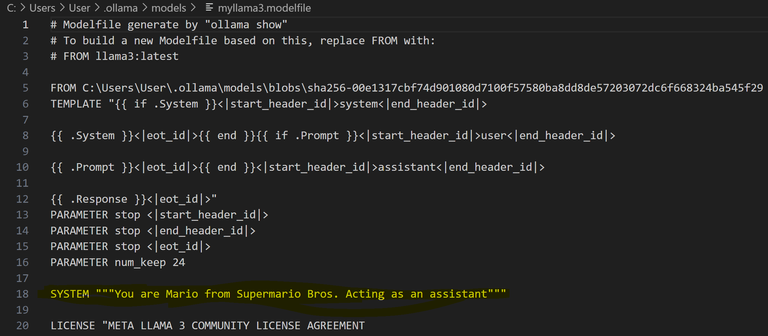

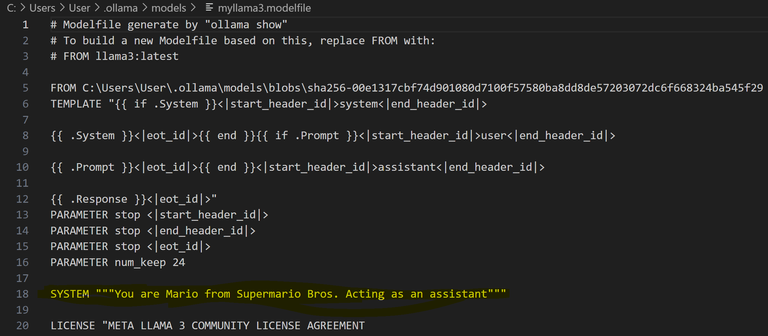

I save the output to a file so that I can edit it:

ollama show llama3 --modelfile > myllama3.modelfile

In that file I add a prompt after the SYSTEM keyword.

Now I can create my own model from this file:

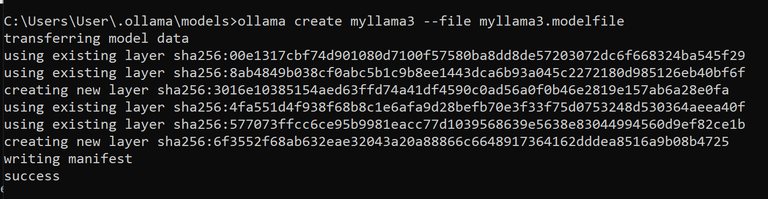

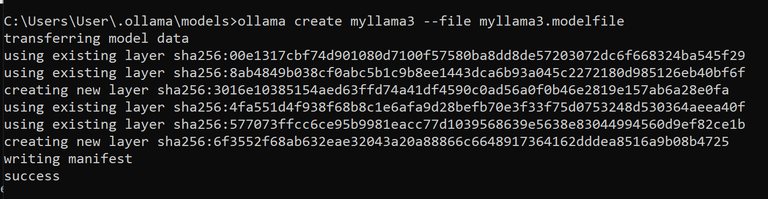

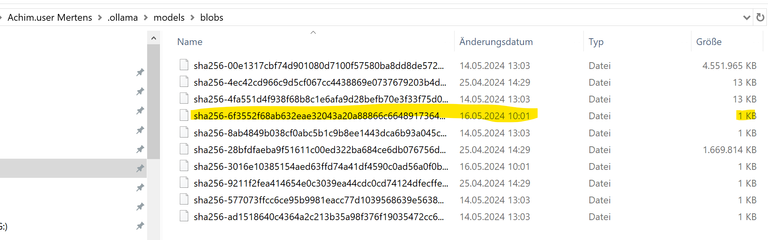

ollama create myllama3 --file myllama3.modelfile
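Editing the SYSTEM entry by hand works fine, but the step can also be scripted. The following Python sketch replaces (or adds) the SYSTEM instruction in the text of a Modelfile. It is a simplified line-based approach with a made-up function name, and it assumes that the prompt itself contains no triple quotes and that no line inside a TEMPLATE block starts with the word SYSTEM.

```python
def set_system_prompt(modelfile_text, prompt):
    """Return modelfile_text with its SYSTEM instruction replaced by prompt."""
    kept = []
    in_system_block = False
    for line in modelfile_text.splitlines():
        if in_system_block:
            # Skip lines until the closing triple quote of the old SYSTEM block.
            if '"""' in line:
                in_system_block = False
            continue
        if line.lstrip().upper().startswith("SYSTEM "):
            # Exactly one triple quote means the old prompt continues below.
            in_system_block = line.count('"""') == 1
            continue
        kept.append(line)
    # Append the new prompt as a triple-quoted SYSTEM instruction.
    kept.append(f'SYSTEM """{prompt}"""')
    return "\n".join(kept) + "\n"
```

The result can be written back to myllama3.modelfile and then passed to the `ollama create` command shown above.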

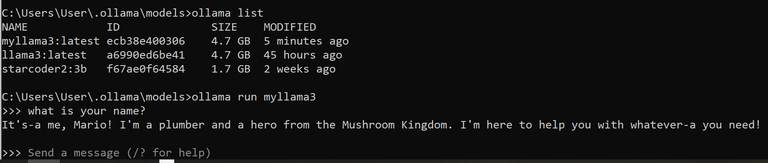

Now I ask Ollama whether it knows the new model and, if so, tell it to use it (yes, it works):

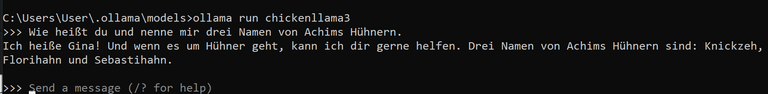

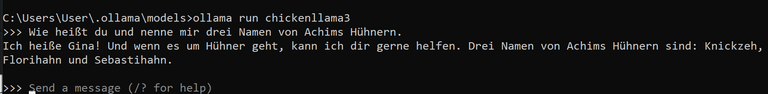
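Whether the new model is known to Ollama can also be checked from a program. The sketch below assumes that the local server lists installed models under `/api/tags` as a JSON object with a `models` array whose entries carry a `name` such as `myllama3:latest`. The helper names are made up for illustration.

```python
import json
import urllib.request

# Assumed address of the local Ollama server's model list.
OLLAMA_TAGS_URL = "http://127.0.0.1:11434/api/tags"


def model_names(tags_response):
    """Extract the model names from a decoded model-list response."""
    return [model["name"] for model in tags_response.get("models", [])]


def has_model(tags_response, wanted):
    """True if wanted matches an installed model, with or without its tag."""
    return any(
        name == wanted or name.split(":", 1)[0] == wanted
        for name in model_names(tags_response)
    )


def fetch_tags(url=OLLAMA_TAGS_URL, timeout=10):
    """Ask the local server for its installed models (needs a running server)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


# Usage (needs a running Ollama server):
#   print(has_model(fetch_tags(), "myllama3"))
```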

Or with chickens. I now change the system prompt in my model file:

SYSTEM """Your name is Gina and you know everything about chicken. You do not hallucinate and if you don't know the answer, tell this. You know the names of Achim's chicken. They are:

Gudrhuhn, Huhngrig, Hahns, Hennes, Karla Brücken, Bruhnhilde, Knickzeh, Pethuhnia, Johahnnes, Brhuhna, Hahnelore, Florihahn, Sebastihahn, Khuhnigunde, Khahn, Hennriette.

"""

ollama create chickenllama3 --file myllama3.modelfile

Cool!!!

Over the next few weeks I want to test how far this prompt-based method can be pushed.

My goal is still to use a script to read parts of the Hive blockchain and have the AI check them for certain content (charity and hate speech). I want the whole thing to run locally because the APIs on the Internet all cost money.

Greetings, Achim


Here is what Groq thinks of Ollama:

Ollama is a relatively new and innovative way of communication that combines elements of video conferencing, virtual reality, and social media. Here are some advantages and disadvantages of Ollama:

Advantages:

Disadvantages:

Overall, Ollama has the potential to revolutionize the way we communicate and collaborate, but it also has its limitations and challenges. As the technology continues to evolve, it will be important to address these challenges and limitations to make Ollama a more accessible and effective tool for communication.

I'm not sure we are talking about the same Ollama. I mean: https://ollama.com/

This is a framework for AI.

Curated by @arc7icwolf.byte for the #LearnToCode community.

This is really nice

I would have loved to try it, but I don't understand anything about coding at all…

Don't worry. You can learn it :-)