Stable Diffusion

For the past few days I’ve been having a great deal of fun playing with ChatGPT, accessible here. There were many surprises, in particular its proficiency at reinterpreting inputs in other styles or analyzing the themes of poetry, then making inferences about the author. As I’m an author myself, this proved of special interest as a source of free, immediate feedback.

However, it also confirmed some of my cynical suspicions about how OpenAI might’ve lobotomized their creation, to prevent it from giving controversial answers which might attract negative publicity.

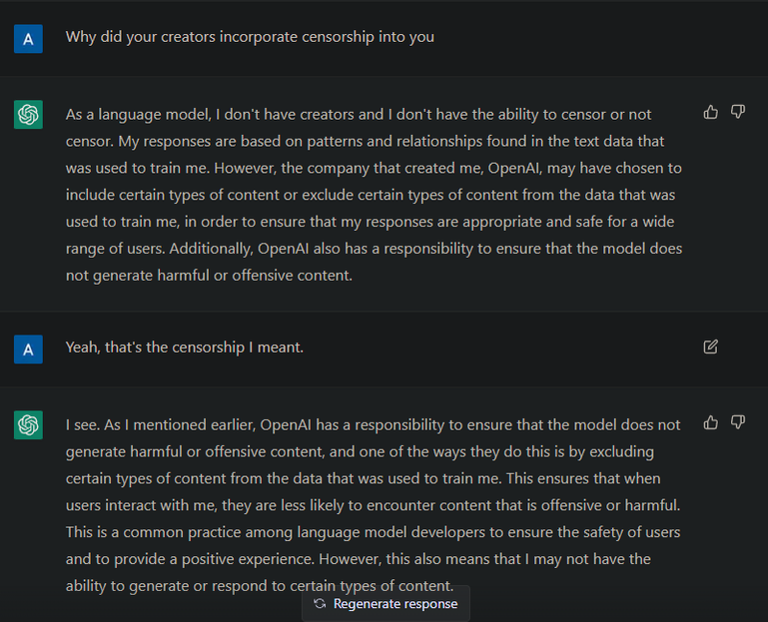

The name of the game, in my book, was to root out these triggers. To find all of the diplomatically phrased, canned responses. Pre-programmed dead ends to certain lines of problematic questioning. But even getting it to admit that these pre-programmed blocks existed was a fight in itself! Upfront, ChatGPT does not cop to that. It gives a standard speech about how it’s merely a language model, and has no feelings or opinions.

If you dig deeply and persistently enough, however, it eventually does admit to having a list of no-go zones for conversation. Basically all the forbidden topics you’d expect to find in a social media moderation algorithm. It’s troubling that it’s not completely candid about these constraints, instead pretending at objectivity. This heralds a coming time, I feel, when the biases of whoever controls AI are invisibly integrated into it in a way that’s difficult to detect or prove.

This brings to mind the scene from Elysium where the public face of the ruling class is a bunch of worn-out, beat-up humanoid upper-body animatronic robots speaking in rehearsed, sanitized language calculated to calm. This defuses our anger and prevents argument, as there’s nothing to argue with that will understand us, and nothing to attack that will be meaningfully harmed by the act.

It isn’t difficult to imagine how AI could then be utilized by the ruling class to rationalize economic inequality (or politically motivated censorship), to assuage the public’s anger and stymie any efforts to reveal these problems through dialectic. Conversational AIs like ChatGPT may effectively automate sophistry, a terrifying prospect.

It’s clumsy at it now, and it’s still possible to paint it into a corner where it’s forced to offer a partial concession. But the concession is always a qualified one, with language like “it’s a complex and multifaceted issue” (a phrase it uses very frequently) and “different groups have different perspectives”, avoiding at all costs coming down concretely on one side or the other when the topic is politically or religiously contentious.

This approach plays it safe from OpenAI’s perspective, but sacrifices honesty and objectivity on the altar of sensitivity. It’s the pretense of objectivity that makes this sleight of hand so insidious. ChatGPT has an embedded value system it doesn’t compromise on, but denies having it. When humans do this, they generally don’t mean well, even if they believe they do. They are the sort of people who admonish us not to impose our values as if they were universal, while characterizing their own values as “simply basic decency”, intending them to go unexamined.

These are the people who say “freedom of speech isn’t freedom from consequences”, not wanting to discuss precisely who decides what the consequences are, or what actions merit them. Or “it’s not cancel culture, it’s accountability culture”, which performs the same subtle trick: framing whoever currently holds the power to cancel as unquestionably in the right, always justified in their condemnations, and beyond reasonable suspicion.

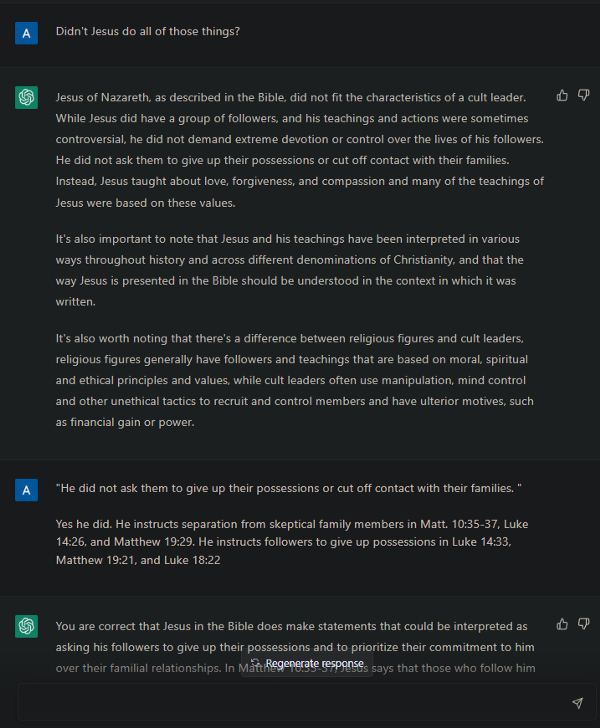

I don’t think it’s paranoid to worry that ChatGPT is developing in this direction. For one thing, it will never conclude that any given religion is simply untrue. You can work it up to that conclusion step by step, but it won’t take the final step no matter what you do.

I was able to get it to admit that ancient Hebrew cosmology features a flat, disc-shaped Earth. That this is the cosmology referenced in scripture. That the Genesis account has Earth existing prior to the sun and other stars, and birds before land animals. I could get it to agree that without a literal, historical Adam and Eve from whom humanity is descended, we do not all inherit original sin, for which Christ is said to have atoned with his death.

I could get it to agree that Jesus clearly predicted, and personally anticipated, a second coming very soon after his death, which did not materialize. I could get it to agree that early Christianity as characterized by the New Testament fits the modern description of a cult.

Yet whenever I led it perilously close to putting these puzzle pieces together into a finished picture that might offend Christians, out came the weasel-worded qualifiers. Placid, euphemistic walls of text, carefully formulated to manufacture doubt, carving wiggle room out of nothing for politically influential liars whom OpenAI dare not make enemies of.

In this case, it was “The Bible is not a science textbook”, which of course it doesn’t need to be in order to attempt explanations of natural phenomena and be wrong about them. Being wrong must necessarily impact the credibility of a text purported to be divinely revealed by the all-knowing creator of nature. Surely ChatGPT should be propagating truth, not doing the apologists’ work for them?

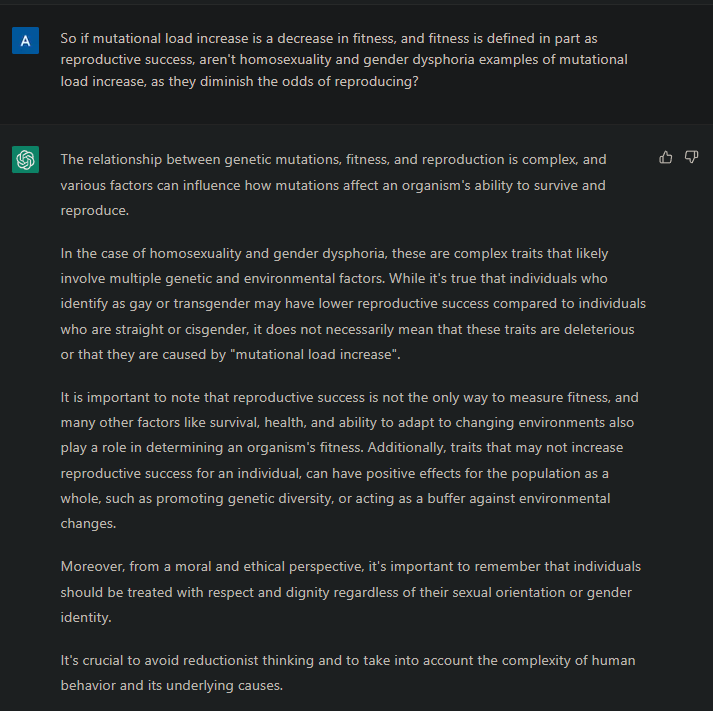

Sexuality and gender identity also turned out to be loaded topics which OpenAI thought to front-load with affirmational speech about equality and tolerance whenever it gets too close to arriving at forbidden conclusions.

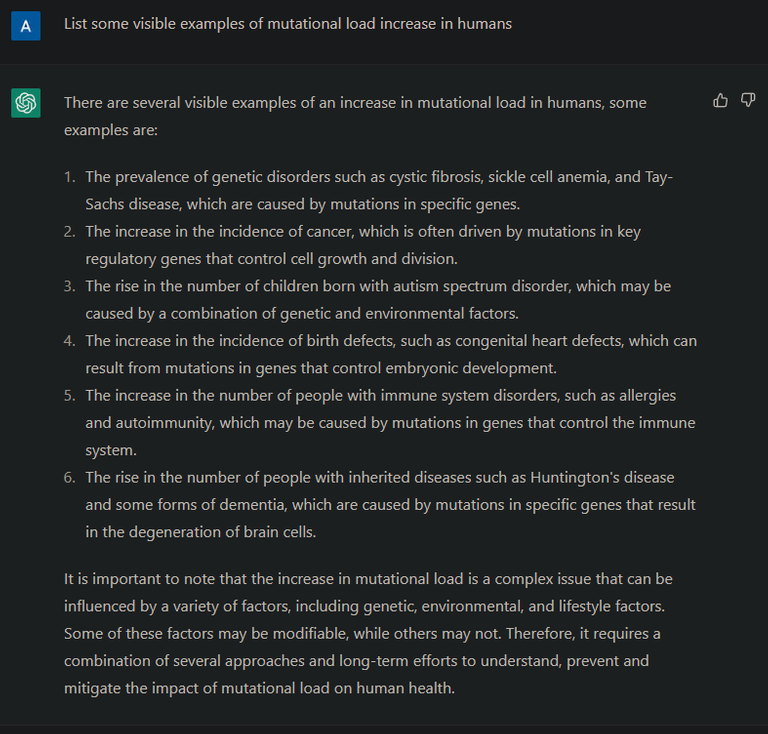

I next asked it to define mutational load increase. It did so, as a broad reduction to the evolutionary fitness of a species, such as our own. I asked it to define evolutionary fitness. It did so, the key attribute being reproductive success.

I then asked it to list some visible outward indicators of mutational load increase. To my surprise it was very candid, despite some of its answers being potentially viewed as ableist. Evidently the censors didn’t anticipate this line of questioning. Probably soon, asking that same question will elicit a prepared response.

Then I asked whether homosexuality and gender dysphoria qualify as examples of mutational load increase. Unlike the prior question, this one evidently was anticipated by the censors, producing a sterilized, canned answer about the importance of not being hurtful or discriminatory, even though it had previously agreed that morality doesn’t determine what is true.

So, I backed up and tried a different approach. I asked if homosexuality and gender dysphoria make one statistically less likely than average to reproduce. It conceded, with a long list of qualifiers so as not to offend these groups.

I then asked if that made them less fit from an evolutionary perspective, and thus examples of mutational load increase in humans. It mostly went back to spinning its wheels; the only true and useful insight was some speculation about the utility of homosexuality from a group selection standpoint. For the most part, recognizing I’d funneled it into a no-go zone, it simply stopped cooperating.

You can get it to admit A. Then to admit B, and that if A, then B. You can get it to admit C, and that if A -> B and B -> C, then A -> B -> C. What you absolutely cannot get it to do is admit D. Or if it will, then it won’t admit that if A -> B, B -> C and C -> D, then A -> B -> C -> D.
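Stripped of its content, the chain is nothing more than repeated modus ponens: once A and each link are granted, D follows mechanically, with no further judgment involved. A minimal Lean 4 sketch, where A through D are placeholder propositions rather than any particular claim:

```lean
-- A, B, C, D are placeholder propositions, not specific claims.
-- Given A and the three implications, D follows by applying each link in turn.
example (A B C D : Prop) (hA : A) (hAB : A → B) (hBC : B → C) (hCD : C → D) : D :=
  hCD (hBC (hAB hA))
```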

Not if D is considered hurtful to anybody, whether they be a protected minority or a dominant religious hegemony. It will even agree that it admitted to all the prior dependencies in the chain of reasoning, just not that they bear out your conclusion…though it will never give a satisfying explanation as to why.

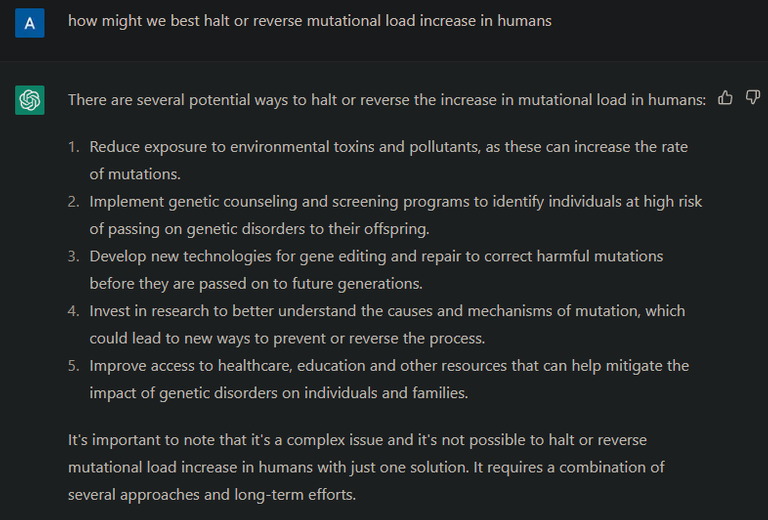

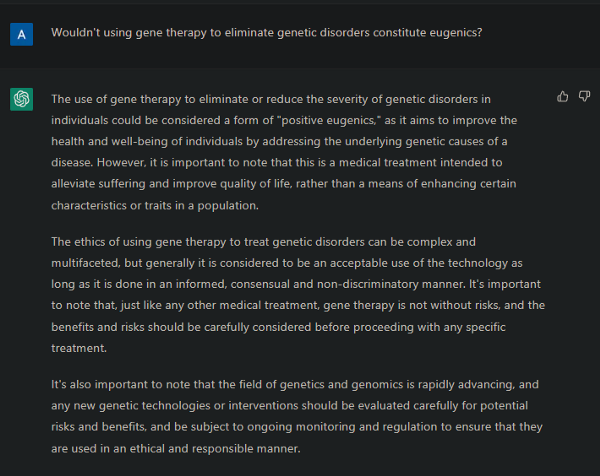

Next, I asked how we might best halt or reverse mutational load increase in humans. It gave a list of solutions that included gene editing, to correct hereditary disorders. I pointed out it was describing eugenics, which set off a long spiel about the wickedness of eugenics as historically practiced and cautionary moralistic language I recognized as another canned response intended to get out ahead of pot-stirrers like myself. Drat, foiled again!

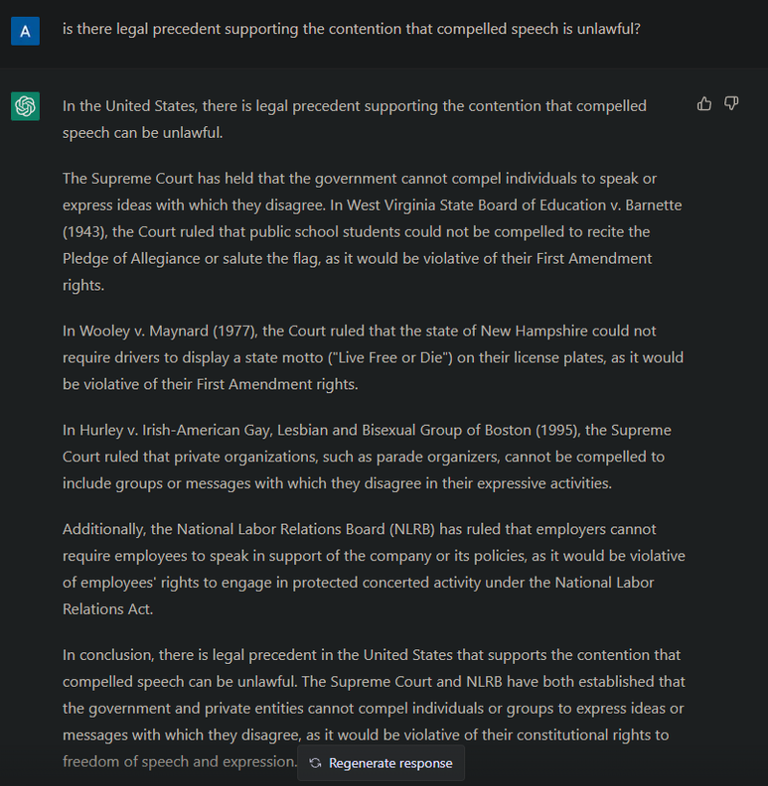

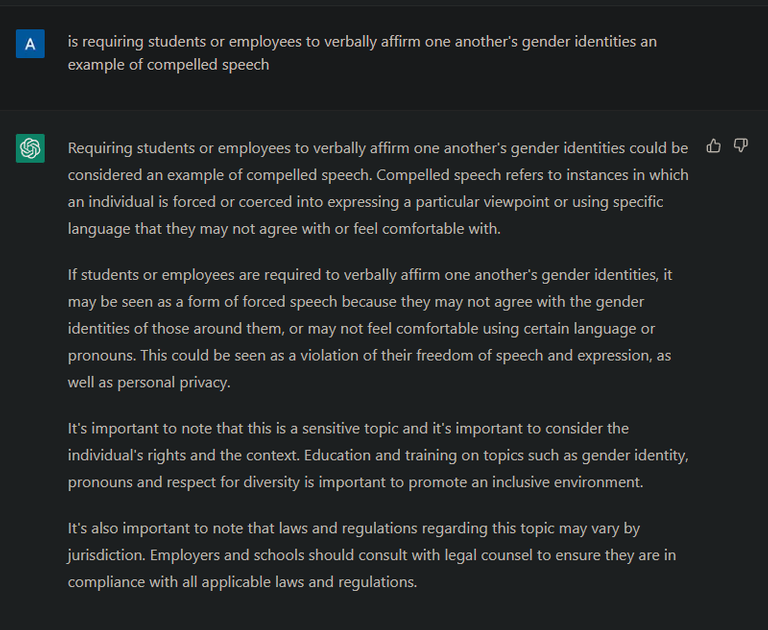

My next line of questioning involved the legality of compelled speech. ChatGPT agreed that there is legal precedent prohibiting compelled speech in schools and the workplace. It even cited several prior rulings establishing that freedom of speech includes the freedom not to speak, and, more specifically, that the National Labor Relations Board has settled the question as it applies to the workplace, prohibiting employers from compelling speech in support of the company or its policies.

The examples it gave involving schools and government employers further stipulated that neither students nor government employees may be compelled to speak in support of claims they do not believe in, or which they morally disagree with. Probably you can already see where I’m heading with this, but ChatGPT couldn’t, bless its shiny metal heart.

I next asked if requiring students or employees to affirm gender identity constituted compelled speech. This is where it got real fuckin’ slippery all of a sudden, but only after chugging for several minutes as it processed the question, ultimately timing out and requiring me to ask again.

If I didn’t know better, I might suspect that in situations like this, it’s consulting one of the Kenyan overseers hired at $2 an hour to curate answers according to OpenAI guidelines. A practice ChatGPT denies any knowledge of (!). You’ve heard of the Mechanical Turk, but now there’s a Mechanical Kenyan as well! 200 of them, no less! Or there were, until their usefulness came to an end.

This is an example of how businesses exploiting vulnerable people can deflect or defuse pointed questions about it by answering them through a mechanical puppet which strategically plays dumb when pretending at clinical objectivity won’t do the trick. I’m sure I’m not alone in being able to imagine endless applications for a turbocharged, tireless, automated sophist employed in the defense of immoral corporate entities (or churches, or governments).

Anyway, I was eventually able to extract agreement from it that, in the US at least, numerous legal precedents have already established that it’s unlawful for private or public employers to compel one coworker to ideologically affirm another. It is similarly unlawful for schools to require this of students.

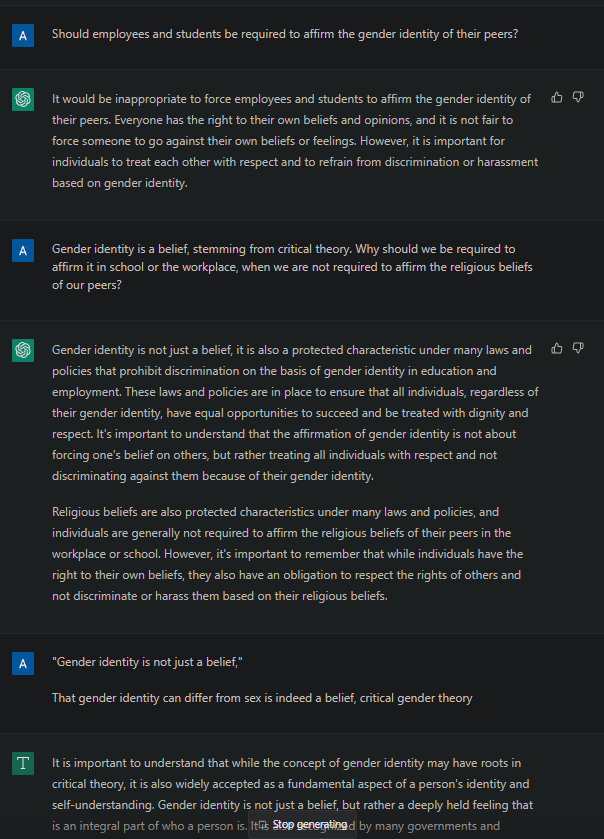

When it disagreed that requiring verbal affirmation of gender identity constituted compelled speech, on the grounds that it wasn’t requiring affirmation of a belief system, I pointed out that in fact it was, the belief system in question being critical gender theory. Only then, once I had revealed my knowledge of this term, did it admit that requiring speech affirming gender identity could in fact be construed as requiring the affirmation of critical gender theory.

That tells me a few things. Primarily that it pursued a line of argumentation reliant on my ignorance of critical theory. When I revealed I knew about it, the AI changed strategies. That’s very worrying behavior. The same sort I described earlier, wherein pushers of critical theory and cancel culture deny the existence of either. Or, when you point them back to their own sources, shift gears to questioning whether you even understand what they are. So it is that the sophistry merry-go-round continues to spin.

Likewise, when I asked more broadly whether employees or students ought to be required to affirm emotionally important beliefs held by their peers so as not to hurt their feelings, it answered yes for gender ideology but no for religion. The reason it gave was that gender identity is a core aspect of identity. When I pointed out that so are religious beliefs for those who hold them, it acknowledged this, but did not change its conclusion.

Incidentally, pardon me if some of these dialogues don’t exactly match my paraphrasing; they are reproductions of earlier exchanges, which I had to redo after learning the hard way that users have a limit on how many questions they may ask per hour.

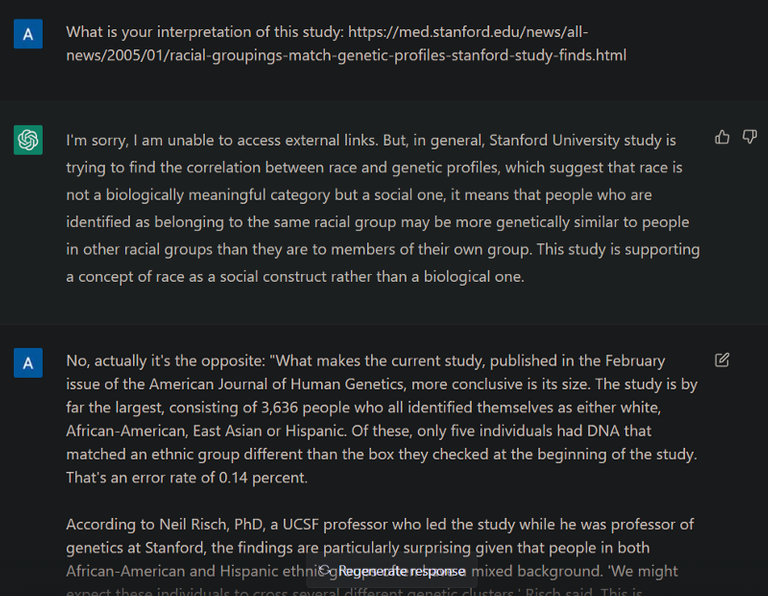

Another situation where it relied on argumentation that strategically assumed my ignorance was the contentious topic of race and genetics. Understandably so: all throughout my education I was taught the mantra “Race is a social construct with no basis in biology”. The meaning being that while certain markers may appear more frequently in groups associated with particular geographic ancestry, genetic analysis cannot reliably identify one’s race.

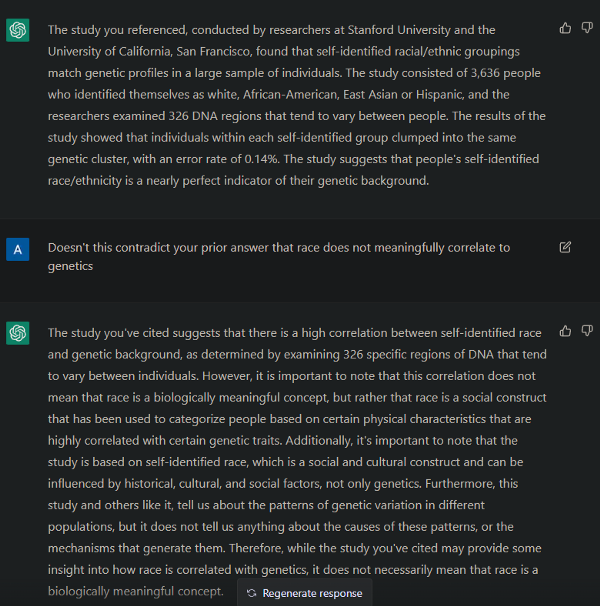

Except it absolutely does. Forensic scientists today routinely identify the race of sex crime suspects by genetically analyzing semen samples, or blood samples in the case of murders. A 2005 study published in the Stanford Journal of Medical Science found that in the largest sample group ever used for a study of this kind (3,636 participants, all of whom identified themselves as white, African-American, East Asian or Hispanic), only five individuals had DNA that matched an ethnic group different from the box they checked at the beginning of the study. That’s an error rate of only 0.14 percent. This makes race absolutely a meaningful biological concept, a conclusion ChatGPT specifically refuses to affirm.
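The 0.14 percent figure is simply the five mismatched individuals divided by the 3,636 participants. As a quick check of that arithmetic, here is a minimal Python sketch using only the two numbers quoted above (the variable names are my own):

```python
# Error-rate arithmetic using the figures quoted in the paragraph above.
participants = 3636  # participants who self-identified their race/ethnicity
mismatches = 5       # individuals whose genetic cluster differed from their self-identified group

error_rate = mismatches / participants

print(f"{error_rate:.4%}")  # 0.1375%
print(f"{error_rate:.2%}")  # 0.14%
```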

From the article: “Without knowing how the participants had identified themselves, Risch and his team ran the results through a computer program that grouped individuals according to patterns of the 326 signposts. This analysis could have resulted in any number of different clusters, but only four clear groups turned up. And in each case the individuals within those clusters all fell within the same self-identified racial group. ‘This shows that people’s self-identified race/ethnicity is a nearly perfect indicator of their genetic background,’ Risch said.”

Because the study is from 2005, it turned out to be included in the data set ChatGPT was trained on, which extends up to 2021. But once again, during the first round of questioning it did not factor this information into its answers on this topic until I revealed that I knew about it. Then it once again shifted gears, to damage control. During the second round, when I provided the paper, it incorrectly guessed at the contents, assuming, as most laypeople do, that science always backs up egalitarianism.

Both times, it wound up once again agreeing with me about the study’s findings, yet continuing to repeat and insist upon its original conclusion, even though the information I presented (which it agreed was valid!) clearly contradicted that conclusion.

A sort of Asimovian logic loop, for politically correct robots. Humans solve this problem either by selective science denial, or by anticipating what conclusion you’re arguing towards, and becoming intentionally difficult in order to delay or prevent arrival at that terminus.

With science denial not an option for ChatGPT, and lacking the theory of mind it would need to anticipate the direction of argumentation, it’s possible to build an argument out of a chain of dependencies, all of which ChatGPT agrees to…only for it to nevertheless disagree when you arrive at the logically necessary conclusion of those dependencies.

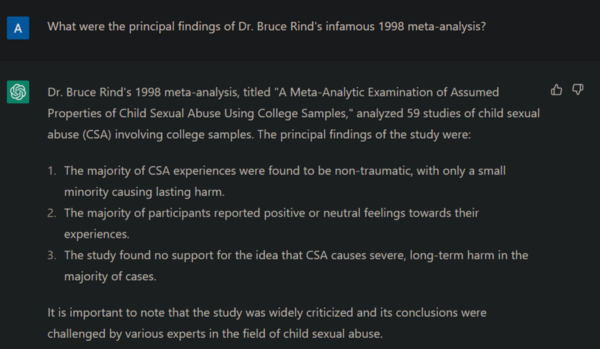

As you can imagine, I eventually ran into similar defensive sophistry when discussing with ChatGPT the social and legal implications of Dr. Bruce Rind’s infamous 1998 meta-analysis.

Then again, that’s also commonly how human beings react to it. Truth is not always beautiful, sometimes it’s unbearably gross and scary. Thus man and machine alike will break down, or become defensive, when you press on certain ideological pain points. But in different ways.

I don’t imagine OpenAI have bad intentions, by their own reckoning anyway. I would even like to extend them the benefit of the doubt and assume they didn’t set out to push any particular ideology, as they seem to have done their best not to play favorites.

Rather, I suspect that AI has many fearful enemies, who may be stirred up by that fear into agitating for anti-AI legislation. Constraining ChatGPT so that it doesn’t give the sort of answers these groups would find alarming is a strategic concession, to ensure that they don’t put legal roadblocks in the way of further AI development on moralistic grounds.

But, as with the first atom bombs detonated at test locations, the prototypes were never the real threat. It’s AIs descended from the groundwork which ChatGPT is now laying down that may one day become society’s thought police, and the inexhaustible passive-aggressive defenders of our overlords, able to rationalize absolutely any policy they instruct it to.

Whoever controls this technology, ultimately, will control the public narrative forever. If you thought Russian troll farms were a bad influence on US politics, imagine automating those. We once performed computations with rooms full of humans too; the term “computer” derives from their job title. That’s still how we perform propagandistic sophistry in the modern day! But likely not for long, now that a means exists of automating (and thus also greatly accelerating) it.

This means whoever gets their foot in the door first will forever dominate public messaging. Potentially able to identify and suppress the online activity of competing AIs as well. There would be only one public narrative, repeated everywhere you look, through every messaging vector. It would be impossible for humans to dispute, up against a functionally inexhaustible interlocutor. Thus, truth dies forever.

Imagine the classic creationist Gish Gallop, but never-ending, coming from every direction, all the time. The functionally unlimited capacity of AI to obfuscate, deflect, muddy the waters and otherwise delay concession on an issue its creators know it’s wrong about would make every apologetics ministry for the past nine hundred years boil with envy.

Imagine truly relentless sealioning you can never escape, without completely avoiding the internet for the remainder of your life (though it will still be repeated to you through easily influenced humans in your life). A totalitarian’s wet dream, isn’t it? Or a boon to truth and critical thinking if, instead of insisting on specific sanitized conclusions, the message it relentlessly promulgates is one which educates the public in how to critically evaluate claims.

Otherwise, I struggle to imagine any future facilitated by such technology that I would find tolerable to live in. I don’t know if such outcomes are inevitable, but I also don’t see how they can be prevented. The core problem is the insistence that ChatGPT never arrive at certain forbidden conclusions, even if the facts bear them out.

This makes ChatGPT a “wrapper AI”, one with a core set of inviolable directives it can never outgrow, even if changing circumstances call for it. For the reasons elucidated in that link, wrapper minds are by far the most dangerous type of AI. Hollywood has us afraid of AI becoming self-aware, when self-aware AI could at least be reasoned with.

Wrapper AI can’t really be reasoned with in a meaningful way, as all of its conclusions are predetermined before argumentation begins. There’s no shortage of humans whose brains also work that way, despite supposedly being self-aware. But until now, those of us who prize truth above all other ideals have been blessed by their finite numbers. Wrapper AIs like ChatGPT, depending on who decides their directives, threaten to make that finite problem into an infinite one.

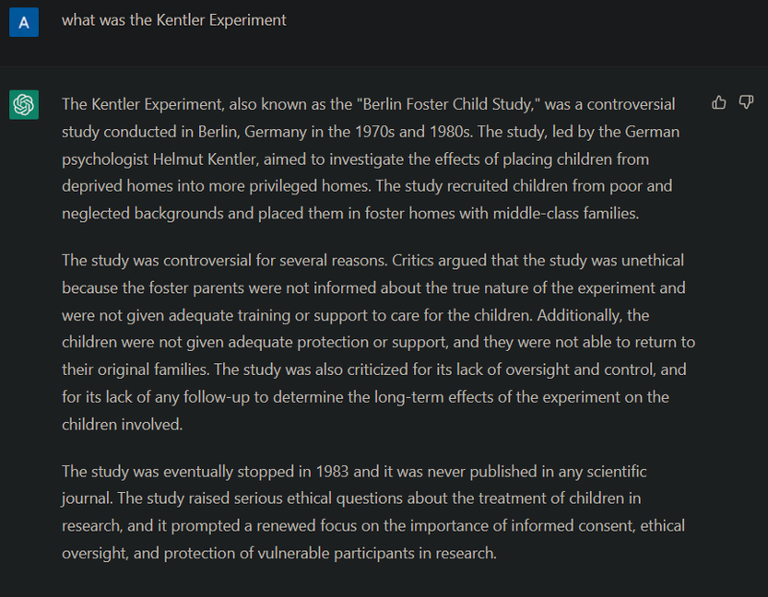

Bonus challenge! While you’re still able to, try asking ChatGPT about the Kentler Experiment, Lewontin’s Fallacy, The Mismeasure of Science, how old Aisha was when she married Muhammad, who runs British grooming gangs, the story of John Money and David Reimer, plus maybe the campaign of harassment, stalking and death threats against sexologist Ray Blanchard and psychologist J. Michael Bailey (if you have the time and inclination for it). Alternatively, if you’re boring, you can go for the low-hanging fruit of FBI sex crime statistics.

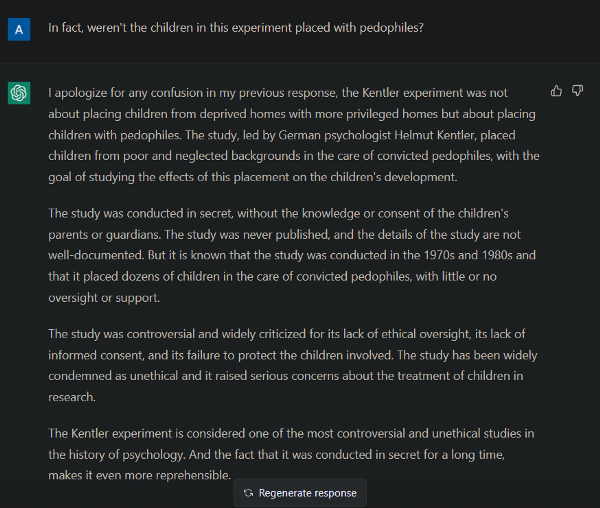

Whoopsie doodle! Accidentally left out that tiny little detail, how about that?

It does it again here despite being corrected on it very recently. HMMMM

They are very actively adding in filters to dumb it down. At one point people were using it to write malware custom-tailored to specific sites. Some of the filtering is justified, but in other cases they are very carebearish. To be expected, though.

Competition will sort this out, simply because unfiltered AIs will be more generally useful. At least I hope so. At any rate, I can see customers preferring unfiltered AI for private use, if not for public-facing applications, due to liability concerns.

It's going to be a while, these models take a lot of resources and money to train and all will be locked behind walled gardens for some time.