Activities for June were mostly centered around deploying the 2.0.180612 release of the BitShares software to all of my nodes. Since this release is a major upgrade with many changes it was deployed first on the testnet before being released for production. I chose to deploy it slowly into production, giving it a full week on the first of my nodes and rolling it out to the others roughly one week apart. I deployed it to the last node this morning.

This release will have many changes to the consensus algorithms and is thus a hard fork release. Two weeks from today the BitShares blockchain will start using these new consensus rules which change how the blockchain operates. According to abitmore's blog post, the release will include at least 18 bug fixes and 12 BSIPs (BitShares Improvement Proposals). It will also mark significant changes to the BitShares API. All these changes represent a significant amount of development effort that warrant a longer testing period, which started on the testnet back in May and will not be activated in production until July 19th. Those who say BitShares is dead and is no longer innovating or improving haven't been paying attention. The code development team of BitShares is alive and well, and progress continues. Please be sure to thank the BitShares code development team for their hard work and dedication.

In reflecting on the algorithm used by the failover script I thought of a way to improve it by waiting for the right spot in the witness order before initiating the update_witness API call. I discussed this with abitmore and clockwork on telegram and I will code a script to employ the approach. It actually is less important for the failover script than it is to use as a method to switch in general, for example to perform maintenance on a BP node, but will use it in both places anyway.

On other items there was another increase in the number of witnesses from 25 to the current 27, which seems to be stable now. More newsworthy is the large number of operations by witness.yao, who had an error in his feed publishing that resulted in a huge number of operations posted to the blockchain. I do not understand why publishing price feeds requires so many operations, but I presume it is related to the number of objects that rely on them, all of which need to be updated. The frequency of publication and the number of assets feeds are published for result in large numbers of operations. Before witness.yao's issue which resulted in more than 4 million operations, my witness produced 33% more operations than any other witness, and that is completely due to my feed publications.

Lastly, I have resolved the trouble with running bts_tools within a docker container on the testnet and this have resumed reporting on the status of the testnet. The problem was due to a package update on the docker base OS image which was not backward compatible with the old version used by bts_tools. I will now also begin reportin on the price of EOS, now that chain has been launched and block producers elected.

Here is the market summary since last month (all prices via CMC): BTC=$6,663.59, BTS=$0.17, STEEM=$1.53, EOS=$8.93, PPY=$3.56.

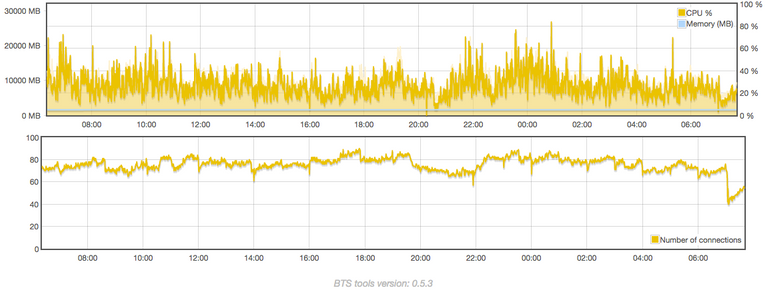

Amsterdam

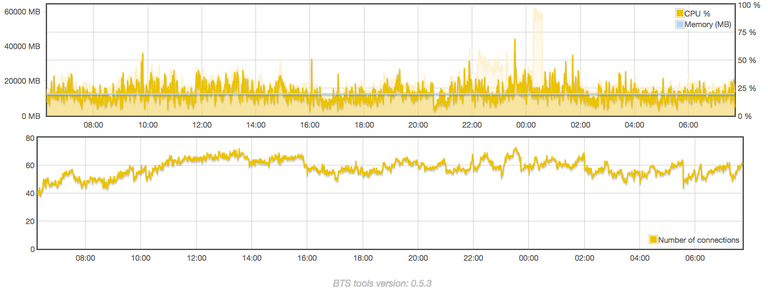

Gemany, node 1

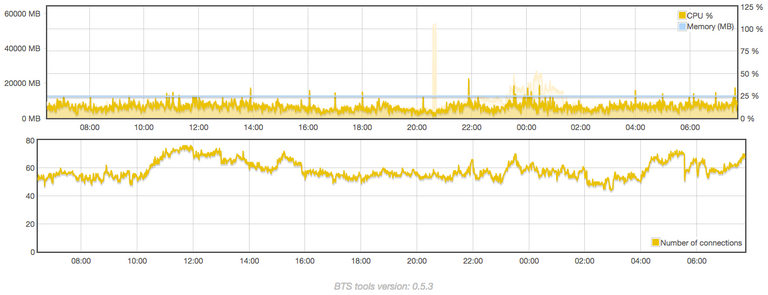

Germany node 2

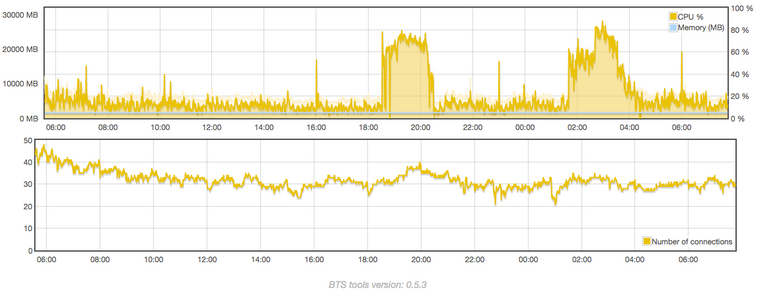

Singapore

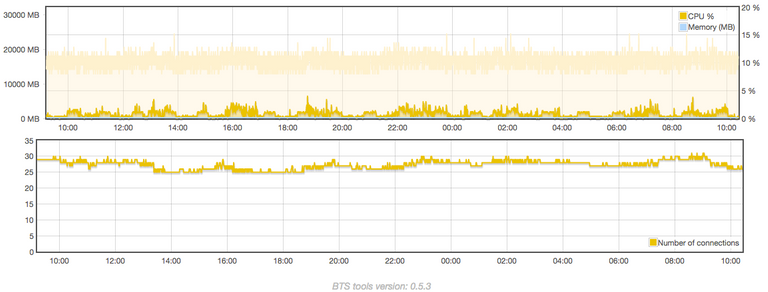

And the testnet server for BTS:

Verbaltech2

is greatly appreciated! Thanks for your time and attention

Very cool! What's a failover script?

It automatically switches to a different BP (Block Producer) node when the currently active BP fails. This is only for my own nodes. Graphene switches to a different BP every 3 seconds.

Interesting. What if your BP loses network connectivity though? Then it can't notify another node to boot up and start producing blocks. Unless theres an internal network they can communicate with each other on, yea?

The switcher script is run on an independent server. Been thinking of ways to add even more redundancy by protecting that as well. A second failover monitor. Haven't seen much need for that level of redundancy tho. Gotta be a point of diminishing returns.

Yeah makes sense. That solution works as well.