Great work, and I know this is going in the right direction. I have a specific request.

If this is possible:

We need absolutely drop-dead simple instructions for running the most lightweight HAF-based API server, one that can run on an absolute bare-minimum $10 VPS.

Something as simple as editing a .env and then docker compose up -d is what I'm looking for.

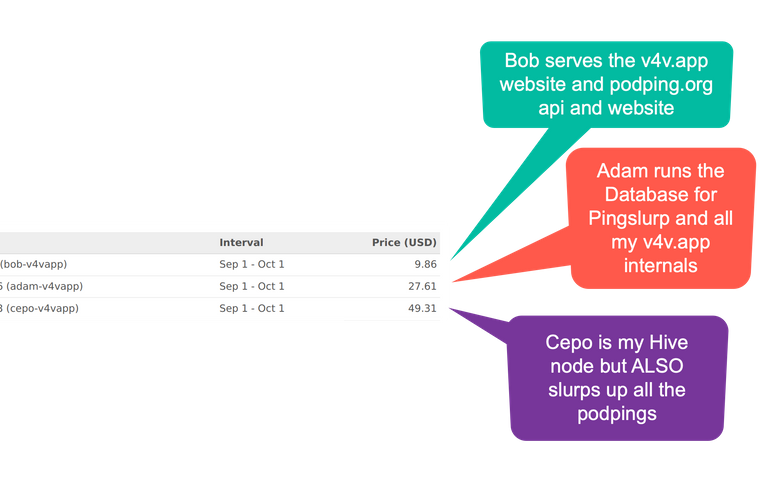

In the last couple of years, while you were building HAF, I wrote and deployed my own code, called Pingslurp, to keep track of all Podping custom_jsons. It now holds 6 months of my podpings, but the MongoDB database is only 1.6 GB, which is perfectly manageable on really cheap hardware.

All my code really does is look for the on-chain data I'm interested in and save it in a reasonable format. I must admit I do some cool stuff with time series information which I have no idea how to do in SQL.

I only built this because every time I looked at the HAF docs, the first step was getting a machine with specs I will never need for this app, to hold social data I don't need, because all I want are my custom_jsons.

Just to make this clear, and to explain why up to now I've not even tried using HAF: each time I looked, the base spec made me cry!

The base spec for a HAF server is extremely small (something like 6GB of storage space).

So I suspect you're looking at the storage needed to hold the entire blockchain (theoretically around 3.5 TB, but in practice only around 1.2 TB using zfs with lz4).

But like I said, the above storage space is only needed for a full API server that needs access to all the blockchain data. When you set up your HAF server for your HAF app, you just need to add a line to your hived config.ini file to filter out all but the operations you want. Then you'll have a super small database.
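To give a rough feel for what that kind of filter does, here is a small Python sketch. It is only an illustration of the idea, not the actual hived config.ini syntax or HAF's implementation: the sample operations, the `pp_` id prefix, and the function names are all made up for the example.

```python
import re

# Hypothetical sample operations, loosely shaped like Hive operations.
# These are invented for illustration and are not real chain data.
SAMPLE_OPS = [
    {"type": "vote_operation", "value": {"voter": "alice"}},
    {"type": "custom_json_operation", "value": {"id": "pp_podcast_update", "json": "{}"}},
    {"type": "custom_json_operation", "value": {"id": "follow", "json": "{}"}},
    {"type": "comment_operation", "value": {"author": "bob"}},
]


def keep_operation(op, wanted_type, id_pattern):
    """Return True if op has the wanted type and its id matches the pattern."""
    if op["type"] != wanted_type:
        return False
    # re.match anchors at the start of the id, so r"pp_" acts as a prefix test.
    return re.match(id_pattern, op["value"].get("id", "")) is not None


def filter_operations(ops, wanted_type="custom_json_operation", id_pattern=r"pp_"):
    """Keep only the operations the app cares about; everything else is dropped."""
    return [op for op in ops if keep_operation(op, wanted_type, id_pattern)]


kept = filter_operations(SAMPLE_OPS)
print(len(kept), "of", len(SAMPLE_OPS), "operations kept")
```

The point is just that everything not matching the filter never gets stored, which is why the resulting database can stay small.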

As far as docker goes, the recommended way to set up a HAF server nowadays is with docker. We're also creating a docker compose script for setting up a full API node, but a simple HAF server all fits in one docker container, so there's no need for docker compose.

Does the database run in the same docker container? I will look again at the docs thank you!

The database software runs in the same container as hived (this is the "haf" docker), but by default you end up bind mounting your hived workdir and the database's storage to a local directory. If you use the script to create the docker container, it handles this process (with command-line options to override the defaults); if you use the as-yet-unreleased docker compose script, you edit the associated .env file instead.