So we know what Web 3.0 is, right? It's Hive, obviously.

And Web 2.0 was AJAX, or something. Actually, it was AJAX and the semantic web, even though the two are unrelated. AJAX is the old buzzword we used for asynchronous JavaScript. The semantic web would be stuff like non-UI markup that provides accessibility features, and embedding standards that let you paste a URL into Twitter or Discord and see a nice preview.

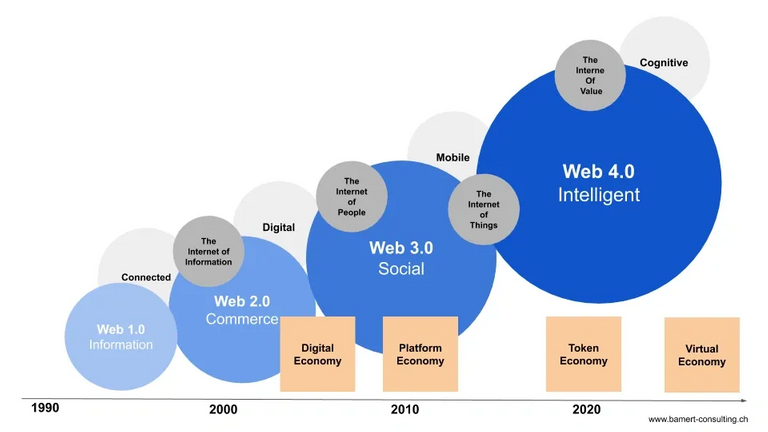

Therefore, Web 4.0 would be an even better thing because it's one better than Web 3.0. Got it?


What would Web 4.0 really be? Let me make this extremely concrete: I think it's basically an intelligent interface framework.

Right now, when you upvote something on Hive, you're doing Web 3.0. But if that upvote were queued into an accumulator, so that if it failed to be included in a block you'd be notified and allowed to retry it later (or anything other than it silently failing), that would make it Web 4.0.

The idea is that, right now, we have fire-and-forget. If your intended upvote does not get included for any reason (for example, there's a timeout and the node accepting the transaction never receives the signed transaction), the accumulator still has a record that you intended to vote. Maybe it simply re-submits the transaction within the expiration period, in which case you never notice, the entry is removed from the accumulator once the vote lands, and Web 4.0 silently saves the day.
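A minimal sketch of such an accumulator, in TypeScript. The names (`Intent`, `ChainView`, `IntentAccumulator`) are hypothetical and do not come from any existing Hive library. It assumes some block-stream-backed `ChainView` can answer whether a transaction id has been included, and it re-broadcasts the same signed transaction until its expiration. That retry should be harmless, because hived's duplicate-transaction check rejects a transaction that already landed.

```typescript
// Hypothetical intent accumulator: remembers what the user meant to do
// until the chain confirms it or the signature expires.

interface Intent {
  id: string;        // transaction id of the signed transaction
  signedTx: unknown; // opaque signed transaction, re-broadcast verbatim
  expiresAt: number; // expiration baked into the signature (ms since epoch)
}

interface ChainView {
  // Backed by a block stream in practice; a synchronous lookup here.
  isIncluded(txId: string): boolean;
}

class IntentAccumulator {
  private readonly pending = new Map<string, Intent>();
  private readonly chain: ChainView;
  private readonly broadcast: (signedTx: unknown) => void;
  private readonly onExpired: (intent: Intent) => void;

  constructor(
    chain: ChainView,
    broadcast: (signedTx: unknown) => void, // fire, but don't forget
    onExpired: (intent: Intent) => void,    // hand the failure back to the UI
  ) {
    this.chain = chain;
    this.broadcast = broadcast;
    this.onExpired = onExpired;
  }

  submit(intent: Intent): void {
    this.pending.set(intent.id, intent);
    this.broadcast(intent.signedTx);
  }

  // Call periodically, e.g. once per block.
  tick(now: number): void {
    // Snapshot the values so entries can be deleted while looping.
    for (const intent of Array.from(this.pending.values())) {
      if (this.chain.isIncluded(intent.id)) {
        this.pending.delete(intent.id);   // intent carried out; now forget it
      } else if (now < intent.expiresAt) {
        this.broadcast(intent.signedTx);  // silent retry with the same signature
      } else {
        this.pending.delete(intent.id);
        this.onExpired(intent);           // signature is dead; user must re-sign
      }
    }
  }

  get size(): number {
    return this.pending.size;
  }
}
```

In the happy path the user never sees any of this: the entry quietly disappears once the vote is found in a block.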

Or, in a rarer situation, the upvote is not included due to a micro-fork. The accumulator might retry that as well, only to find that the fork lasts past the expiration, in which case you must be notified that your intended operation failed and needs to be re-signed. In this case, Web 4.0 tells you that something was interrupted and it needs further instructions.
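Handling micro-forks means treating "included" as provisional until the block becomes irreversible. The classification below is a sketch with hypothetical names: a transaction sitting in a reversible block is left alone, one that is missing (never included, or dropped when its block was orphaned by a fork) is retried until it expires, and after that the user has to re-sign.

```typescript
// Hypothetical view of where, if anywhere, a transaction currently sits.
type Inclusion =
  | { kind: "absent" }                        // not in any block we know of
  | { kind: "reversible"; blockNum: number }  // in a block a fork could still undo
  | { kind: "irreversible"; blockNum: number };

type Verdict = "confirmed" | "wait" | "resubmit" | "needs_resign";

function classify(inclusion: Inclusion, expiresAt: number, now: number): Verdict {
  // Final: safe to drop from the accumulator.
  if (inclusion.kind === "irreversible") return "confirmed";
  // Included for now, but a micro-fork could still orphan the block.
  if (inclusion.kind === "reversible") return "wait";
  // Never included, or its block was orphaned: retry while the signature is valid.
  return now < expiresAt ? "resubmit" : "needs_resign";
}
```

The fork case from the paragraph above falls out naturally: a vote moves from `"reversible"` back to `"absent"` when its block is orphaned, and becomes `"needs_resign"` only if the fork outlasts the expiration.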

What I'm describing is not just a way to make sure upvotes happen. It's an entire framework that is aware of edge cases where intent is not carried out, or is carried out in non-standard ways.

Whatever the solution is, it would apply to all types of operations, not just upvotes. It would be implemented in a uniform way, encapsulated in a published, general library standard, so that it's not just a feature that exists in one language or library.
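To make the "uniform, published standard" idea a little more concrete, here is a hypothetical shape for an operation-agnostic intent record, plus its lifecycle expressed as a transition table. The field names, statuses, and version number are illustrative assumptions, not an existing spec; operations are modeled as `[name, payload]` pairs so that votes, transfers, and comments are all handled the same way.

```typescript
// Hypothetical, language-neutral intent record.
type Operation = [name: string, payload: Record<string, unknown>];

type IntentStatus = "pending" | "included" | "confirmed" | "expired";

interface IntentRecord {
  version: 1;              // schema version of the (hypothetical) standard
  txId: string;
  operations: Operation[]; // any operation type, treated uniformly
  expiresAt: string;       // expiration of the signed transaction, as a timestamp string
  status: IntentStatus;
}

// Allowed lifecycle transitions, kept as data so every implementation,
// in any language, can share the same table.
// "included" -> "pending" is the micro-fork case.
const TRANSITIONS: Record<IntentStatus, IntentStatus[]> = {
  pending: ["included", "expired"],
  included: ["confirmed", "pending"],
  confirmed: [],
  expired: [], // terminal: the user must sign a new transaction
};

// Returns an updated copy; throws on a transition the table does not allow.
function advance(record: IntentRecord, next: IntentStatus): IntentRecord {
  if (!TRANSITIONS[record.status].includes(next)) {
    throw new Error(`illegal transition ${record.status} -> ${next}`);
  }
  return { ...record, status: next };
}
```

Because the record is plain JSON and the lifecycle is a table rather than code, a library in another language could read, write, and validate the same records.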

Those are the append (write) scenarios. We also need read-only scenarios. For example, once a vote is cast, the UI should immediately estimate the upvote's value and update the display. It's just an estimate, but it's informed by standards-based calculations and accurate for the best-case scenario. That's even better than waiting 3 seconds (like we used to do way back when, before we lost that functionality due to Hivemind lag).
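As a rough sketch of the instant vote estimate, the function below uses the linear formula commonly cited for Hive: the vote spends a share of the voter's mana, a dust threshold is subtracted to get rshares, and the rshares are priced as a share of the reward pool. The input names are hypothetical; `rewardBalance` and `recentClaims` stand for the reward fund's `reward_balance` and `recent_claims`, and `hbdPerHive` for the median price feed. The 2% mana cost per full-weight vote and the 50,000,000 dust threshold are assumptions to check against hived before relying on them.

```typescript
// Hypothetical inputs, read from chain state in a real client.
interface VoteEstimateInputs {
  currentManaRaw: number; // voter's current voting mana, raw units (VESTS * 1e6)
  weightBps: number;      // upvote weight in basis points; 10000 = 100%
  rewardBalance: number;  // reward fund balance, in HIVE
  recentClaims: number;   // reward fund recent claims, in rshares
  hbdPerHive: number;     // median price feed, HBD per HIVE
}

// ASSUMPTIONS (verify against hived): a 100% vote spends 2% of current
// mana, and a fixed dust threshold is subtracted from the rshares.
const MANA_SPENT_PER_FULL_VOTE = 0.02;
const VOTE_DUST_THRESHOLD = 50_000_000;

// Best-case estimate of what an upvote adds to the post's total payout
// (before the author/curator split), in HBD. Downvotes are not modeled.
function estimateVoteValueHbd(i: VoteEstimateInputs): number {
  const usedMana = i.currentManaRaw * (i.weightBps / 10_000) * MANA_SPENT_PER_FULL_VOTE;
  const rshares = Math.max(0, usedMana - VOTE_DUST_THRESHOLD);
  // Linear reward curve: the vote earns its rshares' share of the pool.
  return (rshares / i.recentClaims) * i.rewardBalance * i.hbdPerHive;
}
```

The UI can show this number the moment the vote is signed and correct it later from chain data; it is "best case" because the pool's recent claims keep moving after the vote.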

Another read-only scenario we need is the ability to predict an account's current resource credits (RC), live. If the UI determines that a user does not have enough resource credits for an operation, it should not even let them broadcast it.
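A live RC prediction can be sketched from the account's last known RC snapshot, assuming RC regenerates linearly to full over five days the way Hive's manabars do (verify the constant). The names are hypothetical, and the per-operation cost is taken as an input, because estimating it depends on the chain's current resource pools.

```typescript
// ASSUMPTION: linear regeneration from empty to full over 5 days.
const RC_REGEN_SECONDS = 5 * 24 * 60 * 60;

interface RcSnapshot {
  maxRc: number;      // account's maximum RC
  currentRc: number;  // RC at the time of the snapshot
  lastUpdate: number; // snapshot time, seconds since epoch
}

// Project the snapshot forward to `now`, capped at the maximum.
function predictRc(s: RcSnapshot, now: number): number {
  const elapsed = Math.max(0, now - s.lastUpdate);
  const regenerated = Math.floor((s.maxRc * elapsed) / RC_REGEN_SECONDS);
  return Math.min(s.maxRc, s.currentRc + regenerated);
}

// Gate the broadcast button. `estimatedCost` is a hypothetical input.
function canBroadcast(s: RcSnapshot, now: number, estimatedCost: number): boolean {
  return predictRc(s, now) >= estimatedCost;
}
```

Plain numbers keep the sketch readable; since real RC values are large, a production version would likely use `bigint` to avoid precision loss in `maxRc * elapsed`.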

Fire-and-forget is going to get tedious if we don't get ahead of it. Actually, it already is.

Would this be layer 1 or 2? Sounds pretty cool either way.

It doesn't matter.

Doesn't HAF by blocktrades do what your accumulator does?

Not really. The closest things we have right now are Hive Keychain and any client that cycles through API nodes when there are timeouts (failover). The problem is that they all use different approaches, and not all of them are resilient to every edge case.
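For comparison, the node-cycling failover that clients already do can be sketched in a few lines. The node URLs and the `call` signature are placeholders, not any particular client's API. Note what it cannot tell you: a timeout does not prove the transaction never reached the chain, which is exactly the edge case the accumulator idea is meant to cover.

```typescript
// Reject if `p` does not settle within `ms`; always clear the timer.
function withTimeout<T>(p: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms);
    p.then(
      (v) => { clearTimeout(timer); resolve(v); },
      (e) => { clearTimeout(timer); reject(e); },
    );
  });
}

// Try each API node in order until one answers in time.
async function withFailover<T>(
  nodes: string[],
  call: (node: string) => Promise<T>,
  timeoutMs: number,
): Promise<T> {
  const errors: string[] = [];
  for (const node of nodes) {
    try {
      return await withTimeout(call(node), timeoutMs);
    } catch (e) {
      // A timeout here is ambiguous: the node may still have relayed the tx.
      errors.push(`${node}: ${e instanceof Error ? e.message : String(e)}`);
    }
  }
  throw new Error(`all nodes failed: ${errors.join("; ")}`);
}
```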

It might be within the scope of HAF to provide the read-only statistics as an API method.