This is a rather technical post that gets into the details of both the HIVE broadcast API and stateful hash-based signatures. I really hope it reaches some of the more technical people in the community, so I can find out whether I'm on the right track with this.

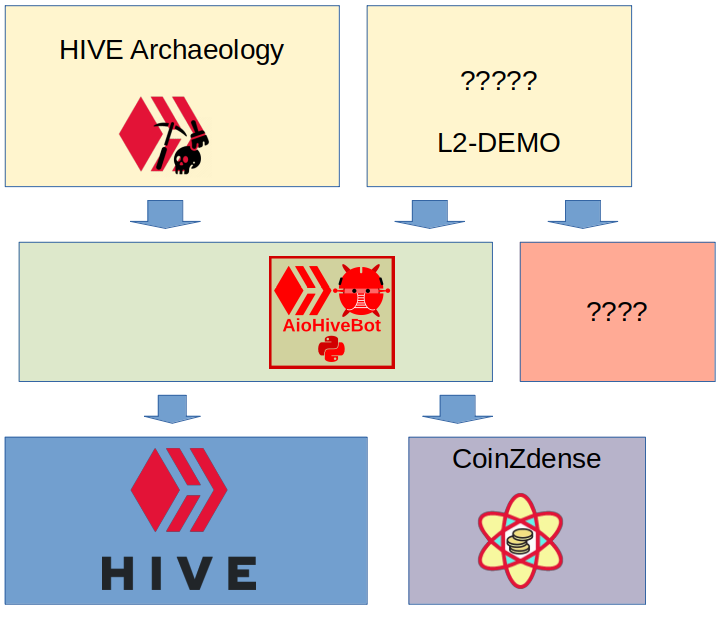

I'm currently working on the aiohivebot Python library for HIVE. AIO-Hivebot aims to become a specialized asyncio Python library for HIVE. It is less suitable than Beem (I'm personally not a fan of Beem) or the excellent lighthive for writing small and nifty command line tools for the HIVE blockchain. Instead, it is aimed primarily at writing middleware, layer-two applications, and bots for HIVE that need to work nicely with Python web frameworks like aiohttp (my personal favorite), Sanic and Starlette, and/or need to communicate asynchronously with other chains or databases (or combinations of the two, such as my favourite, FlureeDB, using @aioflureedb).

The reason that I'm building aiohivebot isn't primarily to build a new Python HIVE library for this purpose; it is to be part of a proof-of-concept stack for hash-based signatures.

The stack starts with the HIVE blockchain and the Python CoinZdense implementation (also still under construction) as its foundation. On top of that is the aiohivebot library, which should bind HIVE and the CoinZdense hash-based signatures together, for now in a piggybacking and proof-of-concept way, as a real implementation will require updates to the HIVE core, and we are still quite a bit removed from that point. First we need a proof of concept. I will get into this later, as this is the main subject of this post.

On top of aiohivebot, I aim to first write a port of the HIVE Archaeology bot to aiohivebot. This is a really simple bot that watches for its owner (me) upvoting expired content, and then creates and upvotes a comment with the original post's author as main beneficiary.

In the future I hope to expand this stack with something more serious than this silly bot, but for a first POC, this should do.

Some details on hash based signatures

Normal classical signing on HIVE and most other blockchains uses a signing algorithm called the Elliptic Curve Digital Signature Algorithm, or ECDSA for short. ECDSA is a great and amazing algorithm: the signatures are short, signing is fast and consistent in speed, and signing keys are stateless. All amazing properties. The alternative signing that CoinZdense aims to provide is none of these things. Signatures are big and clunky, signing keys are stateful, and once every few hundred or few thousand transactions, the time to sign the next transaction goes up by quite a bit, because extra operations are needed to enable the next few hundred or few thousand transactions. So why would we want to use these so obviously much worse signatures?

Well, Quantum Computing (QC) is slowly but surely gaining ground, and at some point in the not too near but not too distant future, ECDSA will stop being secure, because the size of general-purpose quantum computers will have exceeded a threshold that makes it possible to reconstruct the private signing key from either the public signing key (which on HIVE is very much public, as it is part of an account's public data), or from just a single signature, because ECDSA signatures with a special construct allow the public key to be reconstructed from the signature. For more info on this, see this video.

So because of QC, in the not so distant future we want to start protecting Web 3.0 blockchains like HIVE with these clunky stateful signatures. For now we don't want to touch the core blockchain yet; we just want to test the concepts by piggybacking hash-based signatures onto HIVE in a non-enforced but still verifiable way, and the tech stack I'm working on, in a rather slow and discontinuous way, aims to allow for that.
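To make "big and stateful" a bit more concrete, here is a minimal sketch of a textbook Lamport one-time signature. This is only an illustration of the general hash-based idea and is not the CoinZdense construction; the class and function names are made up for this example.

```python
import hashlib
import secrets


def H(data: bytes) -> bytes:
    """The only primitive needed: a cryptographic hash function."""
    return hashlib.sha256(data).digest()


def digest_bits(message: bytes) -> list:
    """The 256 bits of the message digest, most significant bit first."""
    digest = H(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


class LamportKey:
    """Toy Lamport one-time key (illustration only, not CoinZdense)."""

    def __init__(self):
        # One pair of random secrets per digest bit.
        self._secret = [(secrets.token_bytes(32), secrets.token_bytes(32))
                        for _ in range(256)]
        # The public key is the hash of every secret.
        self.public = [(H(a), H(b)) for a, b in self._secret]
        # This flag is the "state": signing twice leaks too many secrets.
        self.used = False

    def sign(self, message: bytes) -> list:
        if self.used:
            raise RuntimeError("one-time key already used")
        self.used = True
        # Reveal one secret of each pair, selected by the digest bit.
        return [self._secret[i][bit]
                for i, bit in enumerate(digest_bits(message))]


def verify(public: list, message: bytes, signature: list) -> bool:
    """Hash each revealed secret and compare with the public key."""
    bits = digest_bits(message)
    return len(signature) == 256 and all(
        H(sig) == public[i][bit]
        for i, (sig, bit) in enumerate(zip(signature, bits)))
```

A signature in this toy scheme is 256 values of 32 bytes each, so 8192 bytes, and the key refuses to sign a second message. Real many-time schemes stack lots of such one-time keys under a single public key, which is where the signing index comes from.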

Account json_metadata as poor man's pubkey storage

As I demonstrated already with my little HIVE CoinZdense Disaster Recovery tools repo, it is possible, though not ideal, to use account json_metadata as a place to piggyback something like a hash-based account recovery key. Nothing is currently enforcing the security of this data, but in the event of a real QC attack against HIVE, timestamps may be used for actual account recovery.

As an example, here is my current account json_metadata:

{

"beneficiaries" : [],

"coinzdense_disaster_recovery" : {

"key-a" : "QRKB6rzqPbDLWbMQNi9iqFbofRLBBeJscxmzAQU75",

"sig-a" : "QH1SwjM6zJuT8hwurq3nz2vqpHdaRxoovKwemPwTEjT8BWrrsP3Qjg6N6sjfgdNSJVoP6djNEc6NferEDx4y2J2WVF3uhLia6A"

},

"profile" : {

"about" : "Mythpunk fiction. Python. C++. Computer Forensics. Computational Statistics. Cryptography. LCLΩ6HP, Entomophagy, N=1 experiments. Engineering.",

"cover_image" : "https://pbs.twimg.com/profile_banners/3021818589/1424700326",

"location" : "Netherlands",

"name" : "Rob J Meijer",

"profile_image" : "https://pbs.twimg.com/profile_images/881738626983505920/L94HR_Bv_400x400.jpg",

"website" : "https://steemit.com/bannerpost/@pibara/master-banner-post-7-18-2018"

}

}

In this example there is a one-time-signature public key (key-a) and an ECDSA signature (sig-a) that is created with the user's ACTIVE key. A similar construct should be possible with a full many-uses CoinZdense public key.
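As a rough sketch of how a client could read this back: the account object returned by `condenser_api.get_accounts` carries `json_metadata` as a JSON string, so it needs a second decode. The helper names below are made up for this example, and the actual HTTP call is left out on purpose.

```python
import json
from typing import Optional


def get_accounts_request(account: str) -> dict:
    """JSON-RPC body for condenser_api.get_accounts (POST it to an API node)."""
    return {
        "jsonrpc": "2.0",
        "method": "condenser_api.get_accounts",
        "params": [[account]],
        "id": 1,
    }


def recovery_entry(account_obj: dict) -> Optional[dict]:
    """Extract the disaster recovery entry from one account object.

    Returns None when the metadata is empty, is not valid JSON, or does
    not contain both a "key-a" and a "sig-a" field.
    """
    raw = account_obj.get("json_metadata") or "{}"
    try:
        meta = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(meta, dict):
        return None
    entry = meta.get("coinzdense_disaster_recovery")
    if not isinstance(entry, dict):
        return None
    if "key-a" not in entry or "sig-a" not in entry:
        return None
    return entry
```

Note that this only reads the data. Checking that sig-a really is a valid ACTIVE-key signature over key-a is a separate step that is not shown here.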

Please note that these proof-of-concept keys will be just that: I'll be using a coinzdense_poc key, and the keys will live in a different cryptographic namespace from any eventual CoinZdense pubkeys.

It is important to note that CoinZdense keys will need an additional key/value layer by design because of subkey management. In theory this setup could create race conditions, but for now, while we are not building a layer-2 on multiple subkeys, this shouldn't become an issue yet. Preventing race conditions in subkey registration, though, should be a consideration when proposing HIVE core changes in the future.

Adding an extra custom_binary operation to transactions

When a bot, middleware or L2 wants to create a comment, set comment options, upvote a post, or do anything else that uses the user's signing key, one or more broadcast operations will get grouped into a transaction.

When the transaction is composed, a call to database_api.get_transaction_hex will return a hexadecimal representation of what is supposed to get signed with the user's posting or active signing key.

But we aren't going to do that yet. At least not with ECDSA. Instead we sign it with the CoinZdense POC hash-based signing key, and use the result to add either a custom_json or a custom_binary broadcast operation to the existing transaction.

Then, if needed, we add some extra state in an additional custom_json (this should only be needed when this transaction reaches a threshold in the number of transactions, or potentially when it's about to get reached). More about this in the next section.

Now, using the expanded transaction, we call database_api.get_transaction_hex a second time, sign the extended transaction (this time with the regular ECDSA key, as the chain still requires that) and broadcast it to the chain.
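The two-pass flow could be sketched roughly like this. The op id `coinzdense_poc_sig`, the hex encoding of the signature, and the `get_hex` / `pq_sign` callables are all assumptions for illustration; the stand-ins at the bottom only exist so the sketch runs without a node or a real signing key.

```python
import hashlib
import json
from typing import Callable

POC_OP_ID = "coinzdense_poc_sig"  # hypothetical custom_json id


def add_poc_signature(tx: dict, account: str,
                      get_hex: Callable[[dict], str],
                      pq_sign: Callable[[bytes], bytes]) -> dict:
    """Return a copy of tx with a piggybacked hash-based signature op."""
    # Pass 1: hex of the transaction as originally composed.
    tx_hex = get_hex(tx)
    # Sign that with the hash-based key instead of the ECDSA key.
    pq_signature = pq_sign(bytes.fromhex(tx_hex))
    poc_op = {
        "type": "custom_json_operation",
        "value": {
            "required_auths": [],
            "required_posting_auths": [account],
            "id": POC_OP_ID,
            "json": json.dumps({"sig": pq_signature.hex()}),
        },
    }
    extended = dict(tx)
    extended["operations"] = list(tx["operations"]) + [poc_op]
    return extended


# Stand-ins for the node call and the hash-based signer.
def fake_get_hex(tx: dict) -> str:
    return json.dumps(tx, sort_keys=True).encode().hex()


def fake_pq_sign(data: bytes) -> bytes:
    return hashlib.sha256(b"poc" + data).digest()


vote = {"type": "vote_operation",
        "value": {"voter": "alice", "author": "bob",
                  "permlink": "a-post", "weight": 10000}}
tx = {"ref_block_num": 1, "ref_block_prefix": 2,
      "expiration": "2024-01-01T00:00:00",
      "operations": [vote], "extensions": [], "signatures": []}

extended = add_poc_signature(tx, "alice", fake_get_hex, fake_pq_sign)
# Pass 2: this second hex is what gets signed before broadcasting.
final_hex = fake_get_hex(extended)
```

Passing the node call and the signer in as callables keeps the sketch independent of any specific client library. In a real client, `get_hex` would wrap the database_api.get_transaction_hex call.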

Account json_metadata for stateless clients

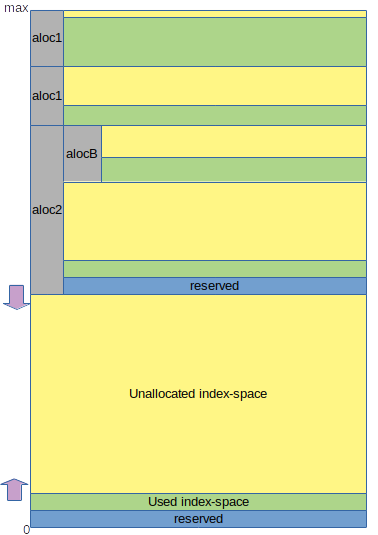

As mentioned before, hash-based signatures aren't stateless. They are like a resource that gets used up, both one signature at a time and, in the case of subkey management, sometimes with whole chunks of the resource getting allocated to a different subkey.

As mentioned before, keys and subkeys can be piggybacked on account json_metadata (with some caveats regarding future race conditions that the POC can't solve). But there is more state: state about which parts of the key have either been used up or been allocated to subkeys. Part of this state can be stored client side, and earlier experiments did exactly that. Still, it is desirable to make the POC, even with everything piggybacked on extra custom_json and custom_binary operations, as close to the eventual real thing as possible. As such, we will try to make it so that the only local state the client stores is there for speed. A client that starts with just the private keys may start up pretty darn slowly, but it will work just fine, without any of the security risks associated with index re-use of hash-based signing keys.

So what is the main state? At its core, it is the index to be used in the next signature. This index will increment by one every transaction until the signing key is expended, which with the right dimensioning should roughly be one minute before never.

This state will exist per subkey.

Next to this state, it is important to realize that in CoinZdense subkeys can be revoked, but not directly. Subkeys are revoked through the invalidation of unused index space. Basically, every transaction invalidates one single index in the 2^64-sized index space.
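A quick back-of-the-envelope calculation shows how far away "never" is. The signing rate is an assumption picked purely for illustration, and the function name is made up for this example.

```python
INDEX_SPACE = 2 ** 64
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def years_until_exhausted(size: int, signatures_per_second: float) -> float:
    """Years needed to use up `size` indices at a constant signing rate."""
    return size / signatures_per_second / SECONDS_PER_YEAR


# Assumed rate: a (very busy) 1000 signatures per second, non-stop.
full_key_years = years_until_exhausted(INDEX_SPACE, 1000)
print(f"{full_key_years:.3g} years")
```

Even at that rate the full index space lasts for hundreds of millions of years. A subkey only gets a slice of that space though, so dimensioning the slice per subkey is where the real care is needed.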

We have a choice. We can immediately move this to a full L2 implementation, where everything is broadcast on a per-subkey level and the L2 is responsible for keeping the complete invalidation map up to date and mostly hidden. Or we can make our proof-of-concept library overextend itself for now and provide a full, up-to-date map for all subkeys combined from any subkey, still allowing for validation, but keeping the revocation and invalidation in the open and outside of the (not currently existing) L2.

My current first instinct is to go for this latter option, mostly because I feel that putting it in an L2 right now may keep it in an L2, which I feel is a bad idea. These invalidation maps should eventually end up in the core, so keeping them an open kludge for now may be a good idea, because it makes the fact that it is a temporary piggyback kludge very obvious. I hope I'm making sense.

Right now my ideas regarding posting the invalidation map and the signing index in custom_json operations are as follows:

- Subkey signing indexes get updated in the account json_metadata when a new transaction is done with the subkey and no transaction was done for that subkey for 4096 blocks or more.

- The account json_metadata will, next to the index, contain the pre-broadcast head block number for when the subkey index was updated.

- When any subkey index space gets revoked, or when a new subkey gets created and signed, the client overextends itself and calculates and posts the invalidation map for the full key, as if the client were a single-node L2.

- In the same transaction where the invalidation map is posted in a custom_json, the account json_metadata gets updated with a reference to the last head block number.
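The first two rules above could be sketched like this. The `SubkeyState` class, the `index_head` field name and the exact bookkeeping are assumptions for illustration, not something that is implemented yet.

```python
from dataclasses import dataclass
from typing import Optional

IDLE_BLOCKS = 4096  # idle period after which json_metadata gets refreshed


@dataclass
class SubkeyState:
    index: int         # next unused signing index for this subkey
    last_tx_head: int  # pre-broadcast head block of the previous transaction


def register_transaction(state: SubkeyState, head_block: int) -> Optional[dict]:
    """Account for one signed transaction on this subkey.

    Returns the fields to write to the account json_metadata when the
    subkey was idle for IDLE_BLOCKS or more, otherwise None.
    """
    idle = head_block - state.last_tx_head
    state.index += 1                # one index is used up per transaction
    state.last_tx_head = head_block
    if idle >= IDLE_BLOCKS:
        # "index_head" is a hypothetical field name for the block number.
        return {"index": state.index, "index_head": head_block}
    return None
```

With HIVE's three-second blocks, 4096 blocks is a bit under three and a half hours, so a busy subkey would rarely touch the account json_metadata at all.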

This would make the account JSON possibly look something like this:

{

"beneficiaries" : [],

"coinzdense_poc" : {

"invalidation_head": 89066372,

"subkeys": {

"F17B44958C3AAA94198DA8A89994F6AC": {

"path": ["ACTIVE"],

"offset": 16777216,

"size": 251658240,

"index": 119,

"pubkey": "QRKB6rzqPbDLWbMQNi9iqFbofRLBBeJscxmzAQU75",

"sig-a" : "QH1SwjM6zJuT8hwurq3nz2vqpHdaRxoovKwemPwTEjT8BWrrsP3Qjg6N6sjfgdNSJVoP6djNEc6NferEDx4y2J2WVF3uhLia6A"

},

"ABB1956F1970AAAAA4CDA91765AC5555": {

"path": ["ACTIVE.POSTING"],

"offset": 268435456,

"size": 1879048192,

"index": 22783,

"creation_head": 89060013

},

"C1254567ABCFFB418907986038909CDD": {

"path": ["ACTIVE.POSTING.comment","ACTIVE.POSTING.vote"],

"offset": 2147483648,

"size": 16384,

"index": 14,

"creation_head": 89066465

}

}

},

"profile" : {

}

}

Notice that big things are made findable by referring to the head block number at broadcast time. This means that the client can find the actual invalidation data and the subkey pubkeys by looking at blocks starting at the indicated block.
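Finding the data from such a head value could look roughly like the sketch below. The `get_ops` callable stands in for whatever returns the operations of one block (for example a wrapper around a block API call), and the op id and the scan limit are assumptions for illustration.

```python
import json
from typing import Callable, List, Optional, Tuple


def find_custom_json(get_ops: Callable[[int], List[dict]],
                     start_block: int, account: str, op_id: str,
                     max_blocks: int = 4096) -> Optional[Tuple[int, dict]]:
    """Scan forward from start_block for the first matching custom_json.

    Returns (block_number, decoded_json), or None when nothing matching
    is found within max_blocks blocks.
    """
    for block_num in range(start_block, start_block + max_blocks):
        for op in get_ops(block_num):
            if op.get("type") != "custom_json_operation":
                continue
            value = op["value"]
            signers = (value.get("required_auths", [])
                       + value.get("required_posting_auths", []))
            if value.get("id") == op_id and account in signers:
                return block_num, json.loads(value["json"])
    return None
```

Because the head block number is taken just before broadcasting, the operation itself should land in one of the first few blocks after it, so in practice the scan should be short.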

An invalidation custom_json matching the above could look something like:

{

"top_key": "F17B44958C3AAA94198DA8A89994F6AC",

"invalidated": [

[0, 16777215],

[16777216, 16777334],

[268435456, 268458238],

[2147483648, 2147483660]

]

}
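A validator could check a signing index against such a map with a simple binary search. This is a minimal sketch that assumes the ranges are inclusive, sorted and non-overlapping, as they are in the example above; the function name is made up.

```python
from bisect import bisect_right

# The "invalidated" ranges from the example, as (first, last) inclusive.
INVALIDATED = [
    (0, 16777215),
    (16777216, 16777334),
    (268435456, 268458238),
    (2147483648, 2147483660),
]


def is_invalidated(index: int, ranges: list) -> bool:
    """True when index falls inside one of the sorted, disjoint ranges."""
    starts = [first for first, _ in ranges]
    # Position of the last range that starts at or before index.
    pos = bisect_right(starts, index) - 1
    return pos >= 0 and ranges[pos][0] <= index <= ranges[pos][1]


print(is_invalidated(16777334, INVALIDATED))  # last used index of the first subkey
print(is_invalidated(16777335, INVALIDATED))  # the index right after it
```

A signature made with an index that is already in the map would be a sign of either index re-use or the use of a revoked part of the key.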

The race condition

For the archaeology bot, race conditions won't be a problem. There will be only one subkey, eventually one like the multi-path one in the example JSON, but we are quite a bit removed from there. But when a user actually starts to use multiple subkeys in parallel on different bots, there is a bit of a risk in using the account json_metadata to store state. For now the impact seems to be relatively OK, though more thought may be needed on how to handle it. It seems that race condition problems should at least be detectable, and recovery strategies might be devised, but deeper thought is needed.

Feedback?

For now these piggybacking ideas seem OK from my perspective, and I would like to make a start at implementing them with my current stack of projects. Doing so only from my own thoughts and preliminary conclusions seems a bit tricky, though. I would prefer some feedback from the technical HIVE community. So if you read this and think you might have something useful to say, even if you are unsure or something doesn't quite add up in my text above: please comment on this post. I need feedback on my piggybacking plans for aiohivebot.

Help with funding

If you think I'm doing good work with these three projects and you would like to support me and help me allocate more time to aiohivebot, coinzdense and the hive archaeology bot port, please have a look at my donations and sponsors page, or sponsor my projects on GitHub.