Graphics have made great strides over the years, but in recent times, the resolution increases offered by displays have become rather challenging. Let's look at the PlayStation series. The first PlayStation supported full 480i rendering, though with the benefit of hindsight, we know some games actually ran at 480x360 interlaced. 6 years later, the PlayStation 2 brought 480p rendering across the board. A vast majority of games ran at 640x480 - certainly an improvement over the first PlayStation, but nothing dramatic: on average, a 2x increase in resolution. Most of the PS2's much more powerful hardware was used to improve graphics dramatically.

7 years later, the PlayStation 3 released with Full HD support, though we know that most games ran at resolutions around 1280x720 instead. This time, it's a 3x increase in pixel count between generations. So why did most PS3 games run at 1280x720 instead of 1920x1080? Simple - game developers had to find the right balance between improving graphical fidelity, maintaining stable framerates, and raising resolution. By and large, 720p was the standard for this generation.

6 years after that came the PlayStation 4. This time, the standard was 1920x1080, and for the first time, the generational increase in resolution actually shrank, to ~2x. But there's a catch - 4K was just around the corner, and a mere 3 years later, the PlayStation 4 Pro released targeting 4K - a further 4x bump over the PlayStation 4.
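The generational jumps quoted above can be checked by comparing pixel counts. Here is a quick sketch, assuming "4K" means 3840x2160 (the text doesn't spell that out) and leaving out the first PlayStation, since its resolutions varied by game. The function names are just for illustration.

```python
# Resolutions quoted in the text for each generation (width, height).
# 3840x2160 for "4K" is an assumption; the article only says "4K".
RESOLUTIONS = [
    ("PlayStation 2", 640, 480),
    ("PlayStation 3", 1280, 720),
    ("PlayStation 4", 1920, 1080),
    ("PlayStation 4 Pro", 3840, 2160),
]


def pixel_count(width, height):
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


def generation_ratios(resolutions):
    """Return (name, ratio) pairs comparing each entry with the previous one."""
    ratios = []
    for (_, pw, ph), (name, w, h) in zip(resolutions, resolutions[1:]):
        ratios.append((name, pixel_count(w, h) / pixel_count(pw, ph)))
    return ratios


for name, ratio in generation_ratios(RESOLUTIONS):
    print(f"{name}: {ratio:.2f}x the pixels of the previous entry")
# PlayStation 3: 3.00x the pixels of the previous entry
# PlayStation 4: 2.25x the pixels of the previous entry
# PlayStation 4 Pro: 4.00x the pixels of the previous entry
```

So the "~2x" for the PlayStation 4 is really 2.25x, and the Pro's 4K target asks for four times the pixels on hardware that is nowhere near four times as fast.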

You see where we are heading - as resolutions get higher, potential improvements in graphics and framerates have to be compromised to meet resolution demands. Before this generation, pretty much all games were rendered at a fixed resolution. Because GPU load varies from frame to frame, games of the past had pretty erratic performance. The goal this generation has thus become to render at lower resolutions when possible, while still maintaining the perception of high resolution.

As a side note, I enjoy writing these in-depth articles. They obviously take a lot of time and effort, and there's likely very little interest in these on Hive, but it helps me clarify some of my thoughts.

Dynamic resolution scaling

Dynamic resolution scaling has been the foremost innovation this generation. It's pretty simple - the resolution of the game varies from frame to frame so as to maintain a consistent frame rate. Pushing up to higher resolutions like Full HD caused developers many headaches, and this is a fine fallback option in case the game fails to hit the target frame rate.
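To make the idea concrete, here is a minimal sketch of a resolution controller. It is not how any particular game does it. It assumes GPU time is roughly proportional to the number of pixels rendered, and the names `next_scale` and `simulate`, the 16.7 ms target (60 fps) and the 0.5 to 1.0 scale range are all made up for illustration.

```python
def next_scale(scale, frame_ms, target_ms=16.7, min_scale=0.5, max_scale=1.0):
    """Pick the per-axis resolution scale for the next frame.

    Assumes GPU time is roughly proportional to pixel count, i.e. scale ** 2.
    """
    # Scale that would have hit the target exactly under that assumption.
    ideal = scale * (target_ms / frame_ms) ** 0.5
    # Move only halfway there so the resolution doesn't jump around.
    blended = scale + 0.5 * (ideal - scale)
    # Never go above native or below the chosen floor.
    return max(min_scale, min(max_scale, blended))


def simulate(costs_at_native_ms, target_ms=16.7):
    """Run the controller over per-frame GPU costs measured at native resolution."""
    scale = 1.0
    history = []
    for native_ms in costs_at_native_ms:
        frame_ms = native_ms * scale ** 2  # cost of this frame at the current scale
        history.append((scale, frame_ms))
        scale = next_scale(scale, frame_ms, target_ms)
    return history


# A heavy scene that would take 25 ms per frame at native resolution.
for scale, frame_ms in simulate([25.0] * 8):
    print(f"scale {scale:.2f} -> {frame_ms:.1f} ms")
```

In this toy run the scale settles at around 0.82 per axis, which is about two thirds of the native pixel count, and the frame time drops back to the 16.7 ms target within a handful of frames. A light scene simply stays at 1.0.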

It's hard to pinpoint which game actually pioneered this technique, but the favourite is probably Call of Duty: Advanced Warfare. Curiously, no one was actually testing for dynamic resolution at the time, so this was only discovered retroactively. Games before it, like Killer Instinct, also had more limited variants where the resolution would temporarily drop.

The first game to hype up this feature was definitely Halo 5: Guardians. It had an incredibly flexible resolution scaler, which dynamically adjusted each axis independently, and thus succeeded in hitting 60 fps on the underpowered Xbox One.

Since then, this feature has become standard across most titles. Indeed, with the move to 4K resolutions, its use has proliferated. Not all titles feature dynamic resolution, though. Titles with cutting-edge graphics such as Metro Exodus, Forza Horizon 4 and Control, as well as the upcoming The Last of Us: Part II, opt for fixed resolutions. On a related note, the Forza games actually have a dynamic graphics settings feature, though that's the only game engine I'm aware of that offers that in lieu of dynamic resolution.

Overall, this is a great way of maintaining a consistent experience. Gamers aren't going to notice a small but temporary drop in resolution, but a drop in frame rates results in judder which is definitely noticeable.

Digital Foundry has a great video on the technique, and it's a must-watch.

Temporal reconstruction

There are various types of temporal reconstruction techniques being used today, but the basic concept is the same. The game is rendered at a lower resolution, but over time, it uses information from adjacent frames to create a higher resolution image. For example, if you're standing still, most areas of the frame remain unchanged. So, by accumulating information over time, the image can appear richly detailed even if each frame is actually rendered at a lower resolution. Of course, the drawback here is in shots with motion, which will appear lower resolution.
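Here is a toy sketch of that accumulation, under some heavy simplifications. Each frame renders only a quarter of the pixels (half the width, half the height), the sample position shifts by one pixel each frame, and the results are written into a full-resolution history buffer. Real implementations also use motion vectors and reject stale history, which this skips entirely. The `scene` function is a hypothetical stand-in for the renderer, and even image dimensions are assumed.

```python
def scene(x, y, t=0):
    """Hypothetical stand-in for the renderer: colour of native pixel (x, y)."""
    return (x * 7 + y * 13 + t) % 256


def render_native(width, height, t=0):
    """Reference image rendered at full resolution."""
    return [[scene(x, y, t) for x in range(width)] for y in range(height)]


# Offsets visited over four frames, one per pixel of each 2x2 block.
JITTER = [(0, 0), (1, 0), (0, 1), (1, 1)]


def render_low_res(width, height, frame, t=0):
    """Render at half width and half height, one sample per 2x2 block."""
    jx, jy = JITTER[frame % 4]
    image = [[scene(2 * bx + jx, 2 * by + jy, t) for bx in range(width // 2)]
             for by in range(height // 2)]
    return image, (jx, jy)


def accumulate(history, low_res, jitter):
    """Write this frame's samples into the full-resolution history buffer."""
    jx, jy = jitter
    for by, row in enumerate(low_res):
        for bx, value in enumerate(row):
            history[2 * by + jy][2 * bx + jx] = value
    return history


def reconstruct(width, height, frames, t_of_frame=lambda f: 0):
    """Accumulate several low-resolution frames into one full-resolution image."""
    history = [[0] * width for _ in range(height)]
    for f in range(frames):
        low, jitter = render_low_res(width, height, f, t_of_frame(f))
        accumulate(history, low, jitter)
    return history


static = reconstruct(8, 8, frames=4)
print(static == render_native(8, 8))  # True: four frames cover every pixel

moving = reconstruct(8, 8, frames=4, t_of_frame=lambda f: f)
print(moving == render_native(8, 8, t=3))  # False: older samples are stale
```

The second result is the motion problem in miniature. Once the scene changes between frames, three quarters of the history no longer matches what a native render would show, and that mismatch is what shows up as blur or ghosting.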

The first game to use this technique was probably Quantum Break. It rendered at 1280x720 internally, which is more of a last-generation resolution, really, but temporally upscaled that to 1920x1080. When standing still, it looked pretty good on the Xbox One. But move a bit and you'd get pretty ugly ghosting artifacts.

Over time, developers have gotten way better at this technique. They have found ways to use data even while moving, and where they can't, they cover it with smart motion blur so no artifacts are visible. The current state of the art is The Division 2's incredible temporal reconstruction feature. You can run the game at 75%-85% of the native resolution, and it's basically going to look like 4K even to the eagle-eyed, in most situations. In return, you gain a 20%-30% performance boost, effectively for free. Other games offering top-notch reconstruction include Call of Duty: Modern Warfare and Gears 5. Speaking of Gears 5, Unreal Engine 4 natively supports both dynamic resolution scaling and temporal reconstruction - so we'll definitely see wide adoption of these features.

The latest PlayStation 5 demo actually runs at 2560x1440. It still looks good at 4K because of temporal reconstruction. Check it out!

Checkerboard rendering

A related but different technique is checkerboard rendering. Here, as the name suggests, only half the pixels are rendered each frame, in a checkerboard pattern, and then temporally accumulated into a full image. This technique was born out of necessity. Sony knew their PlayStation 4 Pro was simply not powerful enough to render a native 4K image, so they pushed their developers to use checkerboard rendering instead.
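A bare-bones sketch of the pattern, just to show the mechanics. Each frame shades only the pixels on one colour of the checkerboard, alternating every frame, and the other half is filled in from the previous output. This is a simplification that ignores motion; shipping implementations do a lot more work to handle it. `shade` is a hypothetical stand-in for the real per-pixel work, and a width of at least 2 is assumed.

```python
def shade(x, y):
    """Hypothetical stand-in for the expensive per-pixel shading work."""
    return (x * 5 + y * 11) % 256


def render_half(width, height, frame):
    """Shade only the pixels on this frame's checkerboard parity."""
    parity = frame % 2
    return [[shade(x, y) if (x + y) % 2 == parity else None
             for x in range(width)] for y in range(height)]


def resolve(current, previous=None):
    """Fill the unshaded half from the previous output, else from neighbours."""
    output = []
    for y, row in enumerate(current):
        out_row = []
        for x, value in enumerate(row):
            if value is None and previous is not None:
                value = previous[y][x]  # reuse last frame's pixel
            elif value is None:
                # No history yet: average the shaded left/right neighbours.
                near = [row[nx] for nx in (x - 1, x + 1) if 0 <= nx < len(row)]
                value = sum(near) / len(near)
            out_row.append(value)
        output.append(out_row)
    return output


native = [[shade(x, y) for x in range(8)] for y in range(8)]
first = resolve(render_half(8, 8, frame=0))
second = resolve(render_half(8, 8, frame=1), previous=first)

shaded = sum(v is not None for row in render_half(8, 8, 0) for v in row)
print(shaded, "of", 8 * 8, "pixels shaded per frame")  # 32 of 64
print(second == native)  # True for a static scene after two frames
```

With a static image, two frames are enough to rebuild the full picture while shading half the pixels each time. Anything that moves between the two frames breaks that assumption, which is where the softness and checkerboard artifacts come from.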

Surprisingly, there were a few games shipping with checkerboard rendering at or soon after the PS4 Pro's launch, some examples being Rise of the Tomb Raider and Mass Effect: Andromeda. These games certainly offered noticeable improvements over the Full HD of the PS4 versions, but the flaws were pretty evident, including a slight softness, greater aliasing and some checkerboard artifacts.

The best checkerboard-like solution implemented to date remains the one in the Decima Engine, which powers Horizon Zero Dawn and Death Stranding. While it is by no means as sharp as a native 4K image, it's certainly good enough, and it cleans up some of the artifacts and aliasing at the cost of a softer, but more pleasing image.

Unfortunately, there have been some pretty bad checkerboard implementations too, the most egregious one being Red Dead Redemption 2. Digital Foundry has pretty interesting comparisons which highlight what happens when checkerboard goes bad. I don't think this technique has much of a future, and it will likely slowly die out in favour of more advanced temporal reconstruction techniques.

DLSS / machine learning reconstruction

A lot of these resolution-fudging techniques are limited to consoles. Not because they can't run on PCs, but simply because the developers never implemented them for the PC versions. Not all, of course - Gears 5 is a great example of a PC title featuring both temporal reconstruction and dynamic resolution - but such examples are fewer, though increasing in popularity over time.

Surprisingly, the most advanced of all of these techniques was actually born on the PC. DLSS is currently an Nvidia proprietary technique, but we can certainly expect AMD and game developers to experiment with similar techniques.

Machine learning reconstruction takes everything learned from temporal reconstruction, but adds another layer on top. Here, a machine learning model is trained on how games are rendered, and fills in the blanks accordingly. The first iteration of DLSS was pretty much a disaster, but the latest version, DLSS 2.0, is currently the pinnacle of reconstruction techniques.

Today, DLSS 2.0 is so good that you can basically double your framerate by rendering at half the resolution while keeping similar perceived image quality. Or, developers can choose to improve the graphics a lot, while maintaining the same framerate.

Hardware Unboxed has a remarkably detailed video highlighting the benefits of DLSS 2.0.

Variable rate shading

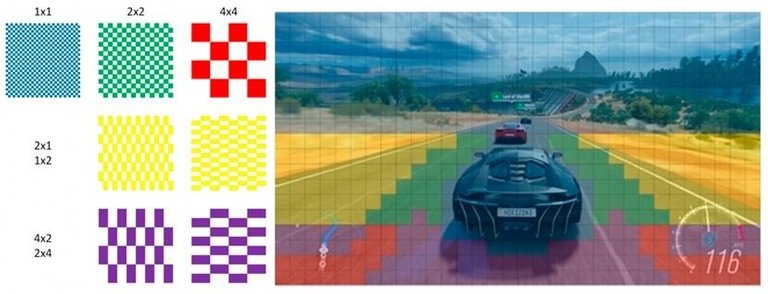

Finally, here's a truly revolutionary technique that actually rethinks what resolution means in the first place. Instead of shading every pixel at the same level of detail, VRS allows developers to vary the shading rate across different regions of the frame. Crudely, think of it this way - if there are just shadows, or a blocky object with one solid colour, why waste effort rendering it at 4K? This object can be rendered at a lower resolution while looking pretty much the same. Meanwhile, a detailed object can be rendered at full resolution.
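A rough sketch of how such a decision could be made, assuming the frame is split into 8x8 tiles and each tile gets a coarser shading rate when its brightness barely changes. The tile size, the contrast thresholds and the function names here are made up for illustration; actual games and graphics APIs choose rates in their own ways.

```python
def pick_rate(tile, low=4, high=32):
    """Choose a coarse-pixel size for a tile from its brightness contrast."""
    flat = [value for row in tile for value in row]
    contrast = max(flat) - min(flat)
    if contrast < low:
        return 4  # one shaded sample per 4x4 block
    if contrast < high:
        return 2  # one shaded sample per 2x2 block
    return 1      # full rate: every pixel shaded


def shading_rate_map(luma, tile=8):
    """Split a brightness image into tiles and pick a rate for each one."""
    rates = []
    for ty in range(0, len(luma), tile):
        row = []
        for tx in range(0, len(luma[0]), tile):
            block = [r[tx:tx + tile] for r in luma[ty:ty + tile]]
            row.append(pick_rate(block))
        rates.append(row)
    return rates


def shader_invocations(rates, tile=8):
    """Count how many samples actually get shaded with this rate map."""
    return sum((tile // rate) ** 2 for row in rates for rate in row)


# 16x16 test image: flat colour on the left, busy detail on the right.
luma = [[10 if x < 8 else (x * 37 + y * 91) % 256 for x in range(16)]
        for y in range(16)]

rates = shading_rate_map(luma)
print(rates)                               # [[4, 1], [4, 1]]
print(shader_invocations(rates), "vs", 16 * 16)  # 136 vs 256
```

In this example the flat half of the image is shaded with 8 samples instead of 128, while the detailed half keeps full resolution, so the total shading work drops to roughly half with nothing visibly lost.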

Currently, this is a nascent technology only supported by NVIDIA RTX GPUs, but it is releasing soon on next-generation consoles and AMD's RDNA 2 graphics cards for PC. I believe only Wolfenstein II, Wolfenstein: Youngblood and Gears Tactics support this feature currently. No doubt this will see some huge adoption for next-gen games though.

As we move into the next-generation consoles, all of these techniques will be pretty much common across the board. It's going to be a combination of techniques, too. Wolfenstein: Youngblood is a great example today of a game that already uses dynamic resolution, DLSS 2.0 and variable rate shading. As a result, it's one of the few games that can be played at 4K at 144 Hz with ease on an RTX 2080 Ti. I'd imagine there'll be more such techniques invented along the way, which will attempt to push the actual rendered resolution down as low as possible, while keeping the perception of resolution constant.