KEY FACTS: Google DeepMind’s Gemini 2.5 Pro Experimental, unveiled on March 26, 2025, represents a significant advancement in AI, introducing "thinking" capabilities that allow it to reason through complex problems in mathematics, science, and coding. Launched just months after Gemini 2.0, this model achieves top scores on benchmarks like the AIME 2025 (math), SWE-Bench Verified (63.8% in coding), and LMArena leaderboard, outperforming competitors with its one-million-token context window and multimodal processing of text, audio, images, and video. Available now via Google AI Studio and soon for Gemini Advanced users, it powers innovative applications while setting the stage for future context-aware AI agents.

Source: Google / X

Google DeepMind Unveils Gemini 2.5

Google DeepMind has introduced Gemini 2.5, heralded as the company’s most intelligent artificial intelligence model to date. Released on March 26, 2025, the experimental version of Gemini 2.5 Pro integrates what Google calls "thinking" capabilities, which allow the model to reason through complex problems before delivering responses. This latest development promises to reshape how AI interacts with users across diverse domains, from mathematics and science to advanced coding, setting a new benchmark in the field.

Today we’re introducing Gemini 2.5, our most intelligent AI model. Our first 2.5 release is an experimental version of 2.5 Pro, which is state-of-the-art on a wide range of benchmarks and debuts at #1 on LMArena by a significant margin.

Source

The unveiling of Gemini 2.5 comes just months after the debut of Gemini 2.0 in December 2024, showing Google DeepMind’s rapid pace of innovation. According to a blog post attributed to Koray Kavukcuoglu, CTO of Google DeepMind, the new model combines a "significantly enhanced base model with improved post-training" to achieve unprecedented performance levels. Unlike traditional AI systems that provide instantaneous answers, Gemini 2.5 is designed to mimic human reasoning by breaking down prompts into logical steps, refining its approach, and selecting the most accurate response. This "thinking" approach, Google asserts, enhances both the reliability and contextual relevance of the model’s outputs.

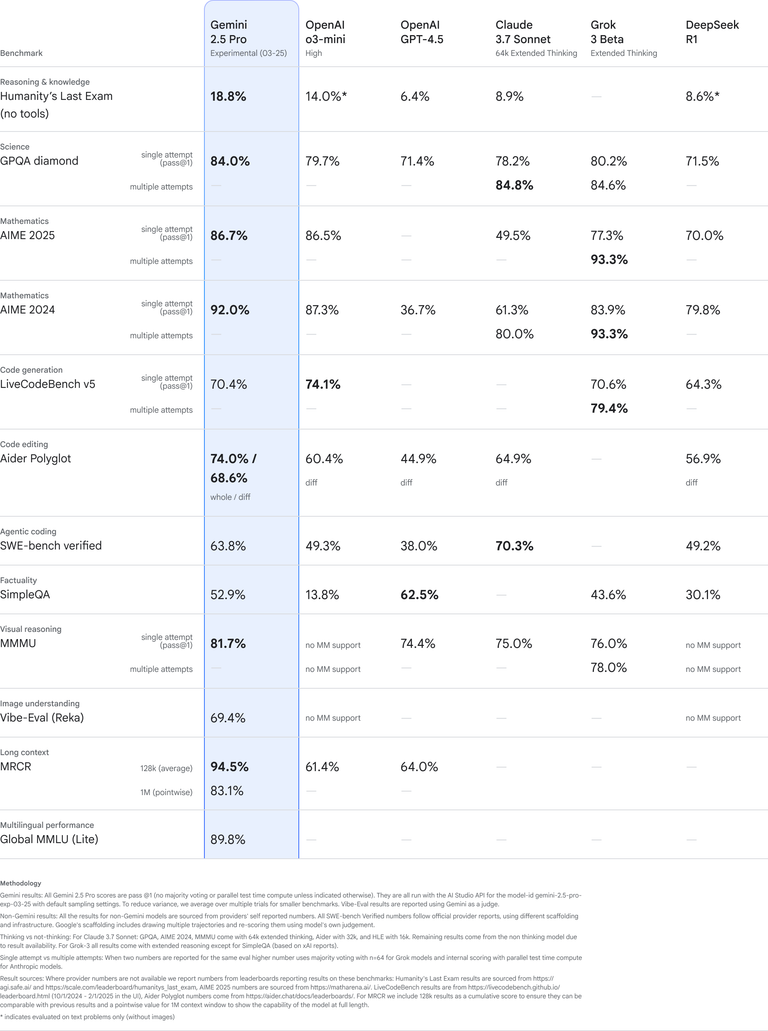

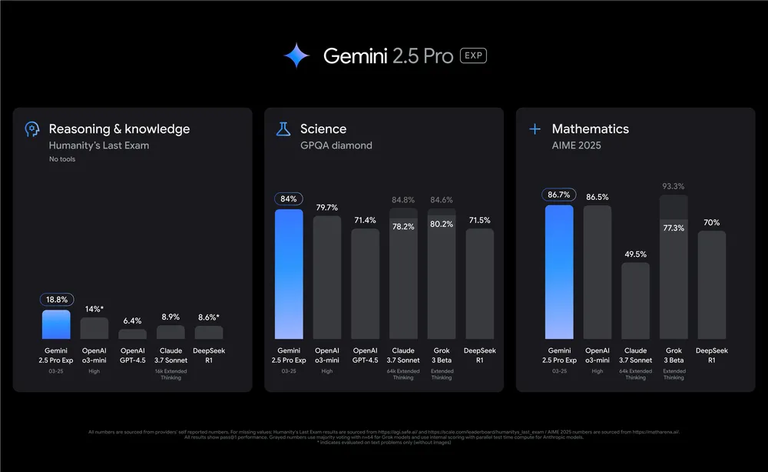

Gemini 2.5 Pro Experimental has already demonstrated its prowess, achieving state-of-the-art results across a wide range of benchmarks. In mathematics and science, it leads in evaluations such as the Graduate-Level Google-Proof Q&A (GPQA) and the American Invitational Mathematics Examination (AIME) 2025, outperforming competitors without relying on costly test-time techniques like majority voting. Additionally, it scored 18.8% on Humanity’s Last Exam, a dataset crafted by hundreds of experts to test the frontiers of human knowledge and reasoning. According to Google, that is the top score on this exam among models that do not use tools.
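For readers unfamiliar with majority voting, the sketch below shows the general idea: a model is sampled several times on the same question and the most frequent final answer is kept, which multiplies the inference cost by the number of samples. This is a generic illustration, not Google's evaluation code; the function name `majority_vote` and the sample answers are hypothetical.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most common answer and the share of samples that agree with it."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(answers)
    winner, votes = counts.most_common(1)[0]
    return winner, votes / len(answers)


# Hypothetical final answers from five independent samples of one math problem.
samples = ["204", "204", "197", "204", "210"]
answer, share = majority_vote(samples)
print(answer, share)  # prints: 204 0.6
```

A single-attempt score, which is what Google reports here, corresponds to drawing one sample and skipping the vote entirely.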

Updated March 26 with new MRCR (Multi Round Coreference Resolution) evaluations. Source: Google

The model’s coding capabilities are equally remarkable. On SWE-Bench Verified, an industry-standard evaluation for agentic coding, Gemini 2.5 Pro scored 63.8% with a custom agent setup. Google says the model excels at creating visually compelling web applications, developing agentic code, and transforming and editing existing code. It highlighted this strength with a demonstration in which the model generated a fully functional endless runner game from a single-line prompt, producing executable code in moments.

Gemini 2.5 also debuted at the top of the LMArena leaderboard, surpassing competitors by a significant margin. This achievement reflects its advanced reasoning and multimodal capabilities, allowing it to process and respond to inputs across text, audio, images, and video with a one-million-token context window. This expansive context capacity enables the model to analyze vast datasets and tackle intricate problems, further distinguishing it from its predecessors.

Source: Google

The introduction of Gemini 2.5 points to a strategic shift for Google DeepMind. Koray Kavukcuoglu, CTO of Google DeepMind, stressed that Gemini 2.5 is the cornerstone of Google’s future AI endeavors, with plans to integrate its reasoning abilities into upcoming models and services. In his words:

"...with Gemini 2.5, we've achieved a new level of performance by combining a significantly enhanced base model with improved post-training. Going forward, we’re building these thinking capabilities directly into all of our models, so they can handle more complex problems and support even more capable, context-aware agents."

The concept of a "thinking model" goes beyond simple classification or prediction. As Google explains, it involves analyzing information, drawing logical conclusions, incorporating context and nuance, and making informed decisions. These are skills that mirror human cognitive processes. This approach has already borne fruit in Gemini 2.5 Pro Experimental, which showcases enhanced accuracy and explainability. For instance, users can see the model’s thought process in a "Show thinking" section, a feature carried over from the Gemini 2.0 Flash Thinking model, providing transparency into how it arrives at its answers.

Building on the multimodal foundation of the Gemini family, Gemini 2.5 Pro Experimental excels at integrating and processing diverse data types. Its ability to handle text, audio, images, and video within a single framework makes it a versatile tool for real-world applications. Google showcased this capability through a series of demonstrations, including the creation of an interactive animation of "cosmic fish" from a simple prompt, a simulation of fractal patterns in a Mandelbrot set, and a bubble chart visualizing economic and health indicators over time. These examples highlight the model’s potential to bridge creative and analytical tasks seamlessly.

For developers, Gemini 2.5 Pro offers a powerful resource. Available now through Google AI Studio, the experimental version allows coders to experiment with its features for free, while Gemini Advanced users will soon access it via a model dropdown on desktop and mobile. Enterprises can anticipate its integration into Google Cloud’s Vertex AI platform, broadening its reach into professional settings. Google’s commitment to accessibility is evident in its swift rollout, ensuring that developers and users alike can harness the model’s capabilities without delay.
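As a rough sketch of what trying the model from code could look like, the snippet below builds a request for the Gemini API's `generateContent` REST endpoint using only the Python standard library. The model identifier `gemini-2.5-pro-exp-03-25` and the helper names `build_request` and `ask` are assumptions made for illustration; check Google AI Studio for the current model name and create an API key there before sending anything.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
# Assumed identifier for the experimental release; verify it in Google AI Studio.
MODEL_ID = "gemini-2.5-pro-exp-03-25"


def build_request(prompt, api_key, model=MODEL_ID):
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_ROOT}/models/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def ask(prompt, api_key):
    """Send the prompt and return the text of the first candidate reply."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        reply = json.load(resp)
    return reply["candidates"][0]["content"]["parts"][0]["text"]


# Inspect the request without touching the network ("YOUR_API_KEY" is a placeholder).
request = build_request("Write an endless runner game in JavaScript.", "YOUR_API_KEY")
print(request.get_method(), request.full_url)
```

Calling `ask(prompt, key)` with a real key performs the network request. Google's official SDKs wrap the same endpoint and are likely the more convenient choice for anything beyond a quick experiment.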

Gemini 2.5 enters a fiercely competitive AI landscape, pitting it against models like OpenAI’s GPT-4.5, Anthropic’s Claude 3.7 Sonnet, and DeepSeek’s R1. Its emphasis on reasoning and coding positions it as a formidable contender, particularly in STEM fields where precision and problem-solving are paramount. The model’s ability to outperform rivals on key benchmarks without test-time techniques such as majority voting, which add computational cost, further underscores its efficiency.

Google DeepMind is soliciting feedback on Gemini 2.5 so it can keep improving the model’s capabilities and make it more helpful. The release of Gemini 2.5 Pro Experimental is a bold step toward smarter, more intuitive artificial intelligence.


Let's Connect

Hive: inleo.io/profile/uyobong/blog

Twitter: https://twitter.com/Uyobong3

Discord: uyobong#5966
