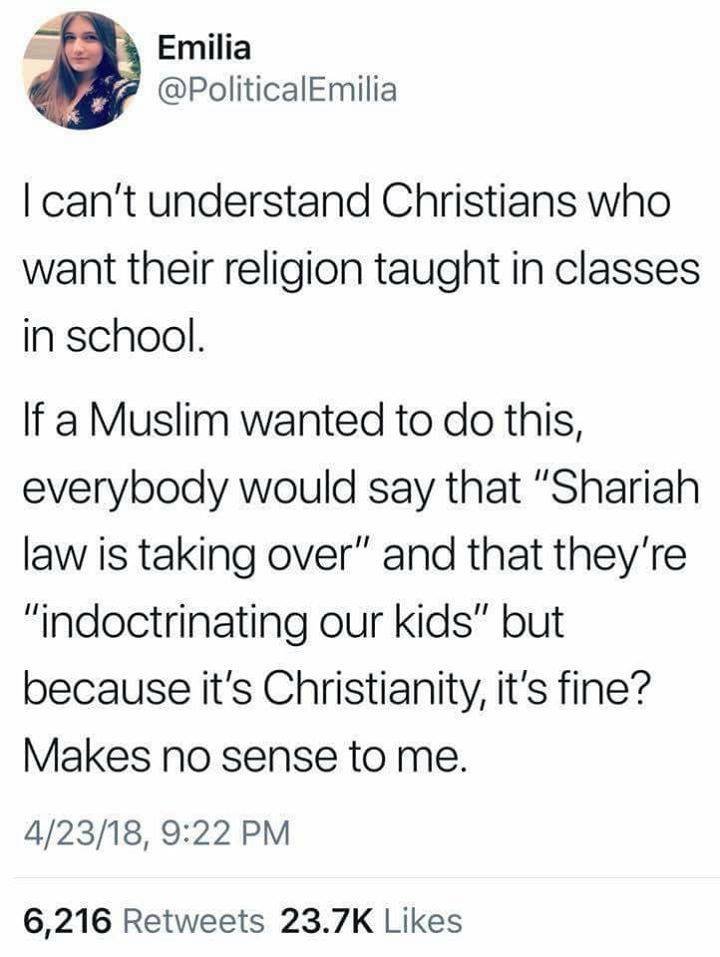

Republicans are not for separation of Church and State when it comes to Christianity. It's astounding how hypocritical they really can be.

We have an obligation and a right to take our Christian views into politics, education, and every arena of life. Muslims use their faith to oppress everyone else. Christians use their faith to liberate others. Whether it was slavery then or abortion now. The most oppressed minority in the world are unborn children. Also, by bringing in Christian morality, we can free others from sin by showing how destructive it is and showing why living a moral life gives them a better life. It shouldn't be hard to figure out--is it better to have 4 children by 4 different fathers or is it better to wait until you're married to have sex?

Wow. Fucking wow. If you can't see what you just wrote as the oppression of religion you described in sentence 2, you're delusional.

It's not an oppression of a religion. It's speaking the truth of a religion. In Saudi Arabia they recently crucified someone. They behead Christians. In the Muslim world they throw homosexuals off tall buildings. It's a standard practice of Islam that they sexually mutilate young girls. If you think telling the truth is oppression then you're the one who is delusional.

Once again, someone says that Christians are hypocritical without even knowing what it means. It means to claim a moral standard but act in opposition to it. Like preaching morality but engaging in immorality. That has nothing to do with Christians wanting Christianity taught in schools. In fact, you singled out Christians for oppression. You're not saying Muslims, atheists, Sikhs, Buddhists, or Hindus have no right to have their religions taught in school. In fact, they are taught in schools. Only Christianity is singled out for oppression.

But we know why that is. It's because, as John said,

(John 3:19 NIV) This is the verdict: Light has come into the world, but men loved darkness instead of light because their deeds were evil.