Say what you see - a python JeVois and Raspberry test

The camera is connected to my Raspberry Pi, which says aloud what the object-detection camera sees, using a Python script that handles Text-to-Speech. A beginner test and tutorial.

I acquired a JeVois smart camera very recently. JeVois is a very small webcam with an embedded nano-computer that comes with recent machine vision algorithms, ready to use. It handles many tasks, such as object detection and recognition (YOLO, Darknet), face detection, road navigation (autonomous driving), ARcode and barcode recognition, and a few others.

A couple of projects are already using JeVois in fairly complex robotics contests, coupling the little vision sensor to Arduinos, motors and other hardware.

As I am no engineer, I just wanted to give it a try and see how far I could motivate my children to use it in basic toy projects. So this article is meant to be shared among beginners with basic knowledge of Raspberry Pi boards and Python scripting (like me ;-)

As a very first experiment, I aimed to make a little machine that would try to guess the name of the object we put in front of its eye (the JeVois camera).

This could easily be demonstrated with the built-in JeVois demos, but I wanted to control the process from a Raspberry Pi and a small Python script, in order to get acquainted with it and be ready for further development ideas.

So here is the recipe:

Requirements:

- A JeVois camera with the latest default image file. The camera will be connected via USB to a Raspberry Pi 3. I am currently using JeVois image version 1.7.1 (February 2018).

- A Raspberry Pi 3 with the latest image (here the Stretch release).

- A little speaker connected to the analogue audio output of the Raspberry Pi (I believe you can use the HDMI sound output if your monitor supports it).

The run-book is the following:

- Adding some software to your rpi3:

  - Minicom, in order to access JeVois for configuration purposes.

  - PicoTTS, in order to give a voice to the rpi3.

- Configuring the JeVois camera with no video output, so that it automatically starts sending messages in "object recognition" mode to the rpi3 over USB.

- Writing a small Python script on the Raspberry Pi the JeVois is connected to, which reads the messages from the camera and says them out loud on the audio output via a text-to-speech module.

Step 1. Additional software that must be installed on the Raspberry Pi 3

In order to access JeVois, it is recommended to install at least a terminal program such as Minicom.

On the rpi, open a terminal and type:

sudo apt-get install minicom

To make your rpi speak, we need Text-To-Speech software. Several options are possible here. I chose PicoTTS because it runs locally, suits the rpi, supports multiple languages, has a voice that is still robotic but better than espeak and, at this stage, is straightforward to use from Python 2 or 3.

To install PicoTTS, just type the following at your rpi prompt:

sudo apt-get install libttspico0 libttspico-utils

Nota Bene: On your rpi3, check which channel (HDMI or analogue audio) the audio signal is routed to. As I am using the audio jack, I needed to set this to analogue. The easiest way is to click on the speaker icon on the rpi desktop and change the setting there.
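Before going further, it can help to confirm that the tools installed in this step are actually available. The following is only a minimal sketch: the helper name `missing_tools` is my own invention, and it assumes that `minicom`, `pico2wave` (from libttspico-utils) and `aplay` are the executables the rest of this tutorial calls.

```python
import shutil

# Command-line tools this tutorial relies on (assumed executable names).
REQUIRED_TOOLS = ["minicom", "pico2wave", "aplay"]


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the names from `tools` that cannot be found on the PATH."""
    return [name for name in tools if which(name) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing: " + ", ".join(missing))
    else:
        print("All tools found.")
```

If something is reported as missing, re-run the corresponding apt-get command above.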

Step 2.1 Accessing JeVois as an external drive

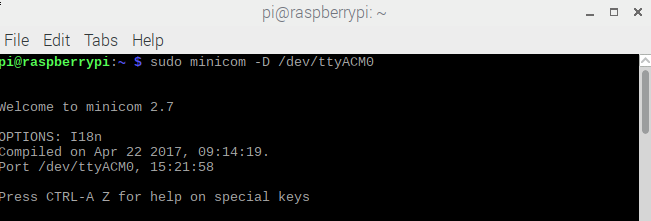

Connect to JeVois using Minicom. From your rpi prompt, type:

sudo minicom -D /dev/ttyACM0

You should see the Minicom welcome message. After it, type the following blindly (you will not see what you type on the screen):

usbsd

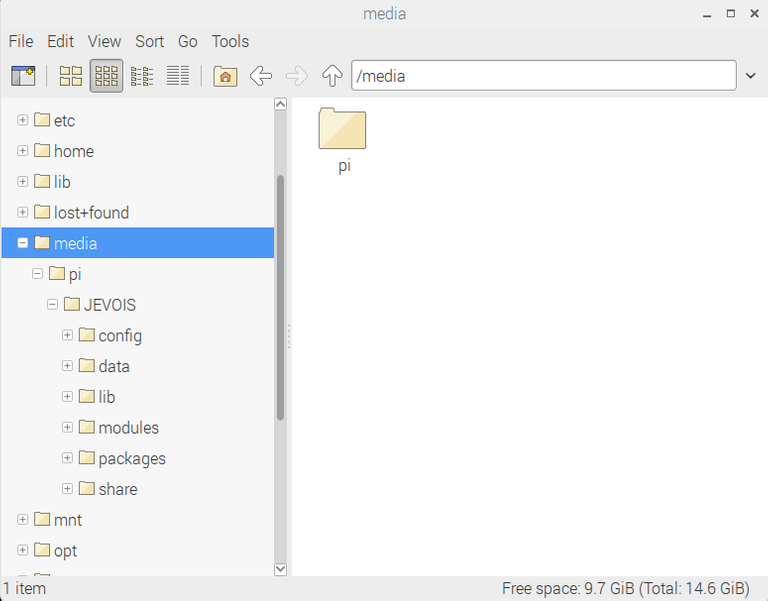

This command lets you browse JeVois like an external USB flash drive from your Raspberry Pi. So, on your rpi, open the file explorer and go to the /media folder.

Step 2.2 Editing initscript.cfg of JeVois to load DarknetSingle at startup

JeVois offers a lot of different modes it can start in. One is dedicated to reading numbers written on a sheet of paper, another to decoding QR codes, another to finding the vanishing point of a road, etc.

When the JeVois camera starts, powered over USB, it loads the last configuration it was running (by default the Intro mode). To override this, you can specify the configuration you need in the initscript.cfg file, located on the JeVois SD card under /media/pi/JEVOIS/config

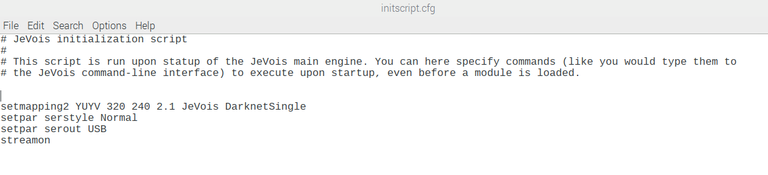

For the purpose of my little experiment, we need to edit the initscript.cfg file so that the module named "DarknetSingle" loads at startup, in a non-video mode, sending its messages over USB with the detail level set to Normal (serstyle Normal). To start the module, we also need to instruct it to start streaming (streamon).

Access the SD card and edit the initscript.cfg file that you will find in the directory /media/pi/JEVOIS/config

Add the following commands to initscript.cfg:

setmapping2 YUYV 320 240 2.1 JeVois DarknetSingle

setpar serstyle Normal

setpar serout USB

streamon

Save your changes.

Restart the JeVois camera (or eject it and plug it in again).

Exit or close Minicom.

Nota Bene: at this stage, you may instead issue another blind command at the Minicom prompt by typing "restart". If everything is fine, JeVois will restart in DarknetSingle mode and, as Minicom is still running, a couple of seconds later you should see typical messages such as DKF followed by a number appearing in the Minicom terminal.
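If you prefer not to edit the file by hand, the four commands above can also be generated from Python. This is only a sketch under assumptions: the function names are hypothetical, the default values are simply the ones used in this article, and `write_initscript` overwrites any existing initscript.cfg, so keep a backup first.

```python
import os


def build_initscript(module="DarknetSingle", vendor="JeVois",
                     width=320, height=240, fps=2.1,
                     serstyle="Normal", serout="USB"):
    """Return the initscript.cfg text used in this tutorial."""
    lines = [
        "setmapping2 YUYV {} {} {} {} {}".format(width, height, fps, vendor, module),
        "setpar serstyle {}".format(serstyle),
        "setpar serout {}".format(serout),
        "streamon",
    ]
    return "\n".join(lines) + "\n"


def write_initscript(config_dir, text):
    """Write `text` to initscript.cfg inside `config_dir` (overwrites it)."""
    path = os.path.join(config_dir, "initscript.cfg")
    with open(path, "w") as handle:
        handle.write(text)
    return path
```

With the camera mounted as described above, a call such as `write_initscript("/media/pi/JEVOIS/config", build_initscript())` would produce the same file as the manual edit.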

Step 3. Receive the messages and say them.

On the rpi3, we will now write a little Python script which does the following:

Grab the messages coming from the camera sensor: whenever the sensor detects an object, it communicates its name (or label) and the confidence in %. To perform this step we just use the serial module (import serial) and read every line emitted by the camera sensor into a variable named serial_line:

ser = serial.Serial('/dev/ttyACM0', 115200)

serial_line = ser.readline()

Check whether the content of the message is relevant for our test: when nothing is detected or, let's say, detection stays under a threshold, the module just sends a frame number which, for my test, adds no value. In my test, such a frame number message had a fixed length of 15 characters, so we filter it out with basic string manipulation.

If the message contains a label, we say it with the Text-To-Speech tool and play it through the speaker attached to the rpi3. To do this, we embed in the Python code the method used by PicoTTS, namely creating a standard .wav audio file containing the sentence we built with our string manipulation, and then playing it with the standard Linux 'aplay' command.
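The speaking step can also be written with the subprocess module instead of a shell command string, which avoids quoting problems if a label ever contains a quote character. This is a minimal sketch, not the method used in the script of this article: the helper names are hypothetical, and the `run` parameter exists only so the function can be tried without a speaker attached.

```python
import subprocess


def pico2wave_command(text, wav_path="test.wav"):
    """Build the pico2wave call that writes `text` as speech to `wav_path`."""
    return ["pico2wave", "-w", wav_path, text]


def aplay_command(wav_path="test.wav"):
    """Build the aplay call that plays `wav_path`."""
    return ["aplay", wav_path]


def say(text, wav_path="test.wav", run=subprocess.check_call):
    """Generate the speech file, then play it."""
    run(pico2wave_command(text, wav_path))
    run(aplay_command(wav_path))
```

For example, `say("I see a cup")` would create test.wav and play it on the current audio output.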

The full code, to be saved as a .py file in your home directory, is the following:

# Script for using PicoTTS and JeVois camera
import os, sys, serial, time

ser = serial.Serial('/dev/ttyACM0', 115200)

if os.path.exists("test.wav"):
    os.remove("test.wav")  # Removes the audio file if exists

while True:
    serial_line = ser.readline()  # Waits for a full line from the camera
    #print (serial_line)
    tts = str(serial_line)
    ttsL=len(tts)
    if ttsL>15:  # Longer than a frame-number message, so it carries a label
        #os.system("amixer cset numid=6 100%") # Set the audio level
        msg=" I see a "+tts[6:]
        os.system("pico2wave -w test.wav \""+msg+"\"")  # Generates the audio
        os.system("aplay test.wav")  # Play generated speech
        print (msg)  # just for debugging not needed
        time.sleep(3)
        os.remove("test.wav")  # Removes the audio file if exists
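The length test and the fixed slice in the script above are fragile, so here is a sketch of a slightly more explicit parser. It rests on assumptions I have not verified against the JeVois documentation: that each message is a short tag followed by a space and a payload, and that frame-number messages start with the DKF tag seen in Minicom. The function name and the "DKR" tag in the usage note are purely illustrative.

```python
def parse_message(raw, frame_prefix="DKF"):
    """Return the payload of a camera message, or None if there is nothing to say.

    `raw` is the bytes object returned by ser.readline().
    Lines that are empty, that start with `frame_prefix`, or that have
    no payload after the tag are ignored.
    """
    text = raw.decode("ascii", errors="replace").strip()
    if not text:
        return None
    fields = text.split()
    if fields[0] == frame_prefix or len(fields) < 2:
        return None
    # Drop the leading tag and keep the rest as the sentence to speak.
    return " ".join(fields[1:])
```

With this helper, a hypothetical line such as `b"DKR cup 45.2\r\n"` would yield the string `cup 45.2`, while a frame-number line would be skipped.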

I admit this is a very, very basic test of grabbing messages coming from the JeVois camera. But at least it shows how easily we can process these outputs.

A next step will be to implement better parsing and to start different vision modules based on what is detected, like "if you detect an envelope, try to read the number or check if you see a QR code", etc.

JeVois already contains the latest version of OpenCV, along with other algorithms and trained neural networks. On a deeper level, you can implement your own module. So the game has just started.

Here are some interesting resources I found while starting this study:

The main site

The forum

http://jevois.org/qa/index.php

Another forum using JeVois for its robotic project.

https://www.chiefdelphi.com/forums/showthread.php?threadid=159883

Read the posts of Mr torq on the following site

https://thenewstack.io/run-jevois-smart-machine-vision-algorithms-linux-notebook/

See you.
