Hello everyone, I hope your day has been stress free. I'm happy to talk to you about technology today, and computing to be precise. Computing is a very dynamic world: terms and ideas rise and fall like kings in the Bible, yet some of them rose like wildfire and still hold their position at the top. One of those terms is VIRTUALIZATION, and that is the topic of today's discussion.

[credit: Wikimedia. Creative Commons Attribution-Share Alike 2.0 Generic license. Author: Argonne National Laboratory's Flickr page]

In June 2016, the National Research Center of Parallel Computer Engineering and Technology in China unveiled what was then the world's fastest computer, the Sunway TaihuLight. At the time of writing it still holds that title, and it is nearly three times as fast as the previous record holder, the Tianhe-2. It boasts a benchmark performance of 93 petaflops! In plain English, one petaflop is a quadrillion (10^15) floating-point operations per second, so this machine can perform 93 quadrillion of them every second. Now the not so fun part: it cost a whopping 273 million dollars.
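To make the petaflop figure concrete, the arithmetic can be sketched in a few lines of Python. The variable names are mine, and the Tianhe-2 score of roughly 33.86 petaflops is an assumption taken from its published benchmark result, not a number stated in this post.

```python
# One petaflop is 10**15 (a quadrillion) floating-point operations per second.
PETA = 10**15

taihulight_pflops = 93      # figure quoted in this post
tianhe2_pflops = 33.86      # assumption: Tianhe-2's published benchmark score

ops_per_second = taihulight_pflops * PETA
speedup = taihulight_pflops / tianhe2_pflops

print(f"{ops_per_second:,} operations per second")
print(f"about {speedup:.1f}x the speed of Tianhe-2")
```

Under these assumptions the ratio comes out a little under three, which is where the "nearly three times" comparison comes from.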

I went to that extreme to give a glimpse of what computing resources look like today. We've got the resources, so how do we make the most of what is at our disposal? This is where virtualization comes into play, and it is also why its popularity is only just beginning. On a very small scale, my tiny PC, a Dell Latitude E7240, comes with two processor cores, each clocked above 2.4GHz, and without virtualization I would just have one powerful PC that sits mostly unused. So, what then is virtualization?

Many computer-literate people see virtualization as just the process of running multiple operating systems on one computer, but that is a narrow view. Today even reality has been virtualized (virtual reality); in fact, @sammintor made a post stating that virtual reality is now so advanced that it can be physically felt.

Wikipedia, in simple terms, defines virtualization as the abstraction of computer resources, and I completely agree. Virtualization breaks many barriers in computing: it can merge many physical computing resources into a single unit, and it can also split a single physical resource into many virtual resources that each function fully on their own.
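The two directions described above, merging and splitting, can be pictured with a small Python sketch. The `ResourcePool` class, its method names and the machine sizes are all hypothetical and only illustrate the idea; no real virtualization product works exactly this way.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """Hypothetical pool that hides several physical machines behind one unit."""
    physical_cores: list[int]                      # cores per physical machine
    allocations: dict[str, int] = field(default_factory=dict)

    @property
    def total_cores(self) -> int:
        # Merging: many physical resources appear as one capacity figure.
        return sum(self.physical_cores)

    @property
    def free_cores(self) -> int:
        return self.total_cores - sum(self.allocations.values())

    def carve(self, name: str, cores: int) -> None:
        # Splitting: hand out a slice of the pooled capacity as a virtual resource.
        if cores > self.free_cores:
            raise ValueError(f"only {self.free_cores} cores left")
        self.allocations[name] = cores


pool = ResourcePool(physical_cores=[4, 8, 4])   # three made-up machines
pool.carve("vm-web", 6)
pool.carve("vm-db", 8)
print(pool.total_cores, pool.free_cores)
```

Notice that "vm-db" gets 8 cores even though "vm-web" has already used part of the pool: the user of a virtual resource never needs to know which physical machine sits behind it.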

A Brief History

VMware, a leading company in the field, describes virtualization as an idea whose time has come. That is because the idea is a very old one, dating back to the 1960s, which makes it almost as old as the modern computer itself.


[IBM was very instrumental in the development of hypervisors. Credit: Wikimedia CC1.0 license. Author: Vinoo202]

IBM will always be remembered in the field of virtualization because it made the first move with its mainframe computers. IBM invested heavily in developing mainframes, which made them very costly, so it sought a way to share those resources among many users. This work included the experimental M44/44X system, whose success gave rise to commercial products such as CP-67.

The success of these commercial IBM systems with time-sharing capabilities contributed to the fall in the cost of computing through the 80s and 90s, since private firms and even individuals could use a computer without having to buy or own one. As time progressed, time sharing on high-powered mainframes declined and the industry moved to teaming up servers with smaller capabilities, which pushed the concept of computer virtualization into decline.

The game changed in the late 90s when VMware introduced VMware Workstation. VMware's popularity rose further with the introduction of its ESX server, which does not even need a host operating system to perform its virtualization functions.

The Hypervisor

I would like to explain the hypervisor first, before going on to the types of virtualization, because understanding it makes the major type of virtualization, server virtualization, much easier to grasp.

[Credit: Wikimedia CC3.0 license. Author: Golftheman]

A computer consists of the hardware, which is the set of resources we can see and touch, and the system software, which is divided into the operating system, system drivers and system utilities. The operating system sits directly on top of the hardware. It manages the resources available to the computer and runs the rest of the system software, that is, the utilities and the drivers. It is also the intermediary between the hardware and application programs.

IBM first came up with the term hypervisor. It referred to the software that managed memory sharing on its System/360 Model 65, which was shipped as an RPQ (a special-order feature). Hypervisors are software that operates independently of the guest operating systems and intercepts instructions sent from those operating systems to the computer hardware. Because hypervisors oversee the activities of guest operating systems and ensure that there are no resource conflicts among the running instances, they are also referred to as Virtual Machine Monitors or Managers (VMM).

The relationship between a computer and its operating system is normally one-to-one. With a hypervisor, each guest still sees this one-to-one relationship, so how is that possible? The operating systems policed by the hypervisor, called guest operating systems, are each allowed to use the underlying hardware (CPU, GPU, etc.) for a share of the time. Multi-threading, multi-core processing and many other enhancements in today's computers have made virtualization a success.

Types of Hypervisors

There are basically two types of virtual machine monitors: the hosted (type 2) hypervisor and the bare metal (type 1) hypervisor.

I mentioned how virtualization has helped me make the most of my little PC. I have Oracle VirtualBox installed on my computer, and with it I was able to run two x86 Linux distributions (Red Hat and Ubuntu). This is a hosted hypervisor, the type most people are used to.

Hosted hypervisors, fondly called type 2 hypervisors, require a host operating system to run. They have a number of advantages and disadvantages. The major disadvantage is that, compared to bare metal hypervisors, they are slower, because every guest operating system depends on the speed of the host computer and its operating system.

The major advantage of type 2 hypervisors is the ease with which they can be installed and uninstalled, which makes them the best option for test environments. Examples of type 2 hypervisors are Oracle's VirtualBox, which I have already mentioned, Microsoft's Virtual PC, VMware Workstation and many more.

Bare metal hypervisors are installed directly on the computer hardware and sit there like an operating system. Bare metal installations are mainly intended for servers, because servers are meant to operate at the maximum possible speed, and also for security reasons. Once a type 1 hypervisor is installed, no further operating system is required before guest operating systems can be installed, which removes the overhead of a host operating system.

Two very popular bare metal hypervisors are VMware ESX and Microsoft's Hyper-V.

Types of Virtualization

Virtualization has taken over many aspects of computing today, so this section cannot be exhaustive. Popular types of virtualization include:

Server Virtualization

Today's servers are so powerful that a single process rarely exhausts their resources. Server virtualization is the major driving force of cloud computing. The process of server virtualization hides the physical servers from their users. Here, a number of servers, together with their resources (operating speed, OS, storage capacity, etc.), are teamed up to form one functional virtual server that is more capable than any of the individual servers in the team.

The reverse can also be the case: a single high-performance server can be split into many virtual servers, allowing maximum utilization of its costly resources. It is also worth stating that the major driving force of server virtualization is the hypervisor (Virtual Machine Monitor), which intercepts hardware calls from the operating systems and handles these calls according to its algorithm, such as time division.
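Time division is easiest to picture as a round-robin queue. The sketch below is a toy model only: the function name, the guest names and the time units are made up, and real hypervisors use far more sophisticated schedulers.

```python
from collections import deque


def round_robin(guests: dict[str, int], time_slice: int) -> list[tuple[str, int]]:
    """Return the order in which guests get the CPU as (guest, units_run) pairs.

    guests maps a guest OS name to the CPU time units it still needs.
    """
    if time_slice <= 0:
        raise ValueError("time_slice must be positive")
    queue = deque(guests.items())
    schedule = []
    while queue:
        name, remaining = queue.popleft()
        run = min(time_slice, remaining)
        schedule.append((name, run))
        if remaining > run:
            # Not finished: go to the back of the line and wait for the next turn.
            queue.append((name, remaining - run))
    return schedule


schedule = round_robin({"redhat": 5, "ubuntu": 3}, time_slice=2)
print(schedule)
```

Each guest runs for at most one slice before the next guest gets its turn, so every guest appears to have the hardware to itself even though it is being shared.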

You'll agree with me that one of the major issues facing computing today is the power needed to run the physical infrastructure and to cool it. Virtualizing servers addresses this issue to a great extent, because fewer physical machines are needed. A crash in one virtual server is also easier to contain and recover from than a crash of a physical server, and backing up information has never been easier.

A very wise question for a management board to ask while considering server virtualization is whether it is actually good for the organization. Server virtualization faces some challenges, which include budget, education of personnel, security, and the possibility of migration. Adopting a virtualized server environment is budget intensive. It includes the cost of obtaining licenses and of purchasing capable servers, since the servers already in use might not be able to run virtualization suites properly, or might need to be teamed up into one.

[Virtualized servers hide the actual number of servers that users access through clouds and other means. Credit: Wikimedia. Creative Commons Attribution-Share Alike 3.0 Unported license. Author: JorchHIT]

Server virtualization can also change the modus operandi of an organization's IT department to a great extent, and this requires re-educating the staff, which might not always be feasible. Another concern is data security and the level of control that virtualization offers. Virtualization can make IT managers feel they are no longer in charge of certain processes, and that the hypervisor could amplify computing issues. To some extent this could be true, since the hypervisor carries a level of administrative privilege that could be abused.

If a firm can address the issues listed above, then server virtualization is worth a try.

Network Virtualization

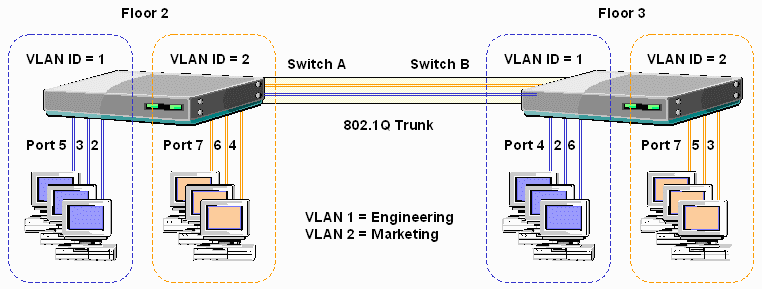

Wikipedia defines network virtualization as the process of combining both hardware and software network resources into a single abstract entity called a virtual network. Just as virtualization in general was defined as the abstraction of physical resources, network virtualization teams up many physical resources into a single software-based administrative unit. These resources include routers, switches, network interface cards, firewalls, network connectivity such as cables, virtual local area networks (VLANs), etc.

Large networks can also be broken down into smaller virtual networks, a process known as external virtualization. The major driving forces behind this breakdown are the Virtual Local Area Network (VLAN) and the Virtual Private Network (VPN). Network hosts (such as computers and phones) do not normally perform layer 3 routing, so on their own they can only communicate within their local area network.

[Though marketing and Engineering are connected physically, their hosts are split virtually. Credit: Wikimedia. Creative Commons Attribution-Share Alike 4.0 International license. Author: Oyuhain]

A VLAN creates virtual networks by defining what are called broadcast domains. Devices within the same broadcast domain are said to be in the same network and can communicate freely without a network gateway. By creating software-based broadcast domains, new networks are created within an already existing network.
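The broadcast domain rule can be expressed as a tiny lookup. This is only a sketch of the idea: the host names and VLAN IDs below are made up, and a real switch applies this check to tagged frames on its ports rather than to names in a dictionary.

```python
# Hypothetical host-to-VLAN assignment on a single physical switch.
vlan_of = {
    "marketing-pc1": 10,
    "marketing-pc2": 10,
    "engineering-pc1": 20,
}


def same_broadcast_domain(host_a: str, host_b: str) -> bool:
    """Hosts can talk directly only when they share a VLAN ID."""
    return vlan_of[host_a] == vlan_of[host_b]


def needs_gateway(host_a: str, host_b: str) -> bool:
    # Traffic between different VLANs must be routed at layer 3.
    return not same_broadcast_domain(host_a, host_b)


print(same_broadcast_domain("marketing-pc1", "marketing-pc2"))
print(needs_gateway("marketing-pc1", "engineering-pc1"))
```

Both hosts in VLAN 10 reach each other directly, while anything going from VLAN 10 to VLAN 20 has to pass through a gateway, even though all three machines hang off the same physical switch.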

Just as hypervisors help team up many servers into one abstract functional server, network virtualization employs comparable software components in the form of virtual network interface cards and virtual private networks. Virtual private networks make it possible for systems that are not in the same location to appear as if they were, while securing that connectivity over public networks.

Storage Virtualization

The transformation going on in the IT industry is very rapid. As computing speed increases, so does the connectivity between computers and computer storage. Storage size is as important as storage speed and ease of access. Computer storage has evolved from the pre-diskette period to diskettes, compact discs, hard drives and solid state drives, and now experimental 5D optical storage. There's no gainsaying that we are in the information age, but where is all this information stored?

It's of paramount importance that technology be applied to ease storage access and manageability. Well, virtualization is here to help. Many technologies, including Fibre Channel, server clusters, data snapshots and high availability, to mention but a few, have helped transform our data storage systems into what they are today. One thing stands out in this succession of technologies: each new technology usually disrupts its predecessor, or is so hyped that it confuses the public.

Storage virtualization took a different development path, with no standards body like the IEEE setting standards or limits of use. As a result, it is not easy to state categorically what this emerging technology is or everything it is capable of doing.

Different flavors of storage virtualization exist, depending on the vendor. Some vendors implement storage virtualization in Storage Area Network appliances, while others host it on a storage controller. Some vendors implement virtual storage as two separate entities, the storage control and the actual data, while others implement the complete system as a single unit.
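Whatever the vendor flavor, a common underlying idea is a mapping from the addresses a user sees to locations on real devices. The sketch below shows one very simple form, plain concatenation of disks into a single virtual volume. The disk names and sizes are hypothetical, and this is not a description of any particular vendor's design.

```python
from bisect import bisect_right

# Hypothetical physical disks as (name, size_in_blocks), concatenated in order.
DISKS = [("disk-a", 100), ("disk-b", 50), ("disk-c", 200)]

# Starting logical block of each disk in the virtual volume: [0, 100, 150].
STARTS = []
offset = 0
for _, size in DISKS:
    STARTS.append(offset)
    offset += size
TOTAL_BLOCKS = offset


def locate(logical_block: int) -> tuple[str, int]:
    """Translate a logical block number into (disk name, block on that disk)."""
    if not 0 <= logical_block < TOTAL_BLOCKS:
        raise IndexError("block outside the virtual volume")
    # Find the last disk whose starting block is <= the requested block.
    index = bisect_right(STARTS, logical_block) - 1
    name, _ = DISKS[index]
    return name, logical_block - STARTS[index]


print(TOTAL_BLOCKS)
print(locate(0), locate(120), locate(349))
```

The user sees one 350-block volume and never has to know that block 120 actually lives on the second disk.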

Conclusion

There's a popular saying in my society that power without use is useless. IT infrastructure and resources are growing in capacity and quality with each passing day, which makes it impossible for a single operation to utilize such systems completely.

Cloud computing is also a buzzword in the IT industry, and at the heart of it is virtualization. To keep up with today's computing, I urge us all to get to know more about virtualization.

I am putting together an interesting post on Big Data technology, and I will be able to speak with authority there if the concept of virtualization is already well understood.



Hi @henrychidiebere! The article is amazing with quite some interesting information like 'Also server virtualization could to a great extent change the modus operandi of IT departments in an organization', and some more!

This article is quite educative and thanks for bringing up this post!! :)

Cheers!! :)

@star-vc I'm happy you read with interest. Thanks

Thanks for an interesting post.

If you use Linux and if you are interested to run lots of other Linux distributions simultaneously, have a look at Linux containers.

It's much more efficient than using a hypervisor and much less demanding on hardware.

Check lxd as a starting point.