I've been looking to replace my NAS for a while. I considered the commercial options, primarily Synology and QNAP, and decided to build something myself instead.

The primary reasons to build it myself are faster CPUs, more drive bays, the ability to repair it with off-the-shelf components if something breaks, and the ability to use ZFS.

ZFS

If you are not familiar with ZFS, it is a file system that originated on Solaris and has since been ported to Linux and FreeBSD. The most popular ZFS-based solution is FreeNAS, an enterprise-class product with commercial-level support. ZFS offers:

- Copy-on-write support

- Snapshots

- Replication

- Efficient Compression

- Caching

- Bit Rot Protection

While ZFS is an amazing file system, it does have one large flaw that can be problematic for most users. If you want to increase your storage, you cannot add drives to an existing array and must create a new one. If you have a RAID 5 array with 4 drives and you want to add a 5th, you will need to create a new RAID 5 array with at least 3 drives. You need to plan ahead how much storage you will need before you next replace the hardware, or leave enough room to grow with additional disks.

Because I am not downloading porn and movies like most large NAS home users, my storage needs are a bit more predictable.

NAS Software

There are two major players in the do-it-yourself NAS space:

- FreeNAS

- Unraid

There are a few other options, but those are the two most popular.

I really didn't like either option. FreeNAS is the one I am most interested in, as it is enterprise-class software and uses ZFS. It has a lot of features, but most of them are poorly implemented. The storage functionality is good, but the virtual machine support and other functionality are very weak.

Unraid is a very interesting option. It has modern virtual machine support and a fantastic UI, but it is slow as shit unless you use SSD caching and schedule writes to the array during downtime. It is extremely flexible in terms of adding storage down the road one disk at a time thanks to its dedicated parity drives, but it suffers greatly when it comes to write performance.

I decided to choose the path less traveled and will be using Ubuntu and ZFS directly. I will configure and set up the VM and sharing software myself. This is a lot more work initially but should give me excellent storage performance, high reliability, and great performance running virtual machines.

The Build

I am not 100% decided on all the hardware, but this is what I think I am going to order on Monday.

Case

Rosewill 4U 15 Bay Case

Case Upgrades

The fans in the Rosewill suck. From what I understand they always run at 100%, so I will buy fans to replace them. The case uses 2 80mm fans and 7 120mm fans. I will be removing the front two fans and reversing the fan tray with 3 120mm replacement fans. This should greatly increase airflow and cooling and allow for variable speed (lower noise).

Motherboard

I am going to go with a refurbished Gigabyte GA-7PESH2. This is an older but very high-end server motherboard with the following key features.

- Dual 10Gbit

- 2 Mini SAS 6Gbps ports (supports 8 drives)

- Dual Xeon

- 16 Memory Slots

- IPMI

This board supports any of the Xeon E5 (26xx) processors.

CPU

I plan on using dual Xeon 2690 v1 processors. These processors have a PassMark score of 13660 with single-threaded performance at almost 1800. They will turbo up to 3.8GHz, and although they are old, performance is really good for the money.

The v2 processor adds two additional cores but is a tiny bit slower and much more expensive.

CPU Cooling

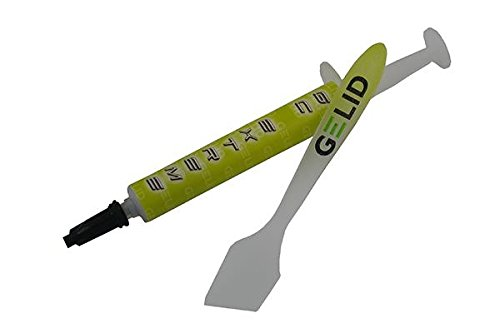

I am going with Arctic Freezer 33 CO coolers. They can handle CPUs up to 150W TDP, which is more than enough for the 135W TDP Xeon 2690s. Of course, I will need two and will also need some thermal paste.

I am going to use Gelid Solutions GC-Extreme Thermal Compound as it is the best rated available.

RAM

I am going to use ECC DDR3 RAM and plan on either 64GB or 128GB. I still haven't decided, as it depends on how many virtual machines I plan on running.

One thing about ZFS is that it is very RAM hungry, and I expect to dedicate about 16GB to the operating system and ZFS.
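If ZFS's in-memory cache (the ARC) ever needs to be capped to leave room for virtual machines, ZFS on Linux exposes a `zfs_arc_max` module parameter, given in bytes. The following is a minimal sketch that builds the corresponding modprobe line. The 16 GiB cap simply reuses the figure above as an illustrative limit, and the helper name `arc_max_option` is made up for this example.

```python
GIB = 1024 ** 3  # bytes per gibibyte


def arc_max_option(limit_gib: int) -> str:
    """Return a modprobe options line capping the ZFS ARC at limit_gib GiB."""
    if limit_gib <= 0:
        raise ValueError("limit_gib must be positive")
    return f"options zfs zfs_arc_max={limit_gib * GIB}"


# Assumed cap of 16 GiB; the resulting line would typically go in
# /etc/modprobe.d/zfs.conf and take effect after the module is reloaded.
print(arc_max_option(16))
```

Whether a cap is needed at all depends on how much memory the virtual machines end up using, so treat the number as a starting point rather than a recommendation.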

Drives

I am not 100% sure what I will use for drives, right now I am leaning towards Hitachi Ultrastar 4TB 7200 RPM SAS drives or new WD Red 10TB drives.

The Hitachis are dirt cheap as they are pulls from enterprise systems. They are enterprise drives and spin at 7200 RPM but only have a 64MB buffer. I would be able to get 15 of these drives for $750-$900. Enterprise-class drives tend to last a lot longer, and although these already have a lot of hours on them, drives fail along a bathtub curve: they typically fail either in the first few days or weeks, or well after their rated service life. With drives this cheap, it is easy to replace any failures should they happen, even though this should be rare.

The other option is new shucked Western Digital Red 10TB drives. These are NAS-rated drives but are not enterprise class. They have a much larger buffer (128MB to 256MB) and a much better warranty. The cost is significantly higher, with a single drive running $200-290. A full rack of 15 of them would run $3,000 - $4,350 USD.

I'm still deciding what I want to do, but if I went with the Reds I wouldn't fill the enclosure and would likely only get 8 drives. I don't need a massive amount of storage, and a higher spindle count is better for performance, which is why I am leaning towards the 4TB HGST drives.
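To put the two drive options on the same footing, here is a rough cost-per-raw-terabyte comparison using only the prices quoted above. It is a back-of-the-envelope sketch: it ignores parity overhead, warranty value, and power usage, and the function name is just for illustration.

```python
def cost_per_tb(total_cost: float, drive_count: int, drive_tb: float) -> float:
    """Dollars per raw terabyte for a batch of identical drives."""
    return total_cost / (drive_count * drive_tb)


# Figures from the text: 15 used 4TB HGST drives for $750-$900 total,
# or new 10TB WD Reds at $200-$290 each.
hgst_low, hgst_high = cost_per_tb(750, 15, 4), cost_per_tb(900, 15, 4)
red_low, red_high = cost_per_tb(200, 1, 10), cost_per_tb(290, 1, 10)

print(f"HGST 4TB:    ${hgst_low:.2f}-${hgst_high:.2f} per raw TB")
print(f"WD Red 10TB: ${red_low:.2f}-${red_high:.2f} per raw TB")
```

On these numbers the used HGST drives come out at roughly $12.50-$15 per raw TB against $20-$29 for the Reds.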

I also have to decide if I want to go RAID 6 or 7. In ZFS land this is raidz2 or raidz3, meaning two or three parity drives. I am leaning towards raidz2 with two drives for parity. If I have alerts set up to notify me when a drive is going to fail or has failed, I can swap in a replacement right away and reduce the window for a second drive failure. If a second drive does fail, I will still be in business. But 15 drives is a lot for a single array, which increases the chances of multiple drive failures.
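As one possible way to get those alerts, a small script can poll `zpool status -x`, which prints `all pools are healthy` when nothing is wrong. This is only a sketch under assumptions: the function names are invented for the example, the alert is a plain `print` standing in for whatever notification you prefer, and it would need to be scheduled (cron, systemd timer) to be useful.

```python
import subprocess

HEALTHY_MESSAGE = "all pools are healthy"


def pools_are_healthy(status_output: str) -> bool:
    """True if the text printed by `zpool status -x` reports no problems."""
    return status_output.strip() == HEALTHY_MESSAGE


def check_pools() -> bool:
    """Run `zpool status -x` and report whether every pool is healthy."""
    result = subprocess.run(
        ["zpool", "status", "-x"],
        capture_output=True, text=True, check=True,
    )
    return pools_are_healthy(result.stdout)


if __name__ == "__main__":
    try:
        if not check_pools():
            # Hypothetical alert hook: replace with email, push notification, etc.
            print("ALERT: a ZFS pool needs attention")
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"could not query zpool: {exc}")
```

ZFS on Linux also ships the ZFS Event Daemon (ZED), which can send notifications on pool events, so a hand-rolled poller like this is an option rather than a necessity.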

If I go with two drives parity, I will get 52 TB of storage from the HGST enterprise drives and 60 TB of storage from the WD Reds with only 8 disks.

If I go with three drives parity, I will get 48 TB of storage with the HGST and I wouldn't even consider raidz3 with only 8 disks, so I'd still have 60 TB with the WD Reds.
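The capacity figures above follow from a simple rule: usable space is the number of data drives (total minus parity) times the drive size. A short sketch of that arithmetic, with the caveat that it ignores ZFS metadata, padding, and the TB-versus-TiB difference, so real usable space will be somewhat lower:

```python
def raidz_usable_tb(drive_count: int, drive_tb: float, parity: int) -> float:
    """Nominal usable capacity of a single raidz vdev, in TB."""
    if parity not in (1, 2, 3):
        raise ValueError("raidz supports 1, 2, or 3 parity drives")
    if drive_count <= parity:
        raise ValueError("need more drives than parity drives")
    return (drive_count - parity) * drive_tb


# The two configurations discussed in the text.
for label, count, size in (("HGST 4TB x15", 15, 4), ("WD Red 10TB x8", 8, 10)):
    for parity in (2, 3):
        usable = raidz_usable_tb(count, size, parity)
        print(f"{label} raidz{parity}: {usable} TB")
```

For 15 4TB drives this gives 52 TB with raidz2 and 48 TB with raidz3, and 60 TB for 8 10TB drives with raidz2, matching the numbers above.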

Raidz3 would significantly slow down the array and is mostly recommended if you can't respond to disk failures immediately. With raidz2, that means having a spare 4TB HGST on hand would be smart and relatively cheap ($50-60).

Operating System and Boot Drive

I am going to use a pair of 256GB SATA SSD drives for the operating system in a RAID 1 configuration. I want the operating system on dedicated drives, although it is possible to have it on a ZFS pool.

I could very easily use a flash drive for the operating system, but I want to have redundancy and a bit more performance. I also may have more than just ZFS running on the core operating system.

A 256GB SATA SSD drive can be bought new for around $30-50, so a pair of them dedicated to booting is not a big expense, but still a lot more than a $5 flash drive.

SAS Expander

The motherboard supports up to eight 6Gbps SAS/SATA drives via the two Mini SAS ports. You can feed these into a SAS expander and use up to 24 drives. The case only holds 15, but an expander is still required to go over 8 drives.

Power Supply

I have a couple of these brand new from when I was building mining rigs. They have two CPU cables, so I won't need to worry about a splitter. Plus they have just been sitting on a shelf in the closet doing nothing.

Cables

I am going to need Mini SAS to SAS cables. Each of the two Mini SAS ports on the motherboard can break out to 4 SAS/SATA drives.

I will also need additional SATA power connectors, as the power supply doesn't have enough to feed 17 drives (15 in the array plus the two boot SSDs). I will pick up five of these, but I am not sure how many I will need.

Misc

I will need a 10Gbit network card for any machine I want to get full performance from the NAS. I am going to go with the Intel X540 dual 10Gbit card, which uses the same controller as the motherboard.

I recently ran Cat 6e plus throughout the house, which supports 10Gbit. I haven't tested the runs at 10Gbit as I haven't had a need. I will likely only need a direct line from my NAS to my main workstation, as I don't see a real need to feed all my devices with 10Gbit. This saves me having to buy a 10Gbit switch, which will still be a bit out of the mainstream for a few more years. I currently use a 24 port 1Gbit switch with 12 PoE ports.

I will run a patch panel between the NAS and my machine on a dedicated 10Gbit port until I have the need for a 10Gbit switch. The Intel X540 supports automatic MDI/MDI-X configuration, so I won't need a crossover cable.

I am heavily leaning towards 16 4TB HGST drives: 15 running raidz2 for a total of 52 TB of storage, plus one cold spare. I'm guessing I'll be around 800MB/s read/write with 16 Xeon cores for around $2,000 USD.

I will put together a post when I start building and have some benchmarks, provided everything is successful.
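To see why the 10Gbit link matters for that estimate, it helps to convert link speeds to MB/s and compare them with the guessed 800MB/s array throughput. This is a rough sketch using decimal units and ignoring protocol overhead, so real-world ceilings will be a little lower.

```python
def gbit_to_mb_per_s(gbit: float) -> float:
    """Convert a link speed in Gbit/s to MB/s (decimal units, no overhead)."""
    return gbit * 1000 / 8


array_mb_s = 800  # estimated array throughput from the text

for link in (1, 10):
    ceiling = gbit_to_mb_per_s(link)
    bottleneck = "network" if ceiling < array_mb_s else "array"
    print(f"{link} Gbit link: {ceiling:.0f} MB/s ceiling, bottleneck: {bottleneck}")
```

A 1Gbit link tops out at 125 MB/s, far below the array estimate, while 10Gbit allows up to 1250 MB/s and leaves the array as the limiting factor.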

All images not sourced are CC0 or from Amazon

ǝɹǝɥ sɐʍ ɹoʇɐɹnƆ pɐW ǝɥ┴

around how much will this all cost?

little over $2,000.

if it was a bit more I would have tried to help you out. I work at one of the world's largest global IT resellers

It’s pretty cheap because I am using refurbished and very carefully selected parts. If I went WD Reds I would be over $4,000 just in hard drives.

I have a lot of hardware contacts as I started my first computer company in high school.

yeah i read that in your post.

Just started big in the IT world and it's really like drinking out of a fire hose but it seems to be going good

I need a NAS. I have disorganized data on 8 hard drives and lack the space to make a safe copy of important data, but I can't afford one and hope not to have to regret the delay

Sweet build! Will you be able to hot swap a drive in the event of a failure?

This chassis doesn’t support hot swap. But you can do it live if careful.

Famous last words :)

I also run 10gbit, but even directly patched in, you'll want to account for signal anomalies. I used to run 10Gb directly to my NAS and found it problematic. Maybe it was because I also had a line running to my switch to connect it to my proxy for updates.

I found I wasn't getting the speeds I was expecting. I use my NAS for streaming video editing. I do automated video effects and post-processing on 120fps videos. I ended up running 2 ethernet adapters and putting the NAS on a VLAN by itself to ensure that I was getting the best speeds and my traffic was unimpeded. I also put up a proxy accessible from the vlan to access PPA for updates.

Congratulations!

Good choice on the raidZ2/Z3. With standard raidZ, you're pretty much asking to lose an array. You can easily have a second drive failure just recovering your array. If you don't have a drive set up to automatically recover the array, you're just asking for failure. You have to at the very least keep a spare or 3 on hand. If someone didn't have enough disks for RaidZ2/Z3, I'd suggest some form of mirroring, or constant backups. In any case, I'd still suggest keeping a drive or two in the box set up as a spare though. Just less annoying, and less likely to lose an array. Also still need backups, because shit happens.

The drive choice is a tossup. The reduced number of the large drives should give you a lower chance of failure and increased options for expansion...but the used drives are probably less likely to fail right away, due to the "bathtub" curve. New drives tend to fail early. I'd probably go with the used drives, due to the lower costs. But that also means slightly more power usage.

If it's not broke, don't fix it:

The original NAS is still alive and kickin'.

So what are you using all this storage for?


He's mining burstcoin or storj. Maybe even a little sia.


machine learning data sets, pictures (got almost 200GB of pictures), data collected over the years, virtual machines. I don't even need half that storage, but since it isn't easy to upgrade ZFS, having more than enough is fine, especially since the drives are dirt cheap. Might as well fill the box as I want the speed from the spindles.

looks like a solid project, I'm using a single 8 TB NAS HDD at home, it's more than enough for my home project and the family ;) It's connected as an external drive on my router at home so everyone is able to use it when needed ;) love it ;) my wife at first didn't understand why I made it but now she loves it :D

nice bit of geeky kit.


A bit of unnecessary comment about what others are downloading... lol

Anyway, I am interested to know how all that is so cheap. I also have 2 Rosewill and love them, but they were not cheap.

Then, in terms of NAS software I was really happy with Xpenology (a hack of Synology)... until my board died... so basically I have been waiting for some time to explore the new 6.x of Xpenology, but honestly haven't had the patience yet.


I like Synology OS a lot, but their devices are slow as shit. I hear Xpenology is flaky to run, and I'd much rather have ZFS and pure Linux to work off of.

The motherboard, cpu, and drives are used / refurbished.

Ahh... ok then it makes more sense. =) I was thinking you found the perfect hardware provider.

I also love ZFS but to be honest, it's not the future for me. And you probably know that with these things you want to balance the number of features against how easy something is to play with. I agree that Synology hardware is usually rubbish for what I demand... but the "easy" way of playing with their customized BusyBox is just very intuitive. That was the reason I used Xpenology 5.x until now... plus some SSDs for caching, which really did solve 99% of my own problems.

But you are clearly looking for something heavier, and in that sense I agree that even Xpenology (where you can build your own hardware, up to some extent) might not be for you. Usually one sign of how "far" you want to go is how much "competition" there is for the products you are using. If none, then you are going very far. And therefore, tradeoffs will happen. Usually complexity, for those who are used to it, is a great plus because you gain access to a lot of features and some enterprise-level stuff too. Otherwise, if you are not comfortable playing with big bugs... then, I would say, avoid the challenge. Especially if the whole reason you are setting up a NAS in the first place is to make your life easier! =)

Please do post about your latest findings on this. I would be VERY interested. Even mention me (as I know that way I will have a look). 🤣

Cheers

Very cool write up. Do you plan to use any CM (configuration management) approach to set this NAS up, like Puppet? You know, to save the headache when things fail and to automate configuration.

Ansible

Hey Marky just dropping you a little steem nurse love 💕


Hi @themarkymark!

Your post was upvoted by @steem-ua, new Steem dApp, using UserAuthority for algorithmic post curation!

Your UA account score is currently 8.340 which ranks you at #13 across all Steem accounts.

Your rank has not changed in the last three days.

In our last Algorithmic Curation Round, consisting of 209 contributions, your post is ranked at #1. Congratulations!


it might be because of a steem api glitch. sometimes people's reputation goes up for no reason, and then it goes back down



I know this is an old post but I am looking for a bit of help...

I wanna join the STORJ project starting from zero knowledge... so the question is going to be about hard drives...

If you know the project or you are just curious this is the link

Any help is highly appreciated, thanks in advance.

NAS is a storage system on your LAN but can have Internet access.