Repository

https://github.com/Juless89/steem-dashboard

Website

http://www.steemchain.eu (currently syncing with the blockchain)

What is SteemChain?

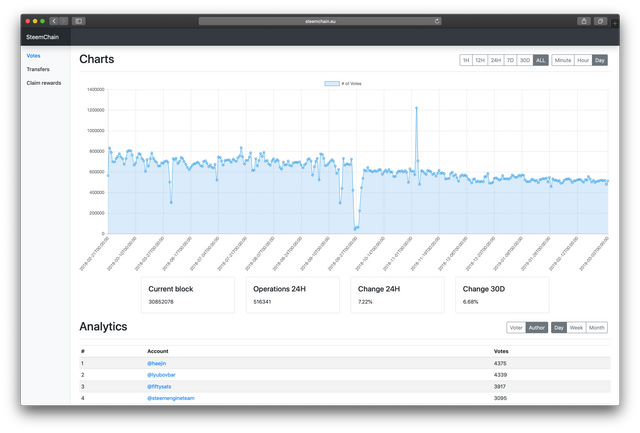

SteemChain is an open-source application that analyses transactions and operations from the STEEM blockchain, stores them in a MySQL database and visualises them with charts and tables on the web. The goal is to bring data analytics to STEEM, as websites like blockchain.com do for Bitcoin.

New Features

Expanded to all STEEM operation types

Commit: 3cc40bd48df755a259f37b0e8fc8996b0c53c4eb

For this update, all operation types that the STEEM blockchain has to offer have been added. This includes processing and storing each operation and keeping track of statistics for charting. The number of operation types tracked has increased from 3 to 31, divided into the following categories:

```
# all operation types

# voting
vote_operation

# orderbook
limit_order_create_operation
limit_order_cancel_operation

# comments
comment_operation
comment_options_operation
delete_comment_operation

# witness
feed_publish_operation
witness_update_operation

# custom
custom_operation
custom_json_operation

# wallet
convert_operation
delegate_vesting_shares_operation
transfer_operation
transfer_from_savings_operation
transfer_to_savings_operation
transfer_to_vesting_operation
cancel_transfer_from_savings_operation
set_withdraw_vesting_route_operation
withdraw_vesting_operation
claim_reward_balance_operation

# account
account_update_operation
account_create_operation
account_create_with_delegation_operation
account_witness_proxy_operation
account_witness_vote_operation
recover_account_operation
request_account_recovery_operation
change_recovery_account_operation

# escrow
escrow_release_operation
escrow_transfer_operation
escrow_approve_operation
```
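The categories above can also be kept in code as a single lookup table, for example to group the operation types on the front-end. A minimal sketch, assuming operations arrive as `{"type": ..., "value": {...}}` dicts (inferred from the `operation['value'][...]` access in the example below); `OPERATION_CATEGORIES`, `CATEGORY_OF` and `category_for` are illustrative names, not part of the repository:

```python
from typing import Dict, List

# Categories and operation types as listed above (31 types in total).
OPERATION_CATEGORIES: Dict[str, List[str]] = {
    "voting": ["vote_operation"],
    "orderbook": ["limit_order_create_operation", "limit_order_cancel_operation"],
    "comments": ["comment_operation", "comment_options_operation", "delete_comment_operation"],
    "witness": ["feed_publish_operation", "witness_update_operation"],
    "custom": ["custom_operation", "custom_json_operation"],
    "wallet": [
        "convert_operation",
        "delegate_vesting_shares_operation",
        "transfer_operation",
        "transfer_from_savings_operation",
        "transfer_to_savings_operation",
        "transfer_to_vesting_operation",
        "cancel_transfer_from_savings_operation",
        "set_withdraw_vesting_route_operation",
        "withdraw_vesting_operation",
        "claim_reward_balance_operation",
    ],
    "account": [
        "account_update_operation",
        "account_create_operation",
        "account_create_with_delegation_operation",
        "account_witness_proxy_operation",
        "account_witness_vote_operation",
        "recover_account_operation",
        "request_account_recovery_operation",
        "change_recovery_account_operation",
    ],
    "escrow": ["escrow_release_operation", "escrow_transfer_operation", "escrow_approve_operation"],
}

# Reverse lookup: operation type -> category.
CATEGORY_OF: Dict[str, str] = {
    op_type: category
    for category, op_types in OPERATION_CATEGORIES.items()
    for op_type in op_types
}


def category_for(op: dict) -> str:
    """Return the category of an operation dict; unknown types map to "untracked" (hypothetical fallback)."""
    return CATEGORY_OF.get(op["type"], "untracked")
```

Keeping one table as the source of truth means adding a 32nd type is a one-line change rather than edits in several places.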

All operation types inherit from `Operation`. For each type, different data is extracted for analytics. In addition, several variables per operation type are tracked over time for charting: at minimum the count, plus currency volumes and other type-specific variables. All classes are stored in operations.py, removing the need for a separate file per operation.

```python
import operation  # local module that defines the Operation base class


class Transfer_from_savings_operation(operation.Operation):
    def __init__(self, table, storage, lock, scraping=False):
        operation.Operation.__init__(self, table, storage, lock, scraping)

    def process_operation(self, operation):
        # deconstruct operation and prepare for storing
        value_from = operation['value']['from']
        value_to = operation['value']['to']
        # raw integer string in the asset's smallest unit (see 'precision')
        amount = operation['value']['amount']['amount']
        nai = operation['value']['amount']['nai']

        self.db.add_operation(
            value_from=value_from,
            value_to=value_to,
            amount=amount,
            nai=nai,
            timestamp=self.timestamp,
        )

        # Allow for multiple resolutions
        hour = self.timestamp.hour
        minute = self.timestamp.minute

        # Chart data
        steem = 0
        sbd = 0

        if nai == "@@000000021":    # STEEM
            steem += float(amount)
        elif nai == "@@000000013":  # SBD
            sbd += float(amount)

        data = {
            "count": 1,
            "steem": steem,
            "sbd": sbd,
        }

        self.counter.set_resolutions(hour, minute, **data)
```
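The code above passes the raw `amount` string straight to `float()`. In the NAI asset format that string is an integer count of the asset's smallest unit, and a separate `precision` field (which the `transfers` model below also stores) gives the number of decimal places: 3 for STEEM and SBD, so `"1000"` means 1.000 STEEM. If the charts are meant to show whole STEEM/SBD, the precision has to be applied somewhere along the way. A minimal sketch of that conversion, using `Decimal` to avoid float rounding; the `parse_nai_asset` helper and the `NAI_SYMBOLS` table are illustrative, not part of SteemChain:

```python
from decimal import Decimal
from typing import Tuple

# NAI identifiers -> symbols. STEEM and SBD appear in the code above;
# VESTS is included for completeness.
NAI_SYMBOLS = {
    "@@000000021": "STEEM",
    "@@000000013": "SBD",
    "@@000000037": "VESTS",
}


def parse_nai_asset(asset: dict) -> Tuple[Decimal, str]:
    """Convert {"amount": "1000", "precision": 3, "nai": "@@000000021"} into (Decimal("1.000"), "STEEM")."""
    raw = int(asset["amount"])           # integer amount in the smallest unit
    precision = int(asset["precision"])  # number of decimal places
    value = Decimal(raw).scaleb(-precision)  # shift the decimal point left by `precision`
    symbol = NAI_SYMBOLS.get(asset["nai"], asset["nai"])  # fall back to the raw NAI
    return value, symbol
```

With this, `steem += float(value)` (or keeping the `Decimal`) would put the chart columns in whole units instead of thousandths.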

Django was used to set up all database models for easy integration with the front-end. Each operation type has four models: the operation itself, count/minute, count/hour and count/day. In all cases the timestamps are indexed for more efficient time-based analytics.

```python
from django.db import models

# =======================================================
#
#                        wallet
#
# =======================================================


class transfers_count_minute(models.Model):
    count = models.IntegerField()
    steem = models.TextField()
    sbd = models.TextField()
    timestamp = models.DateTimeField(db_index=True)


class transfers_count_hour(models.Model):
    count = models.IntegerField()
    steem = models.TextField()
    sbd = models.TextField()
    timestamp = models.DateTimeField(db_index=True)


class transfers_count_day(models.Model):
    count = models.IntegerField()
    steem = models.TextField()
    sbd = models.TextField()
    timestamp = models.DateTimeField(db_index=True)


class transfers(models.Model):
    sender = models.CharField(max_length=25)
    receiver = models.CharField(max_length=25)
    amount = models.TextField()
    precision = models.TextField()
    nai = models.TextField()
    timestamp = models.DateTimeField(db_index=True)
```
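How `self.counter.set_resolutions` fills the count/minute, count/hour and count/day tables is not shown in the post. One way to picture it is to truncate each operation's timestamp to the start of its minute, hour and day, and add the chart variables to the matching bucket. The `ResolutionCounter` class below is a hypothetical, in-memory sketch of that idea; a database-backed version would update or insert one row per bucket instead.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Tuple

RESOLUTIONS = ("minute", "hour", "day")


def bucket_start(ts: datetime, resolution: str) -> datetime:
    """Truncate a timestamp to the start of its minute, hour or day bucket."""
    if resolution == "minute":
        return ts.replace(second=0, microsecond=0)
    if resolution == "hour":
        return ts.replace(minute=0, second=0, microsecond=0)
    if resolution == "day":
        return ts.replace(hour=0, minute=0, second=0, microsecond=0)
    raise ValueError(f"unknown resolution: {resolution}")


class ResolutionCounter:
    """Hypothetical aggregator mirroring the count/minute, count/hour and count/day tables."""

    def __init__(self) -> None:
        # (resolution, bucket start) -> {"count": ..., "steem": ..., "sbd": ...}
        self.buckets: Dict[Tuple[str, datetime], Dict[str, float]] = defaultdict(
            lambda: defaultdict(float)
        )

    def add(self, ts: datetime, **data: float) -> None:
        # Every operation contributes to one bucket per resolution.
        for resolution in RESOLUTIONS:
            bucket = self.buckets[(resolution, bucket_start(ts, resolution))]
            for key, value in data.items():
                bucket[key] += value
```

For example, `counter.add(ts, count=1, steem=steem, sbd=sbd)` would play the role of the `set_resolutions` call in the operation class.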

Next update

The next update will focus on the front-end: creating a dynamic view that extracts the correct chart data from the database while staying simple despite the 31 different operation types, and adding buttons for all operation types.

Thank you for your contribution. I have tried it in the Chrome browser and it shows some errors: the graph shows but the table doesn't.

I read your answers to @amosbastian, which also answer my questions. I can also see you have put all the classes in one file, operations.py, which may not be ideal; you might want to store the subclasses separately. You might also want to unit test the operations against historic block data. Maybe you can disregard blocks that are more than two years old to reduce the burden on your server.
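As a rough sketch of the unit-testing suggestion, assuming the chart-data part of `process_operation` were pulled out into a pure helper, a test can feed it an operation dict of the kind found in historic blocks. The `chart_data` function below is hypothetical and simply mirrors the `steem`/`sbd` logic of the `Transfer_from_savings_operation` example; the fixture values are made up, and in practice they would be copied from a real block.

```python
import unittest

STEEM_NAI = "@@000000021"
SBD_NAI = "@@000000013"


def chart_data(op: dict) -> dict:
    """Hypothetical pure helper: the chart-data part of process_operation, without the database call."""
    amount = op["value"]["amount"]
    steem = sbd = 0.0
    if amount["nai"] == STEEM_NAI:
        steem += float(amount["amount"])
    elif amount["nai"] == SBD_NAI:
        sbd += float(amount["amount"])
    return {"count": 1, "steem": steem, "sbd": sbd}


class ChartDataTest(unittest.TestCase):
    # Fixture shaped like an operation from a historic block; values are made up.
    FIXTURE = {
        "type": "transfer_from_savings_operation",
        "value": {
            "from": "alice",
            "to": "bob",
            "request_id": 1,
            "amount": {"amount": "1500", "precision": 3, "nai": SBD_NAI},
            "memo": "",
        },
    }

    def test_sbd_amount_goes_to_sbd_column(self):
        self.assertEqual(chart_data(self.FIXTURE), {"count": 1, "steem": 0.0, "sbd": 1500.0})

    def test_steem_amount_goes_to_steem_column(self):
        op = {"type": "transfer_operation",
              "value": {"amount": {"amount": "250", "precision": 3, "nai": STEEM_NAI}}}
        self.assertEqual(chart_data(op), {"count": 1, "steem": 250.0, "sbd": 0.0})
```

Saved as a `test_*.py` file, this can be run with `python -m unittest`; keeping the helper free of database access is what makes it testable in isolation.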

Your contribution has been evaluated according to Utopian policies and guidelines, as well as a predefined set of questions pertaining to the category.

To view those questions and the relevant answers related to your post, click here.

Need help? Chat with us on Discord.

[utopian-moderator]

Thanks for the feedback. What would be the advantage of storing the subclasses separately?

The errors are due to the ongoing sync with the blockchain. The displayed stats request data from the last 24 hours and 30 days; since the application is still catching up, there is no data for those periods yet.

It will make your codebase more organised (a clearer structure) and reduce conflicts when many developers work on the project.

Thanks

Thank you for your review, @justyy! Keep up the good work!

After bringing up this project a year ago, it's great to see you are actually developing it now! Does it need to sync with the blockchain again every time you make an update, or is it just for this update? Also, I'm finally starting to use relational databases a bit more often, and was wondering what your process for creating the database model was like?

The next update sounds pretty complicated from a design point of view (I'm not that good at it myself, so 31 different buttons sounds pretty intimidating). I'm looking forward to seeing how that turns out as well. Do you have any other future plans, or something like a general roadmap?

I finally decided to take on all the required full-stack technologies, and this is a great way to play around with them.

This should be the last full sync. After this, a resync is only needed when major changes are made to the data stored for analysis, and even then only the affected data needs updating, not everything.

This process was quite straightforward. I started by deciding what data to store and kept it separated in different tables for quick access. The biggest problem is processing the huge number of operations for analysis. I did some testing with 10,000,000 blocks of data, which led to adding indexes to the timestamps.
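To illustrate why the timestamp index matters, the sketch below uses Python's built-in `sqlite3` as a stand-in for MySQL, with a cut-down, hypothetical `transfers` table and made-up rows. With an index on `timestamp`, a range query for one day can search the index instead of scanning every row, which `EXPLAIN QUERY PLAN` reports as a search using the index. The index name and data are illustrative.

```python
import sqlite3
from datetime import datetime, timedelta

# SQLite stands in for MySQL; the table is a cut-down version of the transfers model.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transfers (id INTEGER PRIMARY KEY, sender TEXT, receiver TEXT, "
    "amount TEXT, timestamp TEXT)"
)
# Roughly what DateTimeField(db_index=True) creates for the timestamp column.
conn.execute("CREATE INDEX idx_transfers_timestamp ON transfers (timestamp)")

# One made-up transfer per minute, starting 2019-01-01 00:00:00.
start = datetime(2019, 1, 1)
rows = [
    ("a", "b", "1000", (start + timedelta(minutes=i)).isoformat(sep=" "))
    for i in range(10_000)
]
conn.executemany(
    "INSERT INTO transfers (sender, receiver, amount, timestamp) VALUES (?, ?, ?, ?)", rows
)

# Count the operations in a single day, the kind of query behind a daily chart.
query = "SELECT COUNT(*) FROM transfers WHERE timestamp >= ? AND timestamp < ?"
window = ("2019-01-02 00:00:00", "2019-01-03 00:00:00")

count = conn.execute(query, window).fetchone()[0]
plan = conn.execute("EXPLAIN QUERY PLAN " + query, window).fetchall()
for row in plan:
    print(row[-1])  # the last column holds the human-readable plan step
```

The same reasoning applies to the account columns mentioned below: an index speeds up filtering on that column at the cost of extra storage.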

In the future, if I do more account-specific data analysis, adding indexes to the account columns is most likely also a must. The indexes do become quite large, but they can always be added later on. It is mostly trial and error.

Haha yes, it will be fun... I have done some preparation already: the API is set up. I just need to connect the Django database models to the correct key and, indeed, create 31 buttons.

Yes, the idea is to replace the Chart.js library with something fancier that allows zooming and is better equipped to handle many data points. This should let users select any operation type over any custom period. I would also like to add the ability to combine different charts.

Once that is done, I will look into different analytics, such as tracking which accounts make up most of the volume per operation type over longer periods of time, and perhaps user-specific analytics. This looks to be quite a challenge due to the large amounts of data involved.
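As a rough illustration of the volume-per-account idea, assuming transfers are available as hypothetical `(sender, amount)` pairs, the aggregation reduces to a sum per account followed by a top-n selection. At the data sizes mentioned this would more realistically run inside the database as a `GROUP BY` query, but the logic is the same:

```python
from collections import Counter
from typing import Iterable, List, Tuple


def top_accounts_by_volume(transfers: Iterable[Tuple[str, float]], n: int = 3) -> List[Tuple[str, float]]:
    """Sum the transferred amount per sending account and return the n largest totals."""
    volume = Counter()
    for sender, amount in transfers:
        volume[sender] += amount
    # most_common sorts by total, largest first.
    return volume.most_common(n)
```

Restricting the input to one operation type and one time window gives the "per operation over longer periods" view.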
