Yesterday, September 17th 2018, was our last full day in Puerto Rico and unfortunately it wasn't spent enjoying the beach or visiting some cool places (I'll be posting about our adventures in the future). Instead, I was glued to my computer for about 15 hours straight.

As you may have noticed, the Steem blockchain had some issues yesterday. To explain a little of what happened, I'll first explain what witnesses do (you can read more about that here). Witnesses on the Steem blockchain are block producers elected by the token holders to run the network. Steem uses a consensus mechanism (fancy way of saying "process we all agree to") known as Delegated Proof of Stake or DPoS which means the top 20 witnesses by vote (along with a 21st witness rotated in from all the backups) determine what the Steem blockchain actually is. If 2/3+1 of them agree, the software and protocols which define the blockchain can be changed.

Yesterday there were mainly three different versions of the software running. Version 0.19.6 uses the code prior to AppBase which was announced 7 months ago. Version 0.19.12 uses AppBase. Version 0.20.0 is the recently announced Hardfork 20 code which has been in development for quite some time and is running on a testnet. The plan is (was?) to launch Hardfork 20 (HF20) on September 25th. A blockchain "Hardfork" is when new code is released as part of consensus that is not compatible with the old code which means everyone who wants to participate in the network (applications, exchanges, websites, etc) is required to upgrade.

Hardforks are scary things. Most blockchains avoid them, and rightfully so. At the same time, most blockchains quickly become archaic compared to their new competitors in terms of functionality, speed, usability, and more. DPoS allows Steem to innovate quickly and get consensus from the network for upgrades. This is why Hardfork 20 is called "20." This isn't our first rodeo.

Prior to the hardfork launch date, all network participants are encouraged to test and upgrade their systems. The code has checks like this to ensure code that only works with Hardfork 20 doesn't run before everyone is ready for it:

if( has_hardfork( STEEM_HARDFORK_0_20 ) )

and this:

if( a.voting_manabar.last_update_time <= STEEM_HARDFORK_0_20_TIME )

This is important because if a consensus-breaking change happens it can cause a fork which means two different versions of the Steem blockchain exist simultaneously. A fork is bad. You can read all about it here. Forks happen. I remember watching one on the Bitcoin network in real time in March of 2013. Recovering from them is very, very tricky and involves shutting down one version of the chain and moving forward with the other while working through any specific safeguards built into the system to avoid old forks being considered valid (such as Steem's last irreversible block number).

So What Happened?

Now that we've set the groundwork for how this stuff works, I'll try to explain (to the best of my ability) what I think happened yesterday. Please understand, I'm not a blockchain core developer so some of this may be incorrect. Keep an eye on the steemitblog account for further details.

One of the features of Hardfork 20 is the Upvote Lockout Period:

In Hardfork 17, a change was implemented to prevent upvote abuse by creating a twelve-hour lock-out period at the end of a post’s payout period. During this time, users are no longer allowed to upvote the post.

You can read about how Hardfork 20 makes some really good changes there to prevent downvote abuse in the last 12 hours. As someone who experienced that for months, I personally really appreciate this change. Based on this commit, my hunch is the change here caused a problem around block number 26037589 when one version of the code tried to allow a late upvote and the other version did not:

10 assert_exception: Assert Exception

_db.head_block_time() < comment.cashout_time - STEEM_UPVOTE_LOCKOUT_HF17: Cannot increase payout within last twelve hours before payout.

{}

steem_evaluator.cpp:1383 do_apply

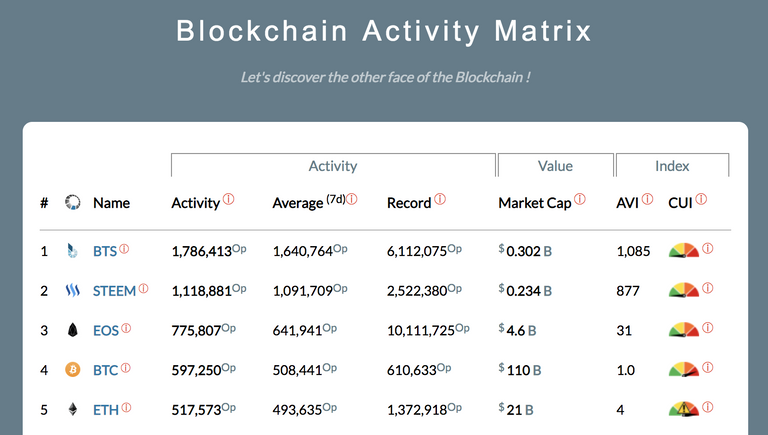

This caused a hardfork as v20 and v19 diverged. I'm not sure, but I wonder if STEEM_UPVOTE_LOCKOUT_SECONDS was needed with another check to see if HF20 was activated yet or not. Once the problem was discovered, the larger and more complicated problem came up of how to get the v19 fork active again. Most of the witnesses, in preparation for the September 25th HF20 switch over had already upgraded to v0.20.0 but most exchanges were probably on v0.19.12 or older. The number one priority of a blockchain is protecting your funds and as witnesses, we didn't want to move ahead with v0.20.0, forcing everyone to upgrade because of a bug. Hardfork changes must happen based on consensus which requires users to vote for witnesses who will support the changes they want. This means we had to fix v19 and get that chain running again. The Steem blockchain is consistently one of the most active blockchains on the planet averaging more than a million transactions a week:

Because of that volume, the v19 fork got out of sync enough to make it quite difficult to activate again. Modifications to the fork database size were needed. Not only did we have to shut down v20 nodes, we had to update v19 nodes with some code changes and ensure the blockchain peers could talk to each other well without getting flooded with invalid blocks from the v20 chain. This involved coordinating one witness to restart their server with enable-stale-production = true (something you otherwise want to avoid) and required-participation = 0 which means the blockchain can move forward, even if you don't have the normal requirement of a minimum number of witnesses participating in order to produce blocks (33%). We also had to configure a checkpoint which ensures our nodes will follow the correct fork.

Many of the top witnesses (myself included) have backup servers and in situations like this are careful to ensure their backup servers are still running the old version (v19) in case there's a problem with the new code. Unfortunately for many of us, rebuilding the code, adjusting config.ini settings, and restarting our nodes resulted in a forced, unplanned full replay of the blockchain which takes many hours. My nodes took 4.39 hours to replay and sync up. Witnesses running v20 had to download, uncompress, and replay the entire v19 block_log to get running again as well.

All told, it was a 15-hour day in front of my computer. Many witnesses went without sleep to help contribute to this process and provide logs to Steemit, Inc. and community developers (I'll let the heroes who contributed tell their own stories). Communication cordination happened on Steem.chat and Slack among top witnesses during the entire time. Though I've advocated for more real-time transparency in the past, in situations like this, it's helpful to keep the noise down and avoid too many cooks in the kitchen. Even with only about 50 people in a chat room, it's challenging to sift through all the different code patch proposals and ideas circulating about the best path forward. It's also important to ensure information shared is kept private so the chain can't be easily attacked while it's in a vulnerable state.

Securing a distributed consensus blockchain with on-chain governance is really hard. Some who are, for lack of a better word, ignorant of the work involved like to gain attention by criticizing what they don't understand and finding fault in things they didn't build. Every time a complicated software project has a bug, they'll ask why more testing wasn't done or how this could have happened. In my 20+ years of software development experience, I understand that some bugs just happen. They make up the perfect storm of untestable, edge-case scenarios. Could this have been avoided? Maybe, but it would have required a specific set of circumstances on the testnet which includes multiple versions running simultaneously with an upvote in the last 12 hours as consensus flips from v19 to v20. Given all the possible permutations of how the Steem blockchain can be used with tens of thousands of active accounts in any given month, testing for all scenarios is currently impossible.

In situations like this, all you can do is the best you can do. Recover quickly, recover securely, protect user funds, learn from any mistakes made, and improve for the future. I think the top witnesses are doing exactly that. We all worked together like I've never seen before and learned some new things along the way. I won't include the details here because in moments like this, privacy is important to ensure bad actors don't exploit forks or code changes for their own gain at the expense of the network. If you want to know more, ask the witnesses you support how they were involved.

Conclusion

Though the process was a bit rough and took longer than any of us would have liked, I'm proud of how the team came together with a good combination of patience, exploration, and support to get this chain going again. I don't know what this means for Hardfork 20's release date or if this is the only obscure bug lurking in that code. I do know it was a frustrating way to spend my last full day in Puerto Rico (the beach would have been so much nicer!), but I would do it again because I love this blockchain and the community that supports it.

Please stay tuned for more official announcements from Steemit, Inc. and other witnesses. If I've misrepresented something here, please comment below as a correction, and I'll get to it when I can (we're flying back to Nashville today). Both my primary and backup witness nodes are running on v0.19.12 again and my seed node should finish replaying soon. As always, if you have questions, please feel free to ask them, and I'll do my best to answer them or direct you to someone who can.

Luke Stokes is a father, husband, programmer, STEEM witness, DAC launcher, and voluntaryist who wants to help create a world we all want to live in. Learn about cryptocurrency at UnderstandingBlockchainFreedom.com

Woah, I thought I had it bad, but that is really bad timing for you.

Nevertheless, I really appreciate the inside information on what exactly happened, since the information-flow wasn't that good. I understand of course that 50 people in a chat-room are already enough, but I would still have appreciated it if someone from Steemit Inc. or somebody else could have given some updates, besides:

"stay calm, downgrade to 0.19.x and don't talk in this chat-room"With that said: I hope you can use today to relax a bit from yesterday's stress.

Perhaps there should be an info page for witnesses behind a steemconnect login so someone from stinc can inform and keep others updated with pertinent information.

The top witnesses defining consensus stayed in constant communication throughout this issue.

Yes i know. I meant for the rest.

i think there was communication through SteemNetwork account on Twitter.

I thought it might just be a good idea to be able to keep non-critical witnesses up to date just in case. from my understanding, @abit played a large role in it but at the time wasn't in the top 20. It could also help the other witnesses feel not left out completely as they are still somewhat in the loop. It only really requires basic information which then would likely be spread throughout the community via chats and reduce the amount of FUD.

Flying with three kids isn’t usually that relaxing, but the first leg of the trip was great and my noise canceling headphones work great. :)

Yeah, this one was stressful. On other issues I’ve spent time connecting with other communities to inform people in real time, but this time I was deeply focused on the conversations taking place and had one of the few nodes still untouched and running v19. Unfortunately my node (like many others) trigger a replay when I tried to launch it. Also, being in an Airbnb on an island with less fha ideal internet had me a little out of my element.

Communication is important Abduzhapparov I did tweet about things as soon as it was happening. It’s hard to give details until we know what’s actually going on. Otherwise we get too much speculation and knee-jerk reactions which can cause other problems.

Better than being on the plane ride back, I suppose.

Indeed. :)

Thanks for dealing with the issue professionally. Bugs happen, especially with something as dynamic, interactive, and new as a live blockchain running social media platforms.

I think the whole event, while unfortunate, is demonstrative of the strong community infrastructure behind what is largely a cutting edge technology.

Technically, we either migrated back to the original v0.19 code.... but in order to gain consensus, some patching with the server to ignore v0.20 acceptance of the 12 hour window for voting was necessary.

I patched my witness node myself after learning about what was needed.

It was a tricky situation indeed. I understand why it took as long as it did to clean up. (I went into further detail here.

Glad to see you are up and running again!

Yeah the almost five hour replays were extra difficult as well. It seems some had to replay and others didn’t. That added a lot of drama. I was ready to go with my v19 node but vanderberg asked me to hold off since he didn’t want to risk anymore replays and we wanted to keep v19 nodes ready to restart with enable stale production if needed. Eventually we mostly had to replay anyway. By the time I tried, my v20 node already downloaded, decompressed, and replayed as well.

Yes, by the time you realized your replay, when synced was no good, you had to replay again. Tough times this replay stuff.

Replaying should never be necessary "EVER"... because replaying from genesis is a terrible idea. Archiving the first 2 years of steem... and keeping it in a separate archive database should be sufficient.

If the code changes, and can't read the archived format anymore.. there should be a separate utility tool created to port the old archive database to the new format, that runs independently of steemd.

Going forward, as the blockchain gets huge... these designs need to happen sooner than later.

The challenge with that idea is that it would no longer be a blockchain. If the data isn’t immutably stored in a way that any node could sync with, things might get weird.

It's important that one is able to replay and verify the full blockchain, and it's also important that people actually do this exercise every now and then (and particularly after the network has been bootstrapped like this), but in situations like this one really want to be able to bootstrap things without having to do a full replay from the genesis block - at least, one should be able to bootstrap things only from a known recent checkpoint.

It's rare to see a social media platform have outages lasting for hours, it's rare to see banking solutions having outages lasting for many hours, and it's even rarer to see major blockchain solutions having long-lasting outages.

Bitcoin had a similar chain fork in March 2013, it took six hours to resolve the situation. Lots of trust were lost as double-spends were possible during those six hours - Steem has obviously learned from this, as the network was shut down to avoid such problems. Anyway, given that the chain actually forked, and invalid blocks got produced by the 0.20-nodes, did any transactions get rolled back?

No, a checksum value was implemented, so we were all on the same fork.

Since witness nodes were not producing properly, there was no conensus for any of the chain forks.

Any transactions that were attempted to be sent, weren't written into any particular chain.

So exchanges and the like, wouldn't have had transfers completed while it was in that state.

So that is the long way of answering "did any transactions get rolled back" (I assume you mean, did double spends occur?) --- no.

"Double spend" is one thing, posting something to the blockchain, seeing that it got posted, and then having it deleted the next day is another. I guess there is no such concept as "unconfirmed transaction" in Steem, a transaction that isn't written to the blockchain in due time is probably lost?

I noticed one of the emergency patches was like "ignore all blocks produced while the blockchain was down", that's part of why I'm asking.

100% UpVote + ReSteemed...

Cheers !!

Thank you @lukestokes! Sorry to hear about your shortened vacation but thank you for your work.

On a positive, Steem jumped a little bit :)

Hahah... I was laughing about that also. Everything was red but Steem.

Nice to hear it really was all hands on deck during the outage - I was searching out information on the outage and found very little - except for one ranter on Twitter that speculated the problem is due to many inactive witnesses. I'm positively sure that Steem comes strengthened out from this. Still, it's quite unexpected and unheard of with such a long outage, so I hope knowledge is drawn out of this experience and used to prevent such long outage next time...thank you

I take my hat of for you Luke! As entrepreneurs we have to sacrafice personal time to work.

I try to look at the positive side of things... While there was a loss due to opportunity costs, when things got back online, everything was good asbif nothing had happened which demonstrates that the “failsafe” works to protect the blockchain and the funds on it. I also think it was beat for this to occur now rather than after the HF 20 as it now provides time for the witnesses to consider what steps are needed for the transition next week, if it stays there. While I imagine it was tough to find consensus, it was resolved... Now it is all about how we learn and improve for the future as the ecosystem continues to grow.

Yep. @steemitblog put out a good post with more details as well.

I had no lack of faith at all in the Steem blockchain.. That is unfortunate that you had to miss your last day though. 😕

Hopefully in the Future Steem will allow you to spend as much time as you want down there!! 🌴

It’s part of the job. :)

100% upvote thank you for all you do on the steem network and keeping it running

Thanks for all that you do to support the network @lukestokes!

Sorry it had to ruin your last day in PR (but from the sound of it, you will have plenty of time to spend down there in the future!)

Yep, there will be many beach days in the future.

Man what a bummer to have this whole thing happen when you're holiday-ing. Thanks for all the work and hassle you went through (I know it was the right thing to vote for you as witness).

I may know nothing about the stress you and the other witness must have felt glued to the screen, but I do know that being able to comment this right now had not possible had not been for that.

It's communications like this that gives confidence in a witness.

Thank you for your support. A lot of great witnesses worked really hard. Makes me proud to work with them.

Thanks for all your work to get us back online. I know some people were worried, but I've seen the team sort issues like this before and had confidence.

Thank you for the confidence. It was a little bumpy at times, but we got through it.

Sorry @lukestokes you had to spend time in Puerto Rico rectifying all this. But as Token holders we surely appreciate you soooo much.

Thank you, Sir , for the excellent job you are doing !!

This is an important point that I hadn’t considered. Maybe 99.9% transparency has advantages over 100% transparency. If it does, who one gives one’s witness votes to is even more important.

I think many were already waiting for your update on the incident, since you're well known for providing a great insight, without getting lost in details only a few of us may comprehend :-)

My biggest takeaway of this situation has been once more that in times of pressure and problems there is always one thing you can rely on: this community.

Thanks for your efforts during the crash and the time dedicated to inform us!

Resteemed

Nice to hear it really was all hands on deck during the outage - I was searching out information on the outage and found very little - except for one ranter on Twitter that speculated the problem is due to many inactive witnesses. I'm positively sure that Steem comes strengthened out from this. Still, it's quite unexpected and unheard of with such a long outage, so I hope knowledge is drawn out of this experience and used to prevent such long outage next time.

One of my pet peeves - it's horrible and totally avoidable that all interfaces to Steem falls down in such a circumstance. I strongly believe that old content as well as user balances should be online and available even though the blockchain is not advancing. It doesn't sound like a very big job to ensure nodes will stay up and deliver content (up to the block where things starts going wrong), but without producing, broadcasting or accepting blocks.

Thanks for explaining the situation, Luke!! Glad all is going well again!!

Thanks for this update. I'm just now reading it, trying to find out a little bit of what's happening with HF-20.

After the fork on the 25th, I read certain posts that were immediately critical and excoriating of the fork and the team. On this post, on the other hand, I at least got some clear perspective about the reasons for the fork and the difficulties in implementing it.

Keep up the good work ... particularly thru this rather tough time.