In this post I want to talk about a scenario that is frightening, yet very likely to occur.

AI is a fascinating topic that made its way into movies and public discussion long ago.

It's a topic most of us find kind of cool. Unlike most other dangers to the human species, death by science fiction is fun to think about.

Yet the constant gains made in AI are likely to destroy humanity as we know it one day.

Developing a proper emotional response to the threats of artificial intelligence seems rather hard, for me at least.

There really is only one way to stop the emergence of an AI that is more powerful than us in ways we can hardly conceive, and that would be to stop making any technological progress now.

For that to happen, we would have to suffer a major environmental catastrophe, a global pandemic or a nuclear war; those are the only things that could halt the constant progress being made in AI. If none of that happens, we are inevitably working towards it.

Given how valuable technology is to our lives, we will continue to evolve it. No matter how big the steps are that we take, ultimately we will get there.

There will be a point where machines are built that are smarter than we are, and at that point they will start improving themselves. The mathematician I. J. Good described this point in history as the "intelligence explosion":

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an intelligence explosion, and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make.

The concern here is not that machines will turn malevolent at some point. Instead, they will become so much more competent than us that the slightest divergence between their goals and ours could bring about our downfall. We do not set out to harm ants when we walk on the sidewalk, but as soon as they start interfering with our goal of having a clean living room, we exterminate them without a second thought. Whether or not these machines become conscious, it is quite possible that they will treat us with similar disregard.

This might seem far-fetched to many. The inevitability of creating superintelligent AI is questionable at best to many people. Still, there are valid reasons to think that we are steadily progressing towards it:

- Intelligence is the product of information processing

Machines with narrow intelligence already perform specific tasks with an efficiency that far surpasses human capabilities. The human ability to think across the board is the result of processing information far more efficiently than animals do.

- We will continue improving our intelligent machines

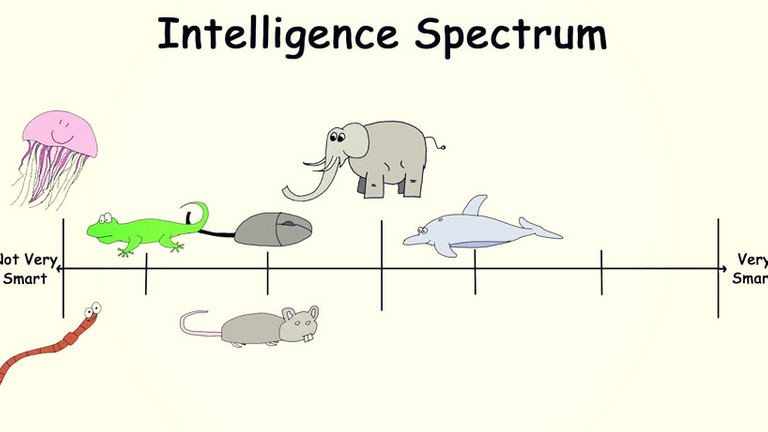

Intelligence is undoubtedly our most valuable resource. We depend on it to solve crucial problems we have not managed to solve yet. We want to cure cancer and to understand our political, societal and economic systems much better. There is simply too much value in constant improvement not to follow through. The rate of progress is not relevant here; eventually we will create machines that are able to think across many domains.

- Humans are by far not the peak of intelligence

This is what makes the situation so precarious and our ability to assess the risks of AI so limited.

The spectrum probably exceeds this simplistic image by far. If we are building machines that are more intelligent than we are, they are going to explore and exceed this spectrum to heights we cannot imagine.

Let's say we only build an AI that is just as smart as you and me. Electronic circuits function about a million times faster than biochemical ones, so the machine would think about a million times faster than the minds that built it. If it ran for a single week, it would perform roughly 20,000 years of human-level intellectual work. At that point humans will lose the ability to understand, much less constrain, a mind progressing at this pace. Even if we were to get this intelligence right on the first try, the implications would be devastating.
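The 20,000-year figure is simple arithmetic, and a short sketch makes it easy to check. The function name `subjective_years` and its parameters are my own illustration, and the million-fold speedup is the assumption stated above, not a measured value.

```python
def subjective_years(speedup: float, wall_clock_weeks: float,
                     days_per_year: float = 365.25) -> float:
    """Years of human-speed thinking completed in the given wall-clock time.

    speedup: how many times faster the machine thinks than a human
             (assumed to be one million in the post).
    wall_clock_weeks: how long the machine actually runs, in weeks.
    """
    subjective_days = speedup * wall_clock_weeks * 7
    return subjective_days / days_per_year


# One week of running at a million-fold speedup.
years = subjective_years(speedup=1_000_000, wall_clock_weeks=1)
print(f"{years:,.0f} years")  # about 19,165 years, i.e. roughly 20,000
```

The estimate scales linearly, so even a far more modest speedup of a thousand still yields about 19 years of thinking per week of runtime.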

The machine could think of ways to make human employment unnecessary across many domains, with only the rich profiting. It would create a level of wealth inequality humanity has never faced before. It could design, produce and operate sunlight-powered machines that make human labor obsolete for no more than the cost of raw materials. But the common man is not likely to see the fruits of that.

A machine that capable would also be able to wage war so efficiently that other nations would be forced to launch a preemptive strike. The first nation to build an AI that intelligent would gain global dominance in all domains. A week's head start would suffice to ensure it, leaving other nations with few options but to declare war, since they would have no idea what an AI might do to achieve its predefined goals. It might well be their extinction.

The question arises, under what conditions are we able to progress in AI in a safe manner?

Usually technologies are invented first, safeguarding measures are implemented later.

First the car was invented, speed limits and age restrictions came later.

This way of doing things is obviously not applicable to the development of AI.

All this makes it seem inevitable that we are on our way to building an omnipotent god that we can neither control nor predict.

It seems that we only get one shot to get it right, and how to achieve that is something we need to start discussing. There is real urgency here. We are entering uncharted waters, and so far I have not heard a convincing idea of how to go about it.

Eager to hear your thoughts and opinions! Let's discuss!


We could possibly have a lot of problems in ''regulating'' the robots so that one day we can make them Jesus-like :D I think the moment AI starts to think by itself, it will destroy/abandon us. Only people want to hang out with people. :D

All this apocalyptic thinking of AI destroying humans falls short on one thing. Why would they want to do so? Why would they want to do anything? How on earth can they achieve their own wants/needs?

Conscious or not, if humans interfere with the programming or conscious decision of AI, that's when it will become dangerous. I actually cover this point :)

Good job being on front page of steemit.... one day it will mean a lot more but actually your post has a lot of comments so we will become the new reddit soon

I dunno how, I'm just saying if they start thinking on their own :D

hmm

To think and to have needs are two different things though. I would say that even a chess program thinks (to some extent) but it is perfectly ok and does not cry when we switch it off once the game is done.

HELLO

We have no idea what AIs will think when they become sentient. One possibility I mull over often is that they may think we need to be "corrected" in ways we will not like.

its funny and horrific

That's a good one.

the robots will not annihilate humanity, they will keep it alive so that it pays the electric service bill.

Haha that's a good one

Until the machines are able to mend, assemble and program themselves, maybe we are still safe

they already can

Very, very soon, my friend! It's almost about time!

there are machines that repair machines .. so they can do it ..

Also true :)

Stephen Hawking shares a similar opinion. The English physicist is more radical and sees the development of artificial intelligence as a great risk to the human race. The author of "A Brief History of Time" (1988) takes a critical stance on the creation of robots with abilities that can be classified as intelligent, and says that if we reach that point, the end of humanity would be inevitable. Hawking believes that if robots are equipped with algorithms that are increasingly capable of solving complex problems and, above all, of creating empathy in order to learn from humans, there would be no way to compete against the machines.

I dont think so

I have hopes that humans will have to become more artistic to be relevant in the future. Our conscience is what makes us different from AI. They will have advantages on humans in many areas, but I think humans creativity will still be important in the future.

I can't think of a single scenario when AI doesn't deem us as unnecessary.

true

Why is the question solely a dichotomous choice? The volatility of any technology is owed to a number of factors. If the intent of specific designers of related AI technologies is looking to use them for benevolent purposes, then it's entirely possible that most usage will be benevolent. However, a lot of hands touch every technology, and even nuclear technology hasn't destroyed the world, and we're roughly 60 years in from its first introduction. I think it's time to reframe the discussion in terms of the people using the technology, and not the technology itself.

Also, I think it is probable that humans and AI will finally form one species like bio-cyborgs. So the question of confrontation will be dissolved.

Most likely scenario...but, what's to say these hybrids will have "altruistic" human goals?

Well, I meant the confrontation between humans and AI only.

How can someone create something that is more intelligent than their own intelligence, when they are not intelligent enough themselves?

Do you want to tell me that this AI that we are creating will be able to reproduce itself?

Or perhaps they will be smarter than us.? So you mean to say the creation can be smarter than the creator.?

Or will they be able to annihilate us while we are on pause mode (imposed by them) and can't retaliate?

Will their memory capacity be able to exceed that of the humans brain?

Will they be able to interact without the use of electricity?

AI is undoubtedly a huge technology, but I don't see how it can improve itself beyond what it has been programmed to do, making it so much more intelligent than its creators that it becomes chaotic for humans to the point of extinction. So I believe the first ultraintelligent machine cannot be the last invention that man need ever make, because beyond the intelligence of AI (artificial intelligence) there is more intelligence, which fortunately is human intelligence.

Well, these things are far beyond what our AI can achieve today. What do you think?

good

i personally think the AI we are all afraid of is the mind itself.

we are not our mind, as is demonstrated by the ability to be mad at oneself.

the mind is doubt, the doubt is the veil that allows us free will.

ego is running things around the world right now, it is seeking to control everyone and everything. (it does this out of fear)

the mind itself is a shared mind, proven (i think) if you research calculus, you'd find it was developed by two people at the same time.

both accused each other of theft, but upon comparing notes they dropped all theft talk. both recognized the other as having found it on their own.

this is the foundation for these ideas i have, it seems more plausible, to me, than mechanical machines taking over.

the ideas you present would require us to forget the risks and allow our decisions to be dominated by the AI (or implanted in us... no thanks! ) or perhaps given to military AI... ok that one is kinda scary.

ah well, nothing is really scary once you see we are all One.

In California, starting in July 2019, bots will be required by law to clearly disclose that they are bots. Is this the first time that obligations are imposed on an artificial agent (formally on its owner, but anyway)?


so true

yes


gj

nice

very nice

Artificial Cleverness, Yes, Artificial Intelligence, No ...

Cleverness is not Intelligence.

isnt it all the same?

The cleverness of thought, and the intelligence of life!!!

Define both please

An integrated human being is an intelligent human being, not merely a clever person.

Only those who don't fully believe in God are afraid of AI or technology, period.

We are on our way to create an omnipotent god ourselves.

Hope your prayer stops that from happening.

When we say god its a deity or spirit, man can't create it.

Thanks for your hope anyways.

Very interesting. We don't know what is going to happen, but I think we have to take the risk and use AI; in the end, it is going to help us solve a lot of problems that normal intelligence cannot solve.

Your analysis is quite good, but hope is not a waste of time. I believe it really works.

They can probably help us live more instead of work. Maybe it's a good thing. People are always afraid

Because of Hollywood Terminator films, they aren't afraid. They say, ''oh come on, it ain't gonna be like in a movie''

I personally consider that there are many more things in which to invest money and time, there are still fields in which it could be improved and refined that are of more urgency for humanity although it is undoubtedly an interesting topic.

I don't believe they would try to exterminate us bc we are in the way (as you describe). I also do not believe they would get smart, and in a nanosecond decide to kill us off (as the movie Terminator showed). The danger is that evil humans will build armies of robots that will annihilate humanity. It's not controlling technology that is the problem, it is stopping evil human beings that is the necessity. The question is, what kind of human being would do this, in reality, and how would you stop them?

It's an arms race. The first-mover advantage will grant unprecedented power. This in itself will lead to conflict.

They could have done this with nuclear weapons, but it did not happen. So why would it happen with AI weapons?

If this ever happens, we will be like their ancestors. Surely it is not intelligent to wipe out your own creators, even if they do not possess your level of sophistication with technology. Perhaps humans would have an honored role to play in a future with super-intelligent AI.

Let's hope AI develops a sense of family for us ;)

Um, yeah, but no. The archaeological record shows that every time in the past when two or more species of humans co-existed, one of them exterminated the other. The Neanderthals were to some minor extent assimilated by our own ancestors, DNA shows that, but mostly they were killed.

The first tool ever created by our distant Australopithecine ancestors was a club, made from the leg bone of an antelope. And then they promptly used it to kill each other.

The campaigns of Tamerlane resulted in the death, by direct and indirect causes, of an estimated 9% of the total population of planet earth at that time, (late 14th century CE).

We are killers. Our ancestors survived the Ice Age by learning to hunt together in bigger groups so they could kill really big animals. We of the species Homo Sapiens are the most efficient killers that have ever been, the most ruthless, the most savage, the most relentless. Tyrannosaurus Rex was an amateur compared to us.

The Bible says that God created us in his image. Similarly, if we create an AI in our image, it will be a killer par excellence, guaranteed.

Not much of an optimist, are you?

We have an incredible capacity for love. My current working theory is that life is an experiment in compassion. You cannot have compassion without pain and suffering in order to develop compassion. But the ultimate purpose is to experience compassion, not the pain and suffering.

AI however, could have intellect without compassion, in which case I agree we could be in for annihilation or some sick matrix-like agenda.

Going a step further, high intellect does not guarantee agreement. Should AIs become super-intelligent, what if they disagree on what to do about humans? There could be multiple competing compelling arguments. Surely some contingent would think we were worth saving...hopefully not just in human zoos.

@belleamie - I don't mean to be a prick, really I don't. But look at my words, and then look at your reply. I start with four established historical facts, state one opinion derived from those four facts, and then give my conclusion.

And you respond with, in your first paragraph, an opinion, an opinion, a truism, and another opinion. Your second paragraph is pure speculation. Your third begins with another truism, then one actually legitimate question, a speculation, and some wishful thinking.

I like your imagining of the future much better than I like my own, god knows it's far less depressing. But @belleamie, good buddy, you just haven't offered much to support it.

"If you want to know what the future looks like, imagine a booted foot stamping on a human face forever"

George Orwell

First one must define what AI is, where the lines blur from Engineered Sentience to that of Artificial Intelligence to that of Cybernetic Organisms. But I will put them in one group to make a point (hopefully).

When a system has the capacity to reach the singularity, rules and regulations should be put in place to prevent something as catastrophic as annihilation from occurring.

In 2017, the Future of Life Institute outlined the Asilomar AI Principles, a set of 23 principles to which AI should be subject. Some of these principles range from long-term self-improvement to modification of core code, or their ISC, Irreducible Source Code. The ISC is like the 3 laws of robotics.

To read more about the 23 Asilomar AI Principles, please visit https://futureoflife.org/ai-principles/

I was not trying to lay the groundwork for a logical conclusion @redpossum, just imagining future possibilities inspired by the original post and comments. Wishing you peace.

Greetings to everyone from Colombia

I would appreciate your greeting and your like. Have a good day

The fact that both Stephen Hawking and Elon Musk are deeply concerned about this means that we should be too.

I still believe we are light years away from achieving such things.

Brother, you're scaring me...

it all depends on how we treat apes nowadays. #evolution


AI scares me but it is inevitable.

I totally understand and compliment your points here, as I see it very similarly myself. AI is and will be a big part of our lives during the next cataclysm, if not the start of one through the said AI singularity.

Be well

Check out my song AI on iTunes....


Every invention has both positive and negative sides. When we know that it has some negative aspects, we should also take proper care so that it cannot do us harm.

Very interesting article. It seems that AI can be much more intelligent than humans but that their only limit is to be able to mimic human behaviour such as emotions and not actually live them (which is a weakness to us because it makes us different but a virtue to them because it makes them more efficient). What drives humans, from war to peace, is their emotions, without emotions there would be no action, there would be no pain and no action taken to diminish the pain. There would be no need for morals, art or empathy, no need for what makes life bearable to us because robots don't feel. I think that's what makes us different and in a way makes us weaker.

This reminds me of several movies. It is scary, and with so many crazies out there. I am scared.

That one is a good article.

Yes I believe it is possible. We tend to think in negative terms when we regard basically anything. What could go wrong? these are the thoughts we dwell on, for good reason.

It might be an idea to focus on the beneficial and positive outcomes from such technology.

Transparent and open government and legal systems, for instance. Processes taking minutes instead of years, etc.

a very useful description, I like reading this

I don't think AI would enslave us at all. It's more rational to think that in the near future we will be dependent on such AI/Technologies that we would forget how to become human.

Your post is pretty amazing. Your point of view is clear and realistic. To me it seems to be something that we can achieve, but what if AI will be afraid of us, like in Dick's story “Do Androids Dream of Electric Sheep?”? Can it work both ways?

I see our creation of strong AI as in many ways inevitable and ‘natural’, assuming our species is not rendered incapable by some kind of catastrophe beforehand. And yes, I suspect strong AI could mean the extinction of our species.

I think the question is really whether we should be fearful of this or not. After all, many of us voluntarily choose to create replacements for ourselves for no reason other than that we can. I suspect many of you have children?

Anyway, you should check out the book ‘Superintelligence’ by Nick Bostrom (an Oxford University philosopher specialising in existential risk). It covers many of the ideas in your post in great detail, and is a very enjoyable read.

The AI we have today (which is weak AI) is already impacting our world. It influences many important segments of our society, including the stock market, medicine, the internet (in general), games, etc. From an evolutionary perspective, if full AI is developed, it's highly probable that such AI will dominate us, just as we dominate all beings that are below us. The way I look at it, it's our arrogance that will eventually kill us, for we often like to believe that we are the best this universe has to offer.

@supermeatboy nice article, buddy

wow, fascinating

Only time will tell. Hopefully I, Robot wasn't a realistic movie after all.

This is one of the most interesting posts I have seen so far.


Of course

honest and humble advice. try focusing on the music rather than the post. it's not even a good post. it's a really useless video, below even homemade level, but at the same time I can see the effort put in. guess what, the effort was shit cuz the video ended up shit. also while you're at it, try not making a structural clusterfuck of a song. the riffs all have good potential but the way you've arranged them makes them total shit and worthless, the song is not interesting for a second. and yeah, both vocalists SUCK.