Stable Whaaaaat?!

Yes I promise, it is stable.. ish

Artificial Intelligence?

So, after a couple of weeks of playing around and testing, I think I may have (somewhat) found a way to more or less get what I want out of the AI.

The Fun Stuff

So, as you start to understand (more or less) how the diffusion and text models interpret your words, it all comes together and you can start writing prompts and tweaking settings to make some super cool things...

Not So Fun Stuff

While playing around with a lot of settings and models, it can get quite annoying and tiring when the produced result is just a tinnyyy bit off, which leads to some wacky creations...

![cute chibi animal digital art mascot chibi cute adorable [Stable Diffusion plms] 361281737.png](https://images.hive.blog/768x0/https://files.peakd.com/file/peakd-hive/deadeagle63/AKLt4ieR6R8g78PxNS5PMarnUY7giETCTLHP1qDYWYsfnZJCuvA2ruEP9PvHsSY.png)

Whatcha usiiing?

![a galaxy in a small glass bottle as a 3d render dreamy mystical chaotic disturbed [Stable Diffusion plms] 1352883676.png](https://images.hive.blog/768x0/https://files.peakd.com/file/peakd-hive/deadeagle63/AKDhF6YJwdrEoSGg5WZtuRj2ixQML32tchVAkaxbqPRFTFvbCuw58oMeNDtdgNN.png)

As in many of my previous AI posts, I use Visions of Chaos with a bunch of AI models. For text-to-image generation I typically use Stable Diffusion, but in the past I have used VQGAN or DALL-E.

Okay, but gimme the settings bro...

Well, that is easier said than done. For the most part it's not a one-size-fits-all solution, it's more of a duct-tape kind of solution (for now). But here are some tips:

Big Yesses

For the most part, any prompt will create something more or less decent, but just like regular art you need to carve it out. When giving it a prompt, try to be as specific as you can: if you want the image to look like a digital painting, be cinematic, have a dark ambience, etc., then specify it.

Big NO NO's

AI is not some magical tool that just knows what you want it to produce; it can only do what you tell it to do. So the vaguer you are, the more it will struggle and the poorer the results will look.

Some Examples

The images below are prime examples: the images on the left showcase barely any descriptors, while the images on the right contain far more descriptors. All the artworks were generated over 500 iterations.

*NOTE: FOR THE FIRST TWO I FORGOT TO NOTE THE SEED*
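On the forgotten seeds: the image file names in this post seem to follow a "prompt [model sampler] number.png" pattern, and for the Thresh/Pyke image that trailing number matches the seed given in its caption. Assuming the pattern holds for every output (it is only a guess based on the names seen here), a small Python sketch could pull the prompt and seed back out of a file name. The function name `parse_output_name` and the regular expression are made up for illustration and are not part of Visions of Chaos.

```python
import re

# Assumed naming pattern, inferred from the file names in this post:
#   "<prompt> [<model and sampler>] <seed>.<extension>"
FILENAME_RE = re.compile(
    r"^(?P<prompt>.+?) \[(?P<engine>[^\]]+)\] (?P<seed>\d+)\.\w+$"
)

def parse_output_name(filename):
    """Split an output file name into prompt, engine and seed (hypothetical helper)."""
    match = FILENAME_RE.match(filename)
    if match is None:
        return None  # the name does not follow the assumed pattern
    return {
        "prompt": match.group("prompt"),
        "engine": match.group("engine"),
        "seed": int(match.group("seed")),
    }

info = parse_output_name("a fantasy landscape [Stable Diffusion plms] 1219434913.png")
print(info)
```

If the name does not match the assumed pattern the helper returns `None`, so it never guesses at a seed.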

![a fantasy landscape [Stable Diffusion plms] 1219434913.png](https://images.hive.blog/768x0/https://files.peakd.com/file/peakd-hive/deadeagle63/AKF7Btf7w5qgYHVuayCr1XryBwGMRAwMCNfsuLi5hMikXB2X8Af4A3nry6CyzKz.jpg)

a fantasy landscape

![thresh combined with pyke from league of legends hooking a soul with his hook [Stable Diffusion plms] 296945706.png](https://images.hive.blog/768x0/https://files.peakd.com/file/peakd-hive/deadeagle63/AKAoqHrG4imyjN4aPrC3NvJsLfcGuF9jFdYPEs11FmUFNHo3Qi1HRQiHiJDryES.png)

thresh combined with pyke from league of legends hooking a soul with his hook seed:296945706

a fantasy landscape featuring mystical plants area landscape hyper realistic detailed fantasy dramatic cinematic

thresh combined with pyke hooking a soul from league of legends, league of legends, detailed, cinematic, dark, digital artwork, concept art, ghostly hands holding the hook, armoured skeleton, thresh, pyke,cinematic lighting, highly detailed, illustration, artstation, digital painting, art by Bob Kehl, Terry Moore, and Greg Rutkowski seed: 1664105712
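The longer prompts above are basically a subject followed by a pile of comma-separated descriptors and some artist names. A rough Python sketch of that habit is below; `build_prompt` is a made-up helper (not something from Visions of Chaos or Stable Diffusion), and dropping repeated descriptors is my own assumption about what keeps a prompt tidy, not a rule of the model.

```python
def build_prompt(subject, descriptors=(), artists=()):
    """Join a subject, style descriptors and optional artist names into one prompt string."""
    parts = [subject.strip()]
    seen = set()
    for descriptor in descriptors:
        cleaned = descriptor.strip()
        key = cleaned.lower()
        # Skip empty entries and case-insensitive repeats (an assumption, see above).
        if cleaned and key not in seen:
            seen.add(key)
            parts.append(cleaned)
    if artists:
        parts.append("art by " + ", ".join(artists))
    return ", ".join(parts)

vague = build_prompt("a fantasy landscape")
detailed = build_prompt(
    "a fantasy landscape featuring mystical plants",
    ["hyper realistic", "detailed", "fantasy", "dramatic", "cinematic"],
)
print(vague)
print(detailed)
```

Keeping the subject and the descriptors separate makes it easy to rerun the same subject with a different style list and compare the results.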

Okay then..

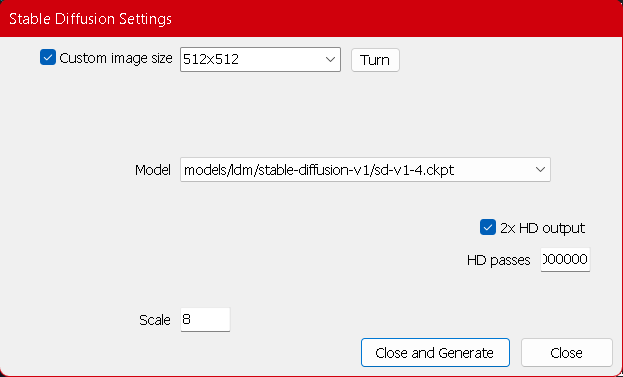

Yep, as you can see, adding more details and descriptors can really take an image to the next level. But of course, as it stands, AI is a tool, not a magic one-size-fits-all solution.

As for my settings, in VoC this would be it:

I just have quite a lot of HD passes on the image to take it from a blurry 512x512 to a nice, crisp and detailed 4096x4096.
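Going from 512 to 4096 is a factor of 8 per side. The post does not say what a single HD pass does, so purely as an assumption, if each pass doubled the side length, the arithmetic would look like this in Python (`upscale_plan` is a hypothetical helper, not a VoC setting):

```python
def upscale_plan(start, target, factor=2):
    """List the side lengths visited when repeatedly scaling `start` up to at least `target`."""
    if start <= 0 or factor <= 1:
        raise ValueError("start must be positive and factor must be greater than 1")
    sizes = [start]
    while sizes[-1] < target:
        sizes.append(sizes[-1] * factor)
    return sizes

plan = upscale_plan(512, 4096)
print(plan)           # [512, 1024, 2048, 4096]
print(len(plan) - 1)  # 3 doubling passes under this assumption
```

With a different per-pass factor the number of passes changes, so treat the 3 as an illustration rather than the actual VoC configuration.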

Conclusion

After using VQGAN and a couple of other models such as disco, I can definitely say Stable Diffusion is currently the best model for getting more natural and coherent results... for the most part. Sometimes you are left questioning where you went wrong, like this:

What?!

I guess by playing around with the "weight of the prompt", which on stable is normally at 50% (at least on nightcafe), you can crank it up to make sure your words have a bigger impact.

Nice work!!!

Exactly that. I found that the more descriptive you are and the more you classify, the better the results it produces, although SD does have problems with legs and hands, well, on v1.4 rn :)

P.S. If you have an Nvidia card with at least 8GB VRAM you can run it locally :D

Sometimes I get stuck on a prompt for hours and waste so much "credit", just to figure out the right order of words.

I admit that I sometimes take ideas from other people's prompts, just to see how they work.

True that. I found, especially when using Nightcafe/starry AI, I was spending such a long time making a prompt.. and then boop, it comes out wonk haha
