In my previous posts (part 1 here, and part 2 here) I talked about what makes a dApp a dApp, and about the decentralization of the Steem ecosystem. When I realized how fundamentally critical it is for a decentralized ecosystem to also have decentralized interfaces into it, I knew I would have to put my money where my mouth is and start the push towards such a model.

Today I am publicly announcing my API infrastructure, reachable at https://anyx.io. After a few weeks of planning, and another for building, testing, and setting up, it's now live and ready to serve Steem users, Apps, and dApps.

Infrastructure Design

At a high level, the infrastructure is quite simple. The goal was to have high-performance API node(s) as the back-end, with a lightweight front-end proxy to redirect traffic appropriately.

To do this, I deployed nginx on the front end (to handle SSL), pointing towards Jussi on the same machine. Jussi is a verification and redirection interface, allowing one to split requests based on type to a specific API backend. This is primarily a form of load balancing, but it also enables specialization among the API nodes in use: not every node needs to contain the entirety of the data. Each node can hold specific API data, and Jussi can intelligently redirect requests to the specialized nodes.

In theory at least. Currently, the Jussi setup I have going is blocked due to the inability to specify ports, as noted in this GitHub issue here. So, while in theory I could load balance among Steem instances, there is currently only one back-end servicing API requests.
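To make the routing idea concrete, here is a minimal sketch of splitting requests by API namespace. It is not Jussi's actual code or configuration format; the upstream table, addresses, ports, and function names are all hypothetical and only illustrate the concept.

```python
# Hypothetical upstream table: API namespace -> back-end URL.
# These addresses are made up and are not the real anyx.io setup.
UPSTREAMS = {
    "follow_api": "http://127.0.0.1:8091",
    "account_history_api": "http://127.0.0.1:8092",
}
DEFAULT_UPSTREAM = "http://127.0.0.1:8090"


def namespace_of(request: dict) -> str:
    """Return the API namespace of a JSON-RPC request, or "" if none.

    Handles "namespace.method" names as well as the older
    {"method": "call", "params": [api, method, args]} form.
    """
    method = request.get("method", "")
    if method == "call":
        params = request.get("params")
        if isinstance(params, list) and params:
            return str(params[0])
        return ""
    return method.split(".", 1)[0] if "." in method else ""


def pick_upstream(request: dict) -> str:
    """Choose the back-end that should serve this request."""
    return UPSTREAMS.get(namespace_of(request), DEFAULT_UPSTREAM)
```

With a table like this, follow requests would go to one specialized node and everything unrecognized would fall through to the default back-end.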

On the back-end, I have a heavyweight, fully customized, hand-built, enterprise grade server. The idea behind hand building the server was to use my knowledge of how Steem functions with different hardware (especially for storage), picking out parts that would give the highest performance. Again, sadly, this was kind of in theory, as some sacrifices were made due to part availability in Canada. The back-end responds to the API requests that Jussi forwards, replying with the data.

Finally, I deployed Lineman on the server. Lineman translates legacy websocket requests into http requests. While not required for an API node to function (the usage of websockets has been deprecated), several services still use them. The two biggest ones are steemd's deprecated cli_wallet, and the standalone Steem wallet Vessel. Supporting alternate wallet methods was critical to my design philosophy of decentralization of interfaces, so I spent the time to make sure that websocket requests work. With some configuration on the front-end, websocket requests go through Lineman first before going to Jussi, and are serviced from the same port (with SSL encryption!) after setting up some magic with nginx.

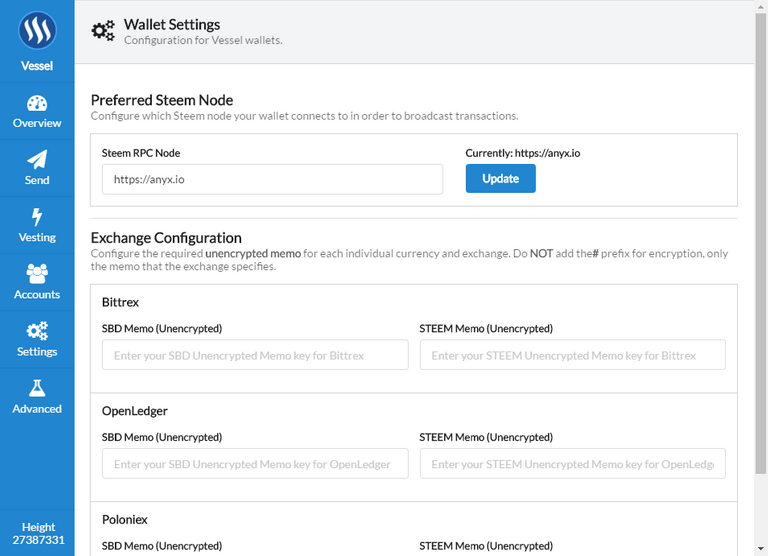

Try it out! Connect your app to https://anyx.io instead of https://api.steemit.com

Or try out ./cli_wallet -s wss://anyx.io

Or try out setting your preferred Steem Node in Vessel here:

At this time, non-ssl requests are not supported. This functionality is unfortunately also blocked due to the limitations of Jussi being unable to specify ports. (Dear Steemit Inc, please fix Jussi.)
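For apps, talking to the node is an ordinary JSON-RPC call over HTTPS. The sketch below uses only the Python standard library. The helper names are my own, and the method name is taken from the public Steem API definitions, so treat it as an assumption about what this node serves.

```python
import json
import urllib.request

NODE_URL = "https://anyx.io"


def build_request(method: str, params: list, request_id: int = 1) -> bytes:
    """Encode a JSON-RPC 2.0 request body."""
    payload = {
        "jsonrpc": "2.0",
        "method": method,
        "params": params,
        "id": request_id,
    }
    return json.dumps(payload).encode("utf-8")


def call(method: str, params: list, url: str = NODE_URL, timeout: float = 10.0) -> dict:
    """POST a JSON-RPC request to the node and return the decoded reply."""
    req = urllib.request.Request(
        url,
        data=build_request(method, params),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Something like call("condenser_api.get_dynamic_global_properties", []) should then return a dictionary whose "result" entry describes the current chain state, assuming the node is reachable and exposes that API.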

Hardware Design

When building any computer, there are a bunch of different hardware choices that need to be made. Enumerating them, they are:

- CPU choice (variant and count)

- RAM choice (mostly size)

- Storage (classes of storage, size of storage, configuration)

- Peripherals (power supply, cooling fans/solution)

Certain hardware aspects are not utilized for Steem, so I have left them out (e.g. accelerators and graphics cards).

CPU Considerations

When building a server for Steem, the CPU is one of the biggest considerations to be made. There are two things to consider for Steem: 1. How much RAM can the CPU support? 2. How fast is single core performance?

The consideration of CPU count is actually not very important if we are just considering Steem's needs. As Steem is single threaded, it quite literally does not matter how many cores are in the socket, as only one will ever be used. However, there are a few other considerations to take into account. If you plan on running multiple instances of Steem on the same physical machine (e.g. via virtualization) for load balancing, you will need high clock speed cores for each process. Furthermore, if you plan on running other services on the same machine, more cores will always help.

Notably, for consensus machines, while CPU single core performance matters a lot during replays, after the replay is complete the performance is less critical. However, for API nodes, the incoming API requests can be mostly parallelized (even using multiple threads). The way this is done involves read and write locks on the database file; this means that while parallelism is available, it still can be throttled by poor single core performance, so striking a good balance is key.

Some other things I will only briefly discuss: PCIe lanes don't really matter (as we do not use PCIe peripherals), CPU cache is not well utilized by Steem and thus does not make a significant impact, and multi-socket configurations aren't particularly well used (aside from supporting more RAM) as Steem itself is single threaded.

RAM Considerations

In terms of RAM considerations, we have 3 classes of hardware we can use for Steem:

- Commodity machines: These can support 64GB for regular chips, and 128GB for HEDT. Non-ECC.

- Workstation Xeons: These are single socket only, and can support 512GB of ECC RAM.

- Regular Xeons: Each socket can support 768GB of ECC RAM. Depending on the chip, they can be configured with multiple sockets on the same motherboard, allowing access to a large memory pool (e.g. a 4-socket system can have 3TB of memory).

For Steem, the current usage at the time of writing is 45 GB for a consensus node. Each API you add will require more storage (for example, the follow plugin requires about 20 GB), adding up to 247 GB for a "full node" with all plugins on the same process (note, history consists of an additional 138 GB on disk).

Notably, if you split up the full node into different parts, each will need a duplicate copy of the 45 GB of consensus data, but you can split the remaining requirements horizontally.
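As a rough worked example of that trade-off, the sketch below uses the 45 GB and 247 GB figures from above. It assumes the plugin data splits evenly across processes, which is an idealisation; real plugins come in uneven sizes.

```python
CONSENSUS_GB = 45    # consensus state, duplicated in every process
FULL_NODE_GB = 247   # single process with all plugins loaded
PLUGIN_GB = FULL_NODE_GB - CONSENSUS_GB  # 202 GB of plugin data


def total_ram_gb(processes: int) -> int:
    """Total RAM across all processes when the plugins are split up."""
    if processes < 1:
        raise ValueError("need at least one process")
    return PLUGIN_GB + CONSENSUS_GB * processes


def per_process_gb(processes: int) -> float:
    """RAM per process, assuming plugin data divides evenly."""
    if processes < 1:
        raise ValueError("need at least one process")
    return CONSENSUS_GB + PLUGIN_GB / processes
```

Under these assumptions, splitting into two processes costs 292 GB in total (146 GB each), and four processes cost 382 GB in total (95.5 GB each): the total grows by 45 GB per extra process, but each individual machine needs far less RAM.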

With these constraints in mind, commodity machines (which have the highest single core speeds) are excellent candidates for consensus nodes, with Workstation class Xeons coming in a close second. However, the scalability of regular Xeons offers an unparalleled ability to condense hardware into a single machine.

As a final note on RAM, there are different properties of the RAM to consider: the RAM timings and speed, as well as the functionality of ECC. In my testing, using a higher speed of RAM can indeed help, but is limited in its effect as performance remains dominated by the single core clock speed of the CPU. Memory channels are not utilized well by Steem due to its single threaded nature, so they are fairly irrelevant at this time.

Using ECC RAM isn't particularly something that can be tested, but its benefit is that long-running processes are far, far less likely to be affected by faults. While most applications for commodity machines are short running and fault tolerant in other ways, long running applications really do benefit from ECC RAM, to avoid an application crash that would corrupt your state.

Storage Considerations

For the consensus state, having a significant amount of RAM matters. However, with the introduction of RocksDB, history data can now be placed on disk. At the time of writing, this consumes about 138 GB of data. Finally, there is the blockchain itself, which is 156 GB.

For storage, there are three important factors: Capacity (amount of GB it can store), Bandwidth (transfer rate for data), and Latency (time between requesting data and beginning to receive it). There are several storage classes that trade off on certain aspects of these for others.

- Traditional Hard Disk Drives (HDDs). These drives offer a large capacity, but at the cost of poor bandwidth and extremely long latency timings. These drives have a very low cost.

- Solid State Drives (SSDs). These offer decent capacity and decent bandwidth, but often lack in latency. These drives are fairly low cost, and are about 6x "faster" than HDDs.

- Non Volatile Memory Express (NVMEs). NVME can reach capacities similar to SSDs, while offering excellent bandwidth and lower latency. These drives are moderately expensive, and are up to 2x to 3x "faster" than SSDs.

- As a final class, we have the new technology '3D XPoint', or Optane drives. While bandwidth numbers are similar to NVME drives, the key advantage that Optane brings is extremely low latency. They can be up to 4x "faster" than NVME, though specifically for random-access workloads. Unfortunately, these drives are very expensive.
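To put the quoted "faster" figures on one scale, the sketch below simply chains the multipliers from the list. Chaining them this way is my own simplification: the figures are loose rules of thumb, and the Optane number applies to random-access workloads only.

```python
SSD_VS_HDD = 6          # SSDs: about 6x an HDD
NVME_VS_SSD = (2, 3)    # NVMe: 2x to 3x an SSD
OPTANE_VS_NVME = 4      # Optane: up to 4x NVMe (random access only)


def relative_to_hdd() -> dict:
    """Return (low, high) speed multipliers for each class, with HDD = 1."""
    nvme = tuple(SSD_VS_HDD * factor for factor in NVME_VS_SSD)
    optane = tuple(OPTANE_VS_NVME * factor for factor in nvme)
    return {
        "HDD": (1, 1),
        "SSD": (SSD_VS_HDD, SSD_VS_HDD),
        "NVMe": nvme,
        "Optane": optane,
    }
```

Read this way, NVMe lands at roughly 12x to 18x an HDD, and Optane at up to 48x to 72x in the best case.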

We have two sets of data to consider for Steem: The blockchain, and the history data. While obviously getting the best-in-class for all data will always see improvements, the benefits diminish depending on workload.

In my experience, any SSD offers perfectly reasonable performance for the blockchain. Storing the blockchain on NVME or Optane offers negligible performance improvements. SSDs also seem to work alright for history data, but an advantage can definitely be seen when moving to NVMEs or Optane. Further, though I have not tested it yet, I anticipate that when random-access requests for history data arrive at the API node, the quicker response time of Optane can be a boon to performance.

Peripheral Considerations

When building any kind of computer, there are a few considerations that are fairly static, that I won't dive too deeply into. Ensure your PSU can adequately feed your computer, and ensure you have sufficient cooling and airflow. Steem does not require a graphics card, and will not use it. Don't bother getting one, a headless server is fine.

My Hardware Choices

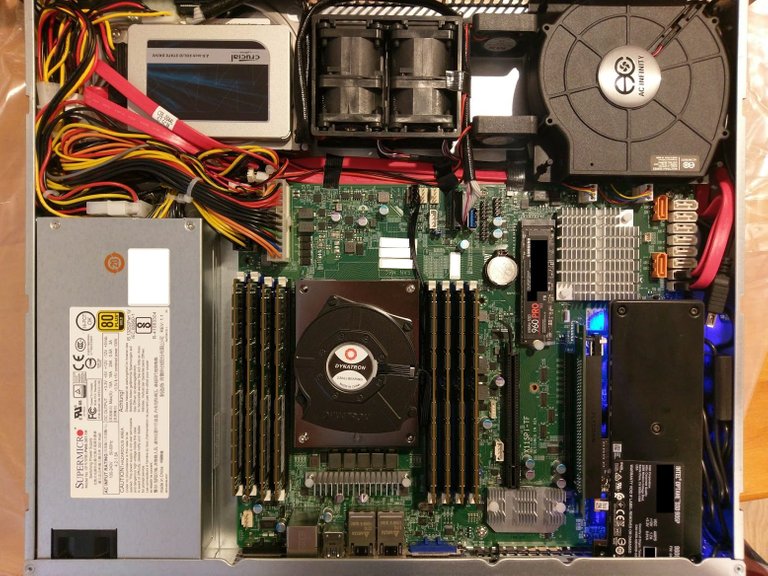

For this section, I'll talk about the "guts" of the infrastructure: building the big fat back-end server.

For the CPU, I actually intended to get a Workstation class Xeon, like the Xeon 2145. While it has a low core count, it can turbo up to 4.5GHz on a single core, which is extremely beneficial for replays. However, that offers little to no advantage once the replay is complete. Unfortunately, I was unable to find a provider that sold them in Canada when I was purchasing, so I went with a Xeon Gold 6130, which was available. This CPU can still go to 3.7 GHz on a single core, which is still quite fast. It does offer a few advantages: it has many more cores, making it more general purpose for running other programs; it allows a future upgrade to a dual-socket configuration by buying only a sister CPU, rather than 2 new CPUs; and it has a greater L3 Cache size (it helps a little bit). However, if you are building a Steem server, I do recommend looking into getting a Workstation Xeon instead.

For RAM, I got 512GB of generic DDR4 2666 ECC RAM by Micron. The large volume (double that of a full node's requirement) was to allow scalability into the future of Steem, as well as the ability to run multiple Steem nodes on the same machine.

For storage, I actually got a bit of everything -- it gave me the ability to test storage classes.

- Optane 905P, 960 GB

- NVME: Samsung 960 pro, 1 TB

- SSD: Crucial MX 500, 1TB

- some crappy HDD I had lying around, 2 TB

With lots of room to play, I currently have the history on the Optane and the blockchain on the NVME.

For peripherals, I chose a 1U Short depth server with a 350 Watt PSU built in. Though the build only uses about 100 Watts running Steem, the extra wattage allows room for growth in the future (and hey, it was on sale). The CPU fan is a blower by Dynatron, and I installed some extra cheap fans to help with airflow.

Some fun bits about the build:

- 1U Short depth is tiny! It was cramped to get everything in there. There is basically no more room for new drives -- though, it won't really be needed.

- Temperatures can get pretty high in a 1U server: under full load the CPU can get up to 80C! This is really due to the limitation of having to use a blower cooler on your CPU. I added some fans to help cope and get the hot air out of the cramped case, which brought the maximum CPU temp down to 70C. When running just Steem however, it only gets up to about 50C, as most cores are just idle. If I were to do it again, I would probably aim for a 2U or greater.

- A 90 degree PCIe riser is needed for the Optane card to fit it in 1U. Funny enough, my riser was a bit too short, so the Optane is a bit crooked in the server. Eh, it works!

- The RAM was the most expensive part, at about $1,000 CAD before taxes per 64GB DIMM.

- The whole build came out to about $16,000 CAD after taxes.
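As a quick sanity check on those last two numbers: 512GB in 64GB DIMMs implies 8 DIMMs (my inference from the figures given, not a stated part count). Note that the RAM price is before taxes while the build total is after taxes, so the share computed here is only a rough lower bound.

```python
TOTAL_RAM_GB = 512
DIMM_GB = 64
DIMM_PRICE_CAD = 1000     # approximate, before taxes
BUILD_TOTAL_CAD = 16000   # approximate, after taxes

dimm_count = TOTAL_RAM_GB // DIMM_GB          # number of DIMMs implied
ram_cost_cad = dimm_count * DIMM_PRICE_CAD    # pre-tax RAM cost
ram_share = ram_cost_cad / BUILD_TOTAL_CAD    # rough fraction of the build

print(f"{dimm_count} DIMMs, about ${ram_cost_cad} CAD, "
      f"roughly {ram_share:.0%} of the build")
```

In other words, RAM alone accounts for about half of the total cost of the server.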

Part links for the geeks among us:

https://www.newegg.ca/Product/Product.aspx?Item=9SIA66K6362464

https://www.newegg.ca/Product/Product.aspx?Item=9SIABDC64E0428

https://www.newegg.ca/Product/Product.aspx?Item=N82E16820167458

https://www.newegg.ca/Product/Product.aspx?Item=N82E16835114148

https://www.newegg.ca/Product/Product.aspx?Item=N82E16811152136

https://www.newegg.ca/Product/Product.aspx?Item=N82E16820156174

https://www.newegg.ca/Product/Product.aspx?Item=9SIAGVX7U18778

https://www.nemixram.com/ml21300-944k01-103.html

Parting Thoughts

I still have a bunch of work to do getting Jussi configured correctly (especially after the port issue gets fixed). But I would absolutely love for users and dApps to test out my new infrastructure and tell me what they think! I'd love to get feedback on the performance, consistency, and latency that they see. Optimization will take a while, so the more feedback the better.

Special thanks to @gtg, @themarkymark, and @jesta for some help and advice along the way setting things up!

What's more fun than building a machine and overclocking the CPU? That being said, I hope you have good cooling for the NVMEs, sustained I/O will turn them into toasters.

Hehe, yeah! I build my gaming desktops, and I've got the experience from that. Building a server is actually quite similar.

Unfortunately, you can't overclock the multiplier on a Xeon. You can muck with the bclock, but that's just asking for instability and trouble, and for the most part it won't even work. So what you see is what you get for a Xeon.

Bummer, I didn't know that, I never built Xeon machines before. Did you pay for the hardware with bitcoin? Newegg accepts it, and I've been exclusively buying my stuff from them with bitcoin :)

Hehe, I was going to, but due to some tax reasons in my current situation I didn't. It's really awesome they have the option though!

or when possible amazon via purse.io I've got cheap optane drives this way.

Thank you! :-)

You got my witness vote for doing this. I should have given it to you before. I just have avoided giving my vote to many top 20s. Any way thank you so much for this post I enjoyed it very much.

Even though I only build AMD machines I will not hold it against you. I have a Giant silver server case I could get to you some how, it has room for an ac unit inside it.

Did you consider a water cooler on it?

Thanks! Keeping it tiny does have its advantages though, it allows you to stack more hardware in the same space :)

Water cooling is typically not used in datacenters (basically if you have a failure you don't want to destroy the other hardware). As such, getting a radiator that would fit in a server chassis might be a bit hard. Could be a fun next project though!

I wrote water cooler but actually meant liquid cooler.

I think you understood.

That big silver case could fit 2 MoBo and 2 PSU and 8 HDD and has room for 4 drawers on top for removable HDD with room to spare. I think it was originally for military purposes. I have never seen a unit like it before or after I obtained it.

Thank you for OWNING your hardware and not leasing it from a provider. I need to find a spare witness vote for you now, full nodes are very important!

Thanks! I agree owning the hardware becomes important once you're established as a witness. I don't think it's something that should be "required" from the get-go though, as especially if you're a backup witness, costs can become extremely high very quick. But after some income, it's good to move over to total control of your environment.

Thank you :-)

and, here is what you asked for

Reading the latest blocks and ops is very quick, though history seems to be a bit slow. I tried reading / streaming from an old block (2 years ago in time).

Accessing the block when @anyx was created ASCII Cinema:

Thanks for testing! Bit surprised to hear history was a bit slow. Need to figure out why. What kind of latencies are you seeing and on which requests? And from where?

I am not sure whether its latency or "seek" to find the block from the history.

https://asciinema.org/a/y1yFZNO27LIyP9hRrDW9D4VaH : This can give you details of the exact query. Essentially, I am starting to stream from the block number in which the user @anyx was created

But if we look for a block number that is newer/latest, its quite fast

I was testing from India - yea, quite far, high latency etc. But I think its more to do with either the expected behavior of how looking up for a block works with rocksdb or tinman code.

I plan to test this from a server and will update

I am using Tinman for the tests - and will be playing with Tinman for a while. So will report more findings if any.

Ah, interesting. Indeed, might have to do with how fetching a block is implemented in tinman.

Thanks for the info!

Possible - I will confirm whether the time is spent inside Tinman or in the network after running it in debug mode.

Please more hardware porn <3

This is so far above my head - but I’m glad the blockchain and dApps have someone like you advancing the field - Thank you!

Best Techie article I've ever read on Steemit. Really enjoyed it and now going back read the previous parts. True decentralisation is having your own server as opposed to renting one in a data centre? Best wishes my friend :-)

Impressive hardware ;-) Thank you ;-)

Those hardware images look like a big engine to me, LOL. I am sure this post is more for techies; however, it looks like you have done something which will be beneficial to the community.

A witness vote from me though it may not count anything :)

It works!!!! Huzzah!!!! 😍👍

Hoozah for full nodes. Greatly appreciated.

Awesome ! Thanks for working towards a truly decentralized ecosystem. You earned my witness vote.

What operating system are you running on that beast?

Ubuntu 18. I'm a research scientist, not a sysadmin, so I went for what was easiest for me :)

Happy to hear it I have been using UNIX and various Linux distro's for close to 30 years. Is that what the Witness Nodes run as well?

Many thanks for responding.

Thanks for the detail regarding hardware requirements for Steem servers. I’ve been looking all over for this info and only found it because I wanted to say ‘Hi’ and great to meet you at SteemFest. We never properly chatted but shyly said Hi many times in the INX hotel.

Hey! Nice bumping into you at INX :)

Glad this can help! I'm thinking of doing a follow-up post for a 'minimum viable' cheap server as well.

That would be great. I’ve been setting up a i7 6800k on a x99 MB with 64GB Ram for use as a witness server. I had been unsure of what was required from a storage perspective and your article has clarified things.

Is your node operational? How much did that run you?

Yep! It's been live since the post went up. Purchase costs are actually in the post :)

Dang 16k cad! Did you get a liquid cooler for the cpu yet?

Naw, it's still a blower. In a 1U system you're pretty limited for space, and also putting liquid cooling in a datacenter can be dangerous -- there's a lot more than just one server that can be damaged from a leak!

Temps are better in the datacenter though, since there's better airflow compared to an idle room.

You can mount the liquid cooler outside of the case if you really need to. Either way, nice setup buddy! If i cashed out my steem at the top I could have built a setup like that lmao. oh well maybe next run!

I enjoyed the post, it reminded me of the times I built my own desktops. I love the fact that you own the server and help decentralizing Steem. I already had voted for you, otherwise this would have been a great moment to cast my vote for you.

Thank you!

Hello @anyx,

We made an error with the transfer for the SteemFest contest prize.

@steemitboard sent you SBD instead of STEEM.

May I ask you to send them back.

Thank you!

You got my witness vote after we met last year at steemfest2.

This is a great build and look forward to the minimum viable build option.

It will help secure the blockchain.

I too like the idea of running my own gear rather than renting.

Decentralise everything.

Wow! I'm a bit late to the party but this is amazing. I had no idea you were running a full-node ...

You got it!

Thank you!

Would you happen to be doing some sort of caching? I noticed that when I pull blog/comments from your API it sometimes sends back the same data if within ~1minute? It doesn't seem to happen when I use Steemit's nodes ... I didn't fully debug this problem so it could be something on my end. But, as of right now, I do have my fulltimebots pulling from Steemit to see if problem reoccurs (which it hasn't in past 12hrs) ...

Thanks again for running this full-node tho! I have you in my config files as an alternative gateway for now.

Hum, I do have a cache but it's only for a few seconds (within the same block). However I think I might have found and fixed an issue that could serve up a stale request while it's updating it.

Try again and let me know if that solved the issue!

I think that might've fixed it. Thank you kind sir! 😁👍

I started using this.^^ (I vote for you)~Thanks.

Getting requests blocked because the user-agent HTTP header is not allowed in CORS. Would it be possible to have this allowed? The API node is otherwise not working when using Firefox...

Thank you for the node BTW!

I added user-agent to be allowed, let me know if that fixes your issue.

Looks good, thanks ;-)

Why does https://anyx.io/ respond with "Bad Request"?

@onepagex

With what request?

When I go to https://anyx.io/ I get this page:

It's not a website, it's an API node.

Oh, sorry. I thought I will find a page with docs how to use the API. Where I can find those docs?

Thanks anyway! I figured out. It's STEEM API. I ran some requests found at https://developers.steem.io/apidefinitions/

Sorry for disturbing.

Dear @anyx

Just decided to see how have you been doing, since I didn't hear from you in a while. I noticed that you didnt post anything in a while.

Did you give up on Steemit? :(

Yours,

Piotr

I'm around, and active as ever :) A lot of what I've been doing is "off-chain" though, so to speak, as I haven't found much time to do blogging. For example, I've been working on supporting API nodes. I recently fixed a large issue causing missed blocks on steem, and I added support for unix sockets in steem that will allow for much higher performance for API nodes. I also wrote a test client to evaluate API performance.

I also spend a lot of time with @steemcleaners and @cheetah, that's more my day-to-day activity here.

Dear @anyx

Thank you for your kind reply. I'm glad that you're around, but I still see that your last post is 3 months old :(

ps.

I was wondering if you would mind considering supporting my efforts in building crypto community here on Steemit.

Since HF20 took place myself together with few other active "Steemians" we decided to put extra effort into making our little community stronger. And build mostly on mutual engagement and support instead of financial rewards.

I already delegated over 3500 SP to a group of 80 steemians who struggled with low RC levels (resource credit). And the results have been great so far. One of my latest posts reached over 800 comments, which I found insane.

Those results are only proving that our efforts are worth something. The only thing is that I can do only "that much" with my current resources.

Perhaps you could consider supporting our efforts and delegating part of your SP for a period of time while you're not active on Steemit? Please let me know what you think. I will appreciate any feedback, regardless of your decision.

Yours

Piotr

Like I said, I'm active (I curate every day!) but I just haven't had the time to blog.

And sounds cool! I'll check it out if I decide to curate less.

Hi @anyx

Thanks for replying to my previous comment. I always appreciate people who engage back.

Will follow you closely.

Yours

Piotr

@anyx Hi, the person who flagged your comment and mine has something you can flag back right here https://steemit.com/tlahuelilpan/@rihanna3/tlahuelilpan

I switched my esteem app to your API node :) keep it up and thanks for sharing

@anyx. What will it take to get your cat to leave us alone?

We’re changing the opening paragraph every week. We’re featuring new chefs every week. We’re personally reaching out to you every week. What else do we need to do to get you to call off your cat?

Thank you very much for running this, the more nodes the better for decentralized networks.